Event-driven architecture was an evolutionary step toward cloud-native applications, and supports serverless applications. Events connect microservices, letting you decouple functions in space and time and make your applications more resilient and elastic.

But events come with challenges. One of the first challenges for a development team is how to describe events in a repeatable, structured form. Another challenge is how to work on applications that consume events without having to wait for another team to hand you the applications that produce those events.

This article explores those two challenges and shows how to simulate events using CloudEvents, AsyncAPI, and Microcks. CloudEvents and AsyncAPI are complementary specifications that you can combine to help define an event-driven architecture. Microcks lets you simulate CloudEvents, which speeds up development and protects the autonomy of development teams.

CloudEvents or AsyncAPI?

New standards such as CloudEvents or AsyncAPI have emerged to address the need to describe events in a structured format. People often ask: "Should I use CloudEvents or AsyncAPI?" There's a widespread belief that CloudEvents and AsyncAPI compete within the same scope. I see things differently, and in this article, I'll explain how the two standards work well together.

What is CloudEvents?

The essence of CloudEvents can be found in a statement from its website:

CloudEvents is a specification for describing event data in common formats to provide interoperability across services, platforms, and systems.

The purpose of CloudEvents is to establish a common format for event data description. CloudEvents is part of the Cloud Native Computing Foundation's Serverless Working Group. Many integrations already exist within Knative Eventing (or Red Hat OpenShift Serverless), TriggerMesh, and Azure Event Grid, allowing true cross-vendor platform interoperability.

The CloudEvents specification is focused on events and defines a common envelope (set of attributes) for your application event. As of today, CloudEvents proposes two different content modes for transferring events: structured and binary.

The CloudEvents repository offers an example of a JSON structure containing event attributes. This is a structured CloudEvent. The event data in the example is XML, contained in the value <much wow=\"xml\"/>, but it can be of any type. CloudEvents takes care of defining meta-information about your event, but does not help you define the actual event content:

    {
        "specversion" : "1.0",
        "type" : "com.github.pull.create",
        "source" : "https://github.com/cloudevents/spec/pull/123",
        "id" : "A234-1234-1234",
        "time" : "2020-04-05T17:31:00Z",
        "comexampleextension1" : "value",
        "comexampleextension2" : {
            "othervalue": 5
        },
        "datacontenttype" : "text/xml",
        "data" : "<much wow=\"xml\"/>"
    }
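To make the envelope idea concrete, here is a minimal Python sketch (it does not use any CloudEvents SDK) that round-trips a structured event through JSON and checks for the four context attributes that CloudEvents 1.0 requires: specversion, id, source, and type. The helper name missing_attributes is my own invention, and the event values are borrowed from the example above.

```python
import json

# Context attributes that CloudEvents 1.0 marks as required.
REQUIRED_ATTRIBUTES = ("specversion", "id", "source", "type")


def missing_attributes(event: dict) -> list:
    """Return the required attributes that are absent or empty, in a fixed order."""
    return [name for name in REQUIRED_ATTRIBUTES if not event.get(name)]


# A structured-mode event: envelope attributes and data share one JSON document.
event = {
    "specversion": "1.0",
    "type": "com.github.pull.create",
    "source": "https://github.com/cloudevents/spec/pull/123",
    "id": "A234-1234-1234",
    "time": "2020-04-05T17:31:00Z",
    "datacontenttype": "text/xml",
    "data": '<much wow="xml"/>',
}

# Serialize and parse again, as a producer and a consumer would.
decoded = json.loads(json.dumps(event))

# Drop the id to show what an incomplete envelope looks like.
incomplete = {key: value for key, value in decoded.items() if key != "id"}

print(missing_attributes(decoded))
print(missing_attributes(incomplete))
```

Note that the check says nothing about the data field itself. That is exactly the gap the rest of this article fills with AsyncAPI.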

What is AsyncAPI?

To understand AsyncAPI, again we turn to its website:

AsyncAPI is an industry standard for defining asynchronous APIs. Our long-term goal is to make working with EDAs as easy as it is to work with REST APIs.

The "API" stands for application programming interface and embodies an application's interactions and capabilities. AsyncAPI can be seen as the sister specification of OpenAPI, but targeting asynchronous protocols based on event brokering.

AsyncAPI focuses on the application and the communication channels it uses. Unlike CloudEvents, AsyncAPI does not define how your events should be structured. However, AsyncAPI provides an extended means to precisely define both the meta-information and the actual content of an event.

An example in YAML can be found on GitHub. This example describes an event titled User signed-up event, published to the user/signedup channel. These events have three properties: fullName, email, and age. Each property is defined using semantics from JSON Schema. Although it's not shown in this example, AsyncAPI also allows you to specify event headers and whether these events will be available through different protocol bindings such as Kafka, AMQP, MQTT, or WebSocket:

    asyncapi: 2.0.0
    id: urn:com.asyncapi.examples.user
    info:
      title: User signed-up event
      version: 0.1.1
    channels:
      user/signedup:
        publish:
          message:
            payload:
              type: object
              properties:
                fullName:
                  type: string
                email:
                  type: string
                  format: email
                age:
                  type: integer
                  minimum: 18

CloudEvents with AsyncAPI

The explanations and examples I've shown reveal that the CloudEvents and AsyncAPI standards tackle different scopes, so they do not have to be treated as mutually exclusive. You can actually combine them to achieve a complete event specification, including application definition, channels description, structured envelope, and detailed functional data carried by the event.

The general idea behind the combination is to use an AsyncAPI specification as a hosting document. It holds references to CloudEvents attributes and adds more details about the event format.

You can use two mechanisms in AsyncAPI to ensure this combination. Choosing the correct mechanism might depend on the protocol you choose to convey your events. Things aren't perfect yet and you'll have to make a choice.

Let's take the example of using Apache Kafka to distribute events:

- In the structured content mode, CloudEvents meta-information is tangled up with the data in the message's value. For that mode, we'll use the JSON Schema composition mechanism that is accessible from AsyncAPI.

- In the binary content mode (for which we can use Avro), meta-information for each event is dissociated from the message value and inserted, instead, into the header of each message. For that, we'll use the MessageTrait application mechanism present in AsyncAPI.
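The difference between the two modes can be sketched in a few lines of Python. This is an illustration under assumptions, not a Kafka client: to_structured and to_binary are hypothetical names, a message is modeled as a plain (headers, value) pair, and the binary payload is serialized as JSON rather than Avro to keep the sketch dependency-free. The ce_ header prefix and the application/cloudevents+json content type are the ones that appear in the definitions in this article.

```python
import json


def to_structured(attributes: dict, data: dict) -> tuple:
    """Structured mode: attributes and data travel together in the message value."""
    envelope = dict(attributes)
    envelope["data"] = data
    headers = {"content-type": "application/cloudevents+json; charset=UTF-8"}
    return headers, json.dumps(envelope).encode("utf-8")


def to_binary(attributes: dict, data: dict, content_type: str = "application/json") -> tuple:
    """Binary mode: attributes become ce_-prefixed headers; the value holds only the data."""
    headers = {f"ce_{name}": str(value) for name, value in attributes.items()}
    headers["content-type"] = content_type
    return headers, json.dumps(data).encode("utf-8")


attributes = {
    "specversion": "1.0",
    "type": "io.microcks.example.user-signedup",
    "source": "/mycontext/subcontext",
    "id": "A234-1234-1234",
}
data = {"fullName": "John Doe", "email": "john@microcks.io", "age": 36}

structured_headers, structured_value = to_structured(attributes, data)
binary_headers, binary_value = to_binary(attributes, data)

print(structured_headers, structured_value)
print(binary_headers, binary_value)
```

In the structured case a consumer has to parse the value before it can see the event type. In the binary case the type is available from the headers alone, which is convenient for routing.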

Structured content mode

This section rewrites the previous AsyncAPI example to use CloudEvents in structured content mode. The resulting definition contains the following elements worth noting:

- The definition of headers that starts on line 16 contains our application's custom-header, as well as the mandatory CloudEvents content-type.

- A schemas field refers to the CloudEvents specification on line 33, re-using this specification as a basis for our message.

- The schemas field also refers to a refined version of the data property description on line 36.

Here is the definition:

    asyncapi: '2.0.0'
    id: 'urn:io.microcks.example.user-signedup'
    info:
      title: User signed-up CloudEvents API structured
      version: 0.1.3
    defaultContentType: application/json
    channels:
      user/signedup:
        subscribe:
          message:
            bindings:
              kafka:
                key:
                  type: string
                  description: Timestamp of event as milliseconds since 1st Jan 1970
            headers:
              type: object
              properties:
                custom-header:
                  type: string
                content-type:
                  type: string
                  enum:
                    - 'application/cloudevents+json; charset=UTF-8'
            payload:
              $ref: '#/components/schemas/userSignedUpPayload'
            examples: [...]
    components:
      schemas:
        userSignedUpPayload:
          type: object
          allOf:
            - $ref: 'https://raw.githubusercontent.com/cloudevents/spec/v1.0.1/spec.json'
          properties:
            data:
              $ref: '#/components/schemas/userSignedUpData'
        userSignedUpData:
          type: object
          properties:
            fullName:
              type: string
            email:
              type: string
              format: email
            age:
              type: integer
              minimum: 18

Binary content mode

Now, we'll apply the binary content mode to the AsyncAPI format. The resulting definition shows that event properties have moved out of this format. Other important things to notice here are:

- A trait is applied at the message level on line 16. The trait resource is a partial AsyncAPI document containing a MessageTrait definition. This trait will bring in all the mandatory attributes (ce_*) from CloudEvents. It is the equivalent of the CloudEvents JSON Schema.

- This time we're specifying our event payload using an Avro schema as specified on line 25.

Here is the definition:

    asyncapi: '2.0.0'
    id: 'urn:io.microcks.example.user-signedup'
    info:
      title: User signed-up CloudEvents API binary
      version: 0.1.3
    channels:
      user/signedup:
        subscribe:
          message:
            bindings:
              kafka:
                key:
                  type: string
                  description: Timestamp of event as milliseconds since 1st Jan 1970
            traits:
              - $ref: 'https://raw.githubusercontent.com/microcks/microcks-quickstarters/main/cloud/cloudevents/cloudevents-v1.0.1-asyncapi-trait.yml'
            headers:
              type: object
              properties:
                custom-header:
                  type: string
            contentType: avro/binary
            schemaFormat: application/vnd.apache.avro+json;version=1.9.0
            payload:
              $ref: './user-signedup.avsc#/User'
            examples: [...]

What are the benefits of combining CloudEvents with AsyncAPI?

Whichever content mode you choose, you now have a comprehensive description of your event and all the elements of your event-driven architecture. The CloudEvents part of the description guarantees low-level interoperability, so that events can be routed and can trigger a function in a serverless world. In addition, the AsyncAPI part provides a complete description of the carried data, which will be of great help for applications consuming and processing events.
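Here is a rough Python sketch of how a consumer might use both halves of the contract: the CloudEvents ce_type header to route an event, and the data definition (fullName, email, age with a minimum of 18) to check the payload. Everything in it is an assumption made for illustration: the function names, the HANDLERS table, and the simplified email pattern, which only approximates the email format from JSON Schema. As in the schema, no property is treated as required.

```python
import re

# Loose approximation of an email address; real format checks are stricter.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate_user_signed_up(data: dict) -> list:
    """Check the payload properties that are present against the data definition."""
    errors = []
    if "fullName" in data and not isinstance(data["fullName"], str):
        errors.append("fullName must be a string")
    if "email" in data:
        email = data["email"]
        if not isinstance(email, str) or not EMAIL_PATTERN.match(email):
            errors.append("email must be an email address")
    if "age" in data:
        age = data["age"]
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(age, bool) or not isinstance(age, int):
            errors.append("age must be an integer")
        elif age < 18:
            errors.append("age must be at least 18")
    return errors


# Routing table keyed by the CloudEvents type attribute (binary mode header).
HANDLERS = {"io.microcks.example.user-signedup": validate_user_signed_up}


def handle(headers: dict, data: dict) -> list:
    """Route on ce_type, then validate the payload; return a list of problems."""
    event_type = headers.get("ce_type")
    handler = HANDLERS.get(event_type)
    if handler is None:
        return [f"unsupported event type: {event_type}"]
    return handler(data)


headers = {"ce_type": "io.microcks.example.user-signedup"}
print(handle(headers, {"fullName": "John Doe", "email": "john@microcks.io", "age": 36}))
print(handle(headers, {"fullName": "Jane Doe", "email": "jane@microcks.io", "age": 17}))
```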

Simulating CloudEvents with Microcks

Let's tackle the second challenge stated at the beginning of this article: How can developers efficiently work as a team without having to wait for someone else's events? We've seen how to fully describe events. However, it would be even better to have a pragmatic approach for leveraging this CloudEvents-plus-AsyncAPI contract. That's where Microcks comes to the rescue.

What is Microcks?

Microcks is an open source Kubernetes-native tool for mocking/simulating and testing APIs. One purpose of Microcks is to turn your API contract (such as OpenAPI, AsyncAPI, or a Postman collection) into live mocks in seconds. Once it has imported your AsyncAPI contract, Microcks starts producing mock events on a message broker at a defined frequency.

Using Microcks, you can simulate CloudEvents in seconds, without writing a single line of code. Microcks allows the team that is relying on input events to start working immediately. They do not have to wait for the team that is coding the application that will publish events.

Using Microcks for CloudEvents

To produce CloudEvents events with Microcks, simply re-use examples by adding them to your contract. We omitted the examples property before, but we'll now add that property to our example in binary content mode on line 26:

    asyncapi: '2.0.0'
    id: 'urn:io.microcks.example.user-signedup'
    info:
      title: User signed-up CloudEvents API binary
      version: 0.1.3
    channels:
      user/signedup:
        subscribe:
          message:
            bindings:
              kafka:
                key:
                  type: string
                  description: Timestamp of event as milliseconds since 1st Jan 1970
            traits:
              - $ref: 'https://raw.githubusercontent.com/microcks/microcks-quickstarters/main/cloud/cloudevents/cloudevents-v1.0.1-asyncapi-trait.yml'
            headers:
              type: object
              properties:
                custom-header:
                  type: string
            contentType: avro/binary
            schemaFormat: application/vnd.apache.avro+json;version=1.9.0
            payload:
              $ref: './user-signedup.avsc#/User'
            examples:
              - john:
                  summary: Example for John Doe user
                  headers:
                    ce_specversion: "1.0"
                    ce_type: "io.microcks.example.user-signedup"
                    ce_source: "/mycontext/subcontext"
                    ce_id: "{{uuid()}}"
                    # Assuming Java-style date patterns, 'SS' is fraction-of-second; 'ss' would give seconds.
                    ce_time: "{{now(yyyy-MM-dd'T'HH:mm:SS'Z')}}"
                    content-type: application/avro
                    sentAt: "2020-03-11T08:03:38Z"
                  payload:
                    fullName: John Doe
                    email: john@microcks.io
                    age: 36

Key points to note are:

- You can put in as many examples as you want because this property becomes a map in AsyncAPI.

- You can specify both headers and payload values.

- Even if payload will be Avro-binary encoded, you use YAML or JSON to specify examples.

- You can use templating functions through the {{ }} notation to introduce random or dynamic values.
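To give a feel for what such templating does, here is a toy Python sketch of expanding {{ }} expressions. It is not how Microcks implements templating, and it is much less capable: the render function and the FUNCTIONS table are hypothetical, only argument-free uuid() and now() calls are handled, and the timestamp format is a choice made for this sketch.

```python
import re
import uuid
from datetime import datetime, timezone

# Hypothetical function table; each entry produces a fresh value per call.
FUNCTIONS = {
    "uuid": lambda: str(uuid.uuid4()),
    "now": lambda: datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
}

# Matches expressions such as {{uuid()}} or {{ now() }} (no arguments).
EXPRESSION = re.compile(r"\{\{\s*(\w+)\(\)\s*\}\}")


def render(template: str) -> str:
    """Replace each known {{name()}} expression with a freshly generated value."""
    def substitute(match):
        function = FUNCTIONS.get(match.group(1))
        # Leave unknown expressions untouched rather than guessing.
        return function() if function else match.group(0)

    return EXPRESSION.sub(substitute, template)


print(render("ce_id: {{uuid()}}"))
print(render("ce_time: {{now()}}"))
```

Because the values are generated at render time, two events produced from the same example differ in their dynamic fields while the static fields stay identical.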

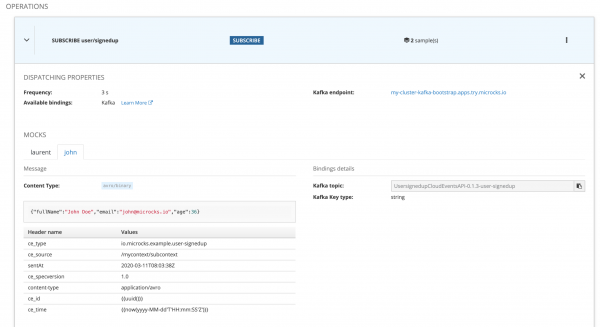

Once the schema is imported into Microcks, it discovers the API definition as well as the different examples. Microcks starts immediately producing mock events on the Kafka broker it is connected to—every three seconds in our example (see Figure 1).

Since release 1.2.0, Microcks also supports the connection to a schema registry. Therefore, it publishes the Avro schema used at the mock-message publication time. You can use the kafkacat command-line interface (CLI) tool to connect to the Kafka broker and registry, and then inspect the content of mock events. Here we're using the Apicurio service registry:

    $ kafkacat -b my-cluster-kafka-bootstrap.apps.try.microcks.io:9092 -t UsersignedupCloudEventsAPI_0.1.3_user-signedup -s value=avro -r http://apicurio-registry.apps.try.microcks.io/api/ccompat -o end -f 'Headers: %h - Value: %s\n'
    --- OUTPUT
    % Auto-selecting Consumer mode (use -P or -C to override)
    % Reached end of topic UsersignedupCloudEventsAPI_0.1.3_user-signedup [0] at offset 276
    Headers: sentAt=2020-03-11T08:03:38Z,content-type=application/avro,ce_id=7a8cc388-5bfb-42f7-8361-0efb4ce75c20,ce_type=io.microcks.example.user-signedup,ce_specversion=1.0,ce_time=2021-03-09T15:17:762Z,ce_source=/mycontext/subcontext - Value: {"fullName": "John Doe", "email": "john@microcks.io", "age": 36}
    % Reached end of topic UsersignedupCloudEventsAPI_0.1.3_user-signedup [0] at offset 277
    Headers: ce_id=dde8aa04-2591-4144-aa5b-f0608612b8c5,sentAt=2020-03-11T08:03:38Z,content-type=application/avro,ce_time=2021-03-09T15:17:733Z,ce_type=io.microcks.example.user-signedup,ce_specversion=1.0,ce_source=/mycontext/subcontext - Value: {"fullName": "John Doe", "email": "john@microcks.io", "age": 36}
    % Reached end of topic UsersignedupCloudEventsAPI_0.1.3_user-signedup [0] at offset 279

We can check that the emitted events respect both the CloudEvents meta-information structure and the AsyncAPI data definition. Moreover, each event has different random attributes, which allows it to simulate diversity and variation for the consuming application.
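That check can also be automated. The Python sketch below parses one line in the 'Headers: %h - Value: %s' format used above and reports any required CloudEvents header that is missing. The function names are my own, and the parsing is deliberately naive: it assumes header values contain no commas and that the value is JSON text, as in the output shown.

```python
import json

# Required CloudEvents attributes, as they appear in Kafka binary-mode headers.
REQUIRED_HEADERS = ("ce_specversion", "ce_id", "ce_source", "ce_type")

PREFIX = "Headers: "
SEPARATOR = " - Value: "


def parse_line(line: str) -> tuple:
    """Split a formatted kafkacat line into a headers dict and a decoded value."""
    headers_part, value_part = line.split(SEPARATOR, 1)
    if not headers_part.startswith(PREFIX):
        raise ValueError("line does not start with 'Headers: '")
    pairs = headers_part[len(PREFIX):].split(",")
    headers = dict(pair.split("=", 1) for pair in pairs)
    return headers, json.loads(value_part)


def missing_headers(headers: dict) -> list:
    """Return the required CloudEvents headers that are absent."""
    return [name for name in REQUIRED_HEADERS if name not in headers]


# First event line from the output above, split across literals for readability.
SAMPLE = (
    "Headers: sentAt=2020-03-11T08:03:38Z,content-type=application/avro,"
    "ce_id=7a8cc388-5bfb-42f7-8361-0efb4ce75c20,"
    "ce_type=io.microcks.example.user-signedup,ce_specversion=1.0,"
    "ce_time=2021-03-09T15:17:762Z,ce_source=/mycontext/subcontext"
    ' - Value: {"fullName": "John Doe", "email": "john@microcks.io", "age": 36}'
)

headers, value = parse_line(SAMPLE)
print(missing_headers(headers))
print(value)
```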

Conclusion

This article has shown how to solve some of the challenges that come with an event-driven architecture.

First, I described how recent standards, CloudEvents and AsyncAPI, focus on different scopes: the event for CloudEvents and the application for AsyncAPI.

Then I demonstrated how to combine the specifications to provide a comprehensive description of all the elements involved in an event-driven architecture: application definition, channels description, structured envelope, and detailed functional data carried by the event. The specifications are complementary, so you can use one or both depending on how deep you want to go in your formal description.

Finally, you've seen how to use Microcks to simulate any events based on AsyncAPI, including those that follow the CloudEvents format, just by using examples. Microcks answers the challenge of working, testing, and validating autonomously when different development teams are using an event-driven architecture.

I hope you learned something new—if so, please consider reacting, commenting, or sharing. Thanks for reading.

Last updated: October 29, 2024