Just-in-time (JIT) compilation is central to peak performance in modern virtual machines, but it comes with trade-offs. This article introduces you to JIT compilation in HotSpot, OpenJDK's Java virtual machine. After reading the article, you will have an overview of HotSpot's multi-tiered execution model and how it balances the resources required by your Java applications and by the compiler itself. You'll also see two examples that demonstrate how a JIT compiler uses advanced techniques—deoptimization and speculation—to boost application performance.

How a JIT compiler works

At its core, a JIT compiler relies on the same well-known compilation techniques that an offline compiler such as the GNU Compiler Collection (GCC) uses. The primary difference is that a just-in-time compiler runs in the same process as the application and competes with the application for resources. As a consequence, a different set of trade-offs in the JIT compiler design applies. Mainly, compilation time is more of an issue for a JIT compiler than for an offline compiler, but new possibilities for optimization—such as deoptimization and speculation—open up, as well.

The JIT compiler in OpenJDK

A JIT compiler for Java takes .class files as input rather than Java source code, which is consumed by javac. In this way, it differs from a compiler like GCC, which directly consumes the source code that you write. The JIT compiler's role is to turn class files (composed of bytecode, which is the JVM's instruction set) into machine code that the CPU executes directly. Another difference from an offline compiler is that the JVM verifies class files at load time, so when it's time to compile, there's little need for parsing or verification.

Other than performance variations, the JIT compiler's execution is transparent to the end user. It can, however, be observed by running the java command with diagnostic options. As an example, take this simple HelloWorld program:

    public class HelloWorld {
        public static void main(String[] args) {
            System.out.println("Hello world!");
        }
    }

Here's the output from running this program with the version of OpenJDK that happens to be installed on my system:

    $ java -XX:+PrintCompilation HelloWorld
    50 1 3 java.lang.Object::<init> (1 bytes)
    50 2 3 java.lang.String::hashCode (55 bytes)
    51 3 3 java.lang.String::indexOf (70 bytes)
    51 4 3 java.lang.String::charAt (29 bytes)
    51 5 n 0 java.lang.System::arraycopy (native) (static)
    52 6 3 java.lang.Math::min (11 bytes)
    52 7 3 java.lang.String::length (6 bytes)
    52 8 3 java.lang.AbstractStringBuilder::ensureCapacityInternal (27 bytes)
    52 9 1 java.lang.Object::<init> (1 bytes)
    53 1 3 java.lang.Object::<init> (1 bytes) made not entrant
    55 10 3 java.lang.String::equals (81 bytes)
    57 11 1 java.lang.ref.Reference::get (5 bytes)
    58 12 1 java.lang.ThreadLocal::access$400 (5 bytes)
    Hello world!

Most lines of the diagnostic output list a method that's JIT-compiled. However, a quick scan of the output reveals that neither the main method nor println is compiled. Instead, a number of methods not present in the HelloWorld class were compiled. This brings up a few questions.

Where do those methods come from, if not from HelloWorld? Some code supporting the JVM startup, as well as most of the Java standard library, is implemented in Java, so it is subject to JIT compilation.

If none of its methods are compiled, then how does the HelloWorld class run? In addition to a JIT compiler, HotSpot embeds an interpreter, which encodes the execution of each JVM bytecode in the most generic way. All methods start out interpreted, with bytecodes executed one at a time. Compiled code, by contrast, is tailored to a particular Java method, so optimizations can be applied across bytecode boundaries.

Why not have the JIT compiler prepare the code for faster results? That would require the user code to wait for the JIT'ed code to be ready, which would cause a noticeable pause because compilation takes time.

HotSpot's JIT execution model

In practice, the HotSpot JVM's execution model is the result of four observations taken together:

- Most code is executed only rarely, so compiling it would waste resources that the JIT compiler needs for the code that matters.

- Only a subset of methods is run frequently.

- The interpreter is ready right away to execute any code.

- Compiled code is much faster, but producing it is resource hungry, and it is available only once compilation has finished, which takes time.

The resulting execution model could be summarized as follows:

- Code starts executing interpreted with no delay.

- Methods that are found to be executed frequently (hot) are JIT compiled.

- Once compiled code is available, the execution switches to it.

Multi-tiered execution

In HotSpot, the interpreter instruments the code that it executes; that is, it maintains a per-method count of the number of times a method is entered. Because hot methods usually have loops, it also collects the number of times a branch back to the start of a loop is taken. On method entry, the interpreter adds the two numbers and if the result crosses a threshold, it enqueues the method for compilation. A compiler thread running concurrently with threads executing Java code then processes the compilation request. While compilation is in progress, interpreted execution continues, including for methods in the process of being JIT'ed. Once the compiled code is available, the interpreter branches off to it.
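The counting scheme described above can be modeled with a small sketch. This is a toy model under stated assumptions, not HotSpot's implementation: the class name `HotnessCounters`, its method names, and the threshold of 1,000 are all made up for illustration, and real thresholds differ and are adjusted at run time.

```java
import java.util.ArrayDeque;
import java.util.HashMap;
import java.util.Map;
import java.util.Queue;

// Toy model of interpreter-side counters. All names and the threshold are
// hypothetical; HotSpot's real counters and compilation policy are more involved.
class HotnessCounters {
    static final int COMPILE_THRESHOLD = 1_000; // assumed value, for illustration only

    private final Map<String, Integer> invocations = new HashMap<>();
    private final Map<String, Integer> backBranches = new HashMap<>();
    private final Queue<String> compileQueue = new ArrayDeque<>();

    // Called each time a loop in the method branches back to its start.
    void onBackBranch(String method) {
        backBranches.merge(method, 1, Integer::sum);
    }

    // Called on method entry: bump the invocation count, add both counters,
    // and enqueue the method once the sum crosses the threshold.
    void onMethodEntry(String method) {
        int calls = invocations.merge(method, 1, Integer::sum);
        int loops = backBranches.getOrDefault(method, 0);
        if (calls + loops >= COMPILE_THRESHOLD && !compileQueue.contains(method)) {
            compileQueue.add(method);
        }
    }

    boolean isQueuedForCompilation(String method) {
        return compileQueue.contains(method);
    }

    public static void main(String[] args) {
        HotnessCounters counters = new HotnessCounters();
        counters.onMethodEntry("Example::sum");      // first call: still cold
        for (int i = 0; i < 2_000; i++) {
            counters.onBackBranch("Example::sum");   // a long-running loop
        }
        System.out.println(counters.isQueuedForCompilation("Example::sum")); // false
        counters.onMethodEntry("Example::sum");      // next entry sees the large sum
        System.out.println(counters.isQueuedForCompilation("Example::sum")); // true
    }
}
```

In this model the check happens only on method entry, as in the description above, so a method with a long loop is enqueued on its next invocation rather than in the middle of the loop.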

So, the trade-off is roughly between the fast-to-start-but-slow-to-execute interpreter and the slow-to-start-but-fast-to-execute compiled code. How long compiled code takes to become available is, to some extent, under the virtual machine designer's control: the compiler can be designed to optimize less (in which case code is available sooner but doesn't perform as well) or more (leading to faster code at a later time). A practical design that takes advantage of this observation is a multi-tier system.

The three tiers of execution

HotSpot has a three-tiered system consisting of the interpreter, the quick compiler, and the optimizing compiler. Each tier represents a different trade-off between the delay of execution and the speed of execution. Java code starts execution in the interpreter. Then, when a method becomes warm, it's enqueued for compilation by the quick compiler. Execution switches to that compiled code when it's ready. If a method executing in the second tier becomes hot, then it's enqueued for compilation by the optimizing compiler. Execution continues in the second-tier compiled code until the faster code is available. Code compiled at the second tier has to identify when a method becomes hot, so it also has to increment invocation and back-branch counters.

Note that this description is simplified: The implementation tries to not overwhelm compiler threads with requests, and to balance execution speed with compilation load. As a consequence, thresholds that trigger compilations are not fixed and the second tier is actually split into several sub-tiers.

In HotSpot, for historical reasons, the second tier is known as C1 or the client compiler and the optimizing tier is known as C2, or the server compiler.

In the HelloWorld example, the third column of numbers in the diagnostic output identifies the tier at which code is compiled. Tiers 1 to 3 are subtiers of the low-tier compiler. Tier 4 is the optimizing compiler. As can be seen in the output, that example is so short-lived that no method reaches the optimizing compiler.
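A longer-running program makes it easier to watch a method move up the tiers. The sketch below is an experiment to try, not a guaranteed reproduction: the class `HotLoop` and its method `sum` are invented for this example, and whether and when `HotLoop::sum` appears at tier 4 depends on the JVM version, its flags, and the machine.

```java
// Hypothetical example program. After compiling it, run:
//   java -XX:+PrintCompilation HotLoop
class HotLoop {
    // A small method with a loop, so both the invocation counter and the
    // back-branch counter grow quickly.
    static long sum(int n) {
        long total = 0;
        for (int i = 0; i < n; i++) {
            total += i;
        }
        return total;
    }

    public static void main(String[] args) {
        long result = 0;
        // Call the method many times so that it has a chance to become hot.
        for (int i = 0; i < 20_000; i++) {
            result += sum(1_000);
        }
        System.out.println(result);
    }
}
```

In the diagnostic output, look for the lines that name `HotLoop::sum` and compare the tier column across them.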

Deoptimization and speculation

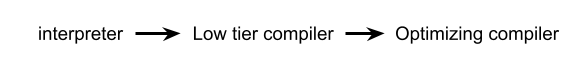

Figure 1 shows a simplified diagram of the state of a method execution.

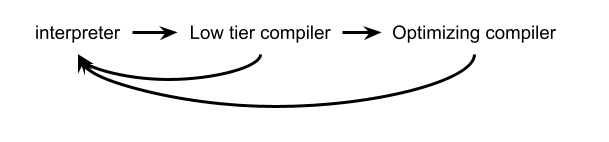

State changes happen left to right. This picture is incomplete because, perhaps surprisingly, state transitions also exist right to left (that is, from more optimized code to less optimized code), as shown in Figure 2.

In HotSpot jargon, that process is called deoptimization. When a thread deoptimizes, it stops executing a compiled method at some point in the method and resumes execution in the same Java method at the exact same point, but in the interpreter.

Why would a thread stop executing compiled code to switch to much slower interpreted code? There are two reasons. First, it is sometimes convenient to not overcomplicate the compiler with support for some feature's uncommon corner case. Rather, when that particular corner case is encountered, the thread deoptimizes and switches to the interpreter.

The second and main reason is that deoptimization allows the JIT compilers to speculate. When speculating, the compiler makes assumptions that should prove correct given the current state of the virtual machine, and that should let it generate better code. However, the compiler can't prove its assumptions are true. If an assumption is invalidated, a thread executing a method compiled under that assumption deoptimizes so that it doesn't run code that has become incorrect.

Example 1: Null checks in the C2 tier

An example of speculation that C2 uses extensively is its handling of null checks. In Java, every field or array access is guarded by a null check. Here is an example in pseudocode:

    if (object == null) {
        throw new NullPointerException();
    }
    val = object.field;

It's very uncommon for a NullPointerException (NPE) to not be caused by a programming error, so C2 speculates—though it cannot prove it—that NPEs never occur. Here's that speculation in pseudocode:

    if (object == null) {
        deoptimize();
    }
    val = object.field;

If NPEs never occur, all the logic for exception creation, throwing, and handling is not needed. What if a null object is seen at the field access in the pseudocode? The thread deoptimizes, a record is made of the failed speculation, and the compiled method's code is dropped. On the next JIT compilation of that same method, C2 will check for a failed speculation record before speculating again that no null object is seen at the field access.
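The cycle of speculating, trapping, recording the failure, and recompiling can be imitated in plain Java. The following is only a toy model: every name in it (`NullCheckSpeculation`, `Deoptimize`, `speculativeGetX`, and so on) is hypothetical, and a Java exception merely stands in for a real deoptimization, which HotSpot implements very differently.

```java
// Toy model of speculate, trap, record, and recompile. All names are
// hypothetical; a Java exception merely stands in for a real deoptimization.
class NullCheckSpeculation {
    static class Point {
        int x = 7;
    }

    // Signals that the "compiled" code met a case it was not compiled for.
    static class Deoptimize extends RuntimeException {}

    private boolean speculationFailed = false; // the failed-speculation record
    int deoptCount = 0;

    // "Compiled" code that speculates p is never null: no NPE logic at all.
    private static int speculativeGetX(Point p) {
        if (p == null) {
            throw new Deoptimize();
        }
        return p.x;
    }

    // Generic code with full Java semantics; stands in for the interpreter
    // and for code recompiled without the speculation.
    private static int genericGetX(Point p) {
        if (p == null) {
            throw new NullPointerException();
        }
        return p.x;
    }

    int getX(Point p) {
        if (!speculationFailed) {
            try {
                return speculativeGetX(p);
            } catch (Deoptimize trap) {
                speculationFailed = true; // remember, so we don't speculate again
                deoptCount++;
            }
        }
        return genericGetX(p);
    }

    public static void main(String[] args) {
        NullCheckSpeculation method = new NullCheckSpeculation();
        System.out.println(method.getX(new Point())); // 7
        for (int i = 0; i < 2; i++) {
            try {
                method.getX(null);
            } catch (NullPointerException e) {
                // Prints "NPE, deopts so far: 1" on both iterations.
                System.out.println("NPE, deopts so far: " + method.deoptCount);
            }
        }
    }
}
```

The caller always observes ordinary Java behavior (a value or a `NullPointerException`); only the path taken to get there changes after the first failed speculation.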

Example 2: Class hierarchy analysis

Now, let's look at another example, starting with the following code snippet:

    class C {
        void virtualMethod() {}
    }

    void compiledMethod(C c) {
        c.virtualMethod();
    }

The call in compiledMethod() is a virtual call. With only class C loaded but none of its potential subclasses, that call can only invoke C.virtualMethod(). When compiledMethod() is JIT compiled, the compiler could take advantage of that fact to devirtualize the call. But what if, at a later point, a subclass of class C is loaded? Executing compiledMethod(), which is compiled under the assumption that C has no subclass, could then cause an incorrect execution.

The solution to that problem is for the JIT compiler to record a dependency between the compiled method of compiledMethod() and the fact that C has no subclass. The compiled method's code itself doesn't embed any extra runtime check and is generated as if C had no subclass. When a class is loaded, dependencies are checked. If a compiled method with a conflicting dependency is found, that method is marked for deoptimization. If the method is on the call stack of a thread, when execution returns to it, it immediately deoptimizes. The compiled method for compiledMethod() is also made not entrant, so no thread can invoke it. It will eventually be reclaimed. A new compiled method can be generated that will take the updated class hierarchy into account. This technique is known as class hierarchy analysis (CHA).
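The scenario can be turned into a small runnable experiment. This is a sketch under assumptions: the names `ChaDemo`, `D`, `Loader`, and `warmUp` are invented here, the methods return an `int` (unlike the snippet above) so the effect is visible, and the sketch assumes the JVM loads `D` lazily, which is typical for HotSpot but not something the program can guarantee. Whether the call is actually devirtualized and later deoptimized depends on the JVM and its flags.

```java
// Hypothetical demo. Running it with -XX:+PrintCompilation may show
// "made not entrant" lines, but that depends on the JVM and its settings.
class ChaDemo {
    static class C {
        int virtualMethod() { return 1; }
    }

    static class D extends C {
        @Override
        int virtualMethod() { return 2; }
    }

    // Keeps every mention of D out of main, so that D is typically not
    // loaded until makeD() is first called (an assumption about lazy loading).
    static class Loader {
        static C makeD() { return new D(); }
    }

    static int compiledMethod(C c) {
        // A virtual call: while C has no loaded subclass, a JIT compiler
        // may treat it as a direct call to C.virtualMethod().
        return c.virtualMethod();
    }

    static long warmUp(int iterations) {
        C c = new C();
        long total = 0;
        for (int i = 0; i < iterations; i++) {
            total += compiledMethod(c);
        }
        return total;
    }

    public static void main(String[] args) {
        System.out.println(warmUp(100_000));                 // 100000
        System.out.println(compiledMethod(Loader.makeD()));  // 2
    }
}
```

Whatever the JIT compiler does behind the scenes, the program prints the same results; speculation and deoptimization change performance, never behavior.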

Synchronous and asynchronous deoptimization

Speculation is a technique that's used extensively in the C2 compiler beyond these two examples. The interpreter and the low-tier compiler actually collect profile data (at subtype checks, branches, method invocations, and so on) that C2 leverages for speculation. Profile data either count the number of occurrences of an event (the number of times a method is invoked, for instance) or collect constants (for example, the type of an object seen at a subtype check).
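As a rough illustration of both kinds of profile data, the sketch below records the classes of objects that reach one profiled location and how often each was seen. It is a toy model only: `TypeProfile` and its methods are hypothetical names, and HotSpot's actual profile data structures are laid out quite differently.

```java
import java.util.HashMap;
import java.util.Map;

// Toy model of a type profile at one subtype check. Names are hypothetical.
class TypeProfile {
    private final Map<Class<?>, Integer> counts = new HashMap<>();

    // Record the class of an object that reached the profiled check.
    void record(Object value) {
        if (value != null) {
            counts.merge(value.getClass(), 1, Integer::sum);
        }
    }

    // Event counting: how many times a given class was seen.
    int timesSeen(Class<?> type) {
        return counts.getOrDefault(type, 0);
    }

    // The single class seen so far, or null if none or several were seen.
    // A compiler could speculate on that type and deoptimize on any other.
    Class<?> onlyTypeSeen() {
        return counts.size() == 1 ? counts.keySet().iterator().next() : null;
    }

    public static void main(String[] args) {
        TypeProfile profile = new TypeProfile();
        profile.record("a");
        profile.record("b");
        System.out.println(profile.onlyTypeSeen()); // class java.lang.String
        profile.record(42);                         // an Integer shows up
        System.out.println(profile.onlyTypeSeen()); // null
    }
}
```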

From these examples, we can see two types of deoptimization events: Synchronous events are requested by the thread executing compiled code, as we saw in the example of the null check. These events are also called uncommon traps in HotSpot. Asynchronous events are requested by another thread, as we saw in the example of the class hierarchy analysis.

Safepoints and deoptimization

Methods are compiled in such a way that deoptimization is only possible at locations known as safepoints. Indeed, on deoptimization, the virtual machine has to be able to reconstruct the state of execution so the interpreter can resume the thread at the point in the method where compiled execution stopped. At a safepoint, a mapping exists between elements of the interpreter state (locals, locked monitors, and so on) and their location in compiled code, such as a register or a stack slot.

In the case of a synchronous deoptimization (or uncommon trap), a safepoint is inserted at the point of the trap and captures the state needed for the deoptimization. In the case of an asynchronous deoptimization, the thread in compiled code has to reach one of the safepoints that were compiled in the code in order to deoptimize.

As part of the optimization process, compilers often reorder operations so the resulting code runs faster. Reordering operations across a safepoint can't be allowed, because it would cause the state at the safepoint to differ from the state that the interpreter expects at that location. As a consequence, it's not feasible to have a safepoint for every bytecode of a method; doing so would constrain optimizations too much. A compiled method includes only a few safepoints (on return, at calls, and in loops), and a balance is needed: safepoints must be frequent enough that a deoptimization is not delayed, yet sparse enough that the compiler has the freedom to optimize between two of them.

This also affects garbage collection and other virtual machine operations that rely on safepoints. Indeed, garbage collection operations need the locations of live objects on a thread's stack, which are only available at safepoints. In general, in compiled code, safepoints are the only locations where state that the virtual machine can work with is available.

Conclusion

To summarize the discussion in this article, a JIT compiler, at its core, is just another compiler. But because it shares resources with the application, the amount of resources used for compilation must be balanced with the benefits of having compiled code. Running concurrently with the application code also has a benefit: It allows compiled code to be tailored to the observed state of the virtual machine through speculation.

Last updated: August 26, 2022