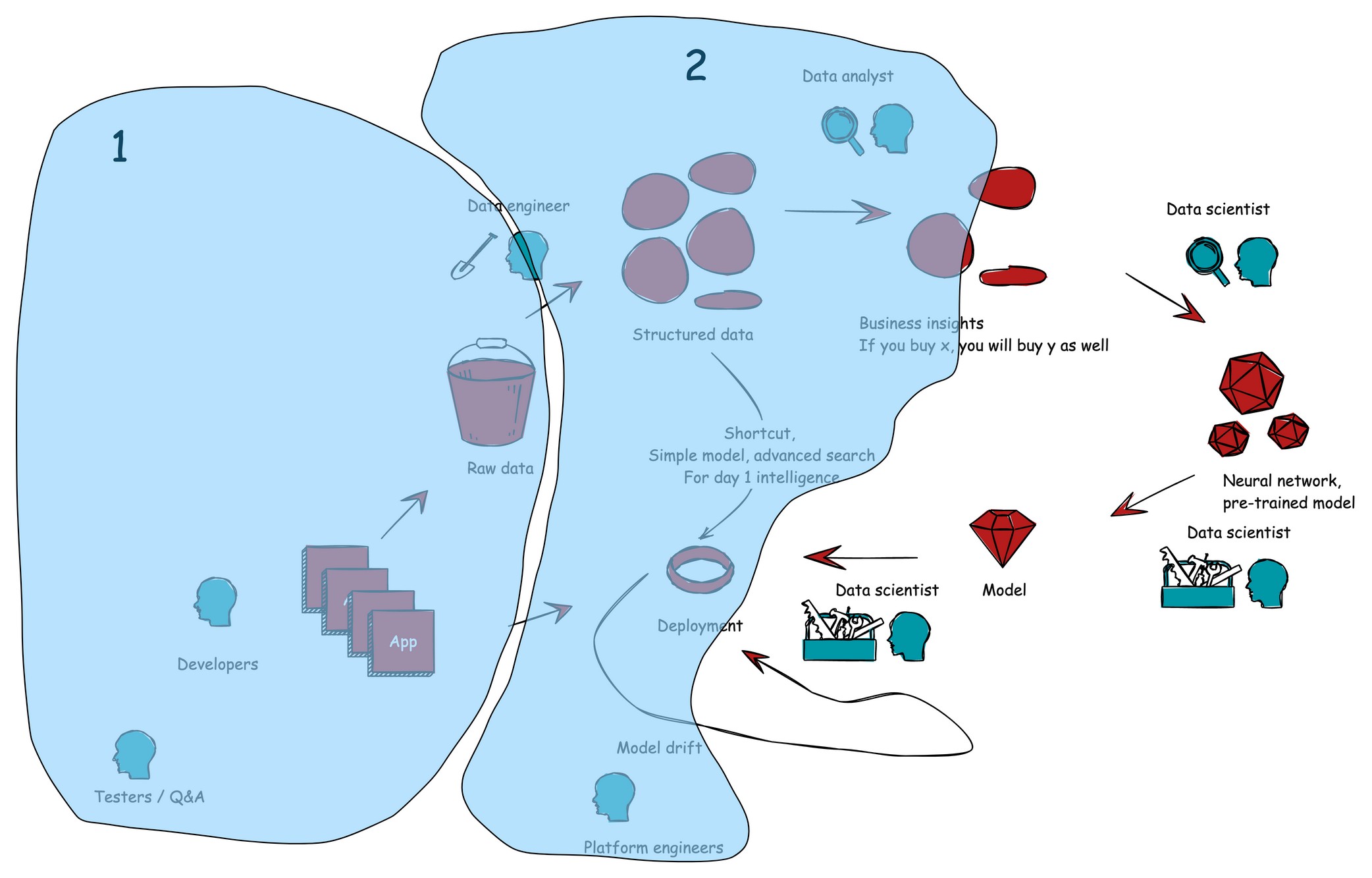

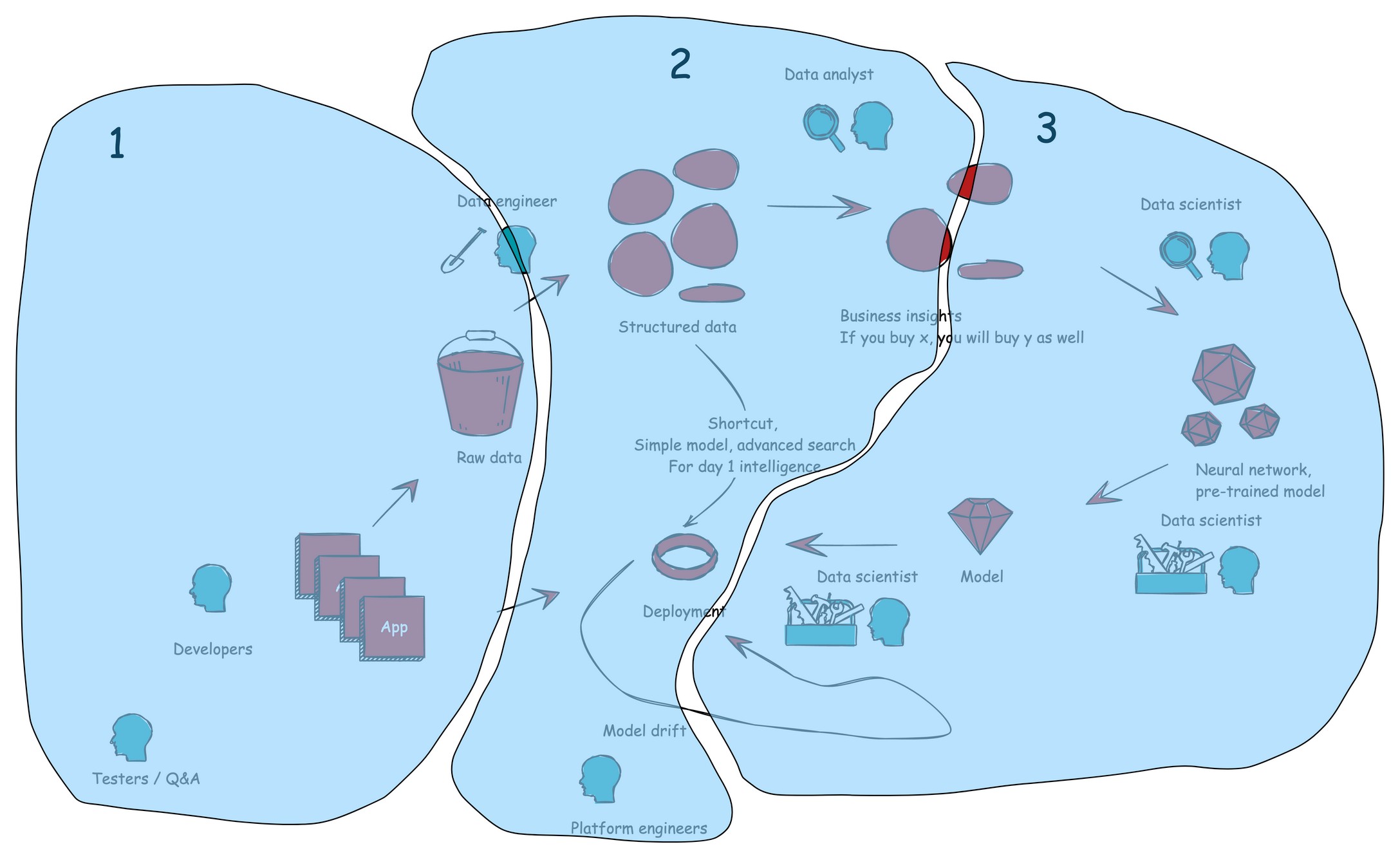

There's no question that artificial intelligence (AI) is the talk of the town these days, overshadowing almost everything else in the world of technology. In this series of articles, we'll explore the road toward AI (Figure 1) and how your organization can smoothly transition toward incorporating AI into its platforms and applications.

Clean architecture isn't the only topic I cover, you know! So, welcome aboard as we steer our focus toward artificial intelligence and machine learning (for now, at least; many of the same principles map onto the AI/ML domain as well).

Don't worry if AI is still a bit of a mystery to you—we'll start right from scratch. Think of this series of posts as an inverted pyramid, with each post building upon the last (Figure 2). We'll kick things off by laying down the basics and gradually move toward more advanced topics. As we progress, the discussion will branch out into various aspects of AI, providing you with a comprehensive understanding of its applications and implications. So, fasten your seatbelts and get ready to explore the exciting world of AI with me!

Is it AI, ML, or DL?

AI is a broad field that includes many different techniques—machine learning is just one of them.

Artificial intelligence

AI is about creating computer systems that can perform tasks that would normally require human intelligence. This could include things like understanding language, recognizing objects in images, or making decisions based on complex data.

For example, think about a virtual assistant like Siri or Alexa. These systems use AI to understand what you're saying, figure out what you want, and then provide you with the appropriate response.

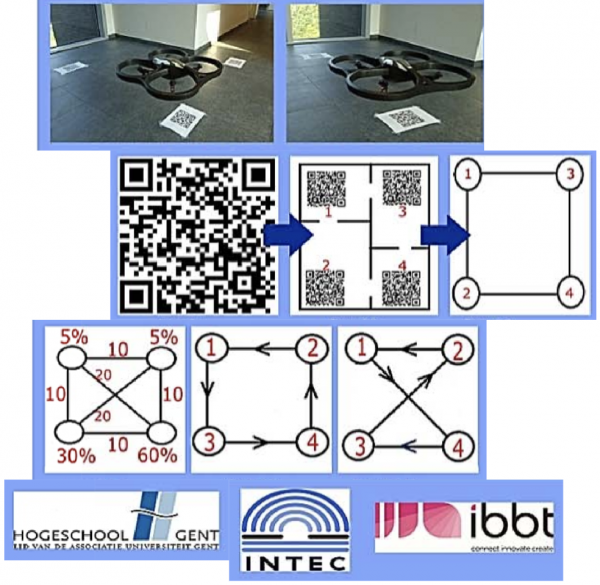

AI is often perceived as a cutting-edge innovation, but that's not entirely accurate. My thesis, completed back in 2011, explored topics that now overlap with machine learning concepts (see Figure 3). I focused on enabling drones to fly autonomously and on designing algorithms that efficiently locate packages within warehouses based on the probabilities of their positions. At that time, however, these capabilities were implemented using traditional methods such as if-else statements and heuristic approaches like traveling salesman problem solvers and ant colony optimization.
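To make the contrast with machine learning concrete, here is a minimal sketch of the kind of hand-written routing logic that predates ML. It uses the greedy nearest-neighbor heuristic for traveling-salesman-style problems, a simpler stand-in and not the ant colony optimization or the actual algorithms from the thesis. The warehouse coordinates are made up for illustration.

```python
import math

def nearest_neighbor_route(start, locations):
    """Greedy nearest-neighbor heuristic for a traveling-salesman-style tour.

    Starting at `start`, always move to the closest unvisited location.
    It is fast and simple, but not guaranteed to find the shortest route.
    """
    route = [start]
    remaining = list(locations)
    current = start
    while remaining:
        # Hand-coded rule, no learning involved: pick the nearest unvisited spot.
        nxt = min(remaining, key=lambda p: math.dist(current, p))
        remaining.remove(nxt)
        route.append(nxt)
        current = nxt
    return route

def route_length(route):
    """Total Euclidean distance along the route."""
    return sum(math.dist(a, b) for a, b in zip(route, route[1:]))

# Hypothetical shelf positions (x, y) in a warehouse, starting from the dock at (0, 0).
route = nearest_neighbor_route((0, 0), [(5, 0), (1, 0), (2, 0)])
```

Every decision here is an explicit rule written by a developer. Nothing is learned from data, and that is exactly the difference machine learning introduces.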

Taking a step further back, around 2002, we encounter the launch of the iRobot Roomba, a significant milestone in autonomous vacuuming technology. Yet the roots of AI stretch back even further. In 1955, John McCarthy and his colleagues coined the term "artificial intelligence" in their proposal for a summer workshop at Dartmouth (held in 1956), laying the groundwork for its popularization over the years. These historical milestones illustrate how AI evolved from its early stages into the sophisticated technologies we see today.

Machine learning

Machine learning is like teaching a computer to learn from examples. Instead of giving the computer a set of rules to follow, you provide it with a bunch of data and let it figure out the patterns on its own. It's a bit like how you might learn to recognize different types of dogs by looking at pictures: you don't memorize a list of rules, but you start to recognize common features like floppy ears or wagging tails (i.e., pattern matching). Figure 4 shows an example of a person using their pattern-matching abilities to categorize objects.

For example, suppose you want to build a spam filter for your email. You could give the computer thousands of emails, some marked as spam and some as not spam. By analyzing the patterns in these emails, the computer can learn to recognize which ones are likely to be spam and which ones aren't. (Notice that this kind of supervised learning depends on labeled training data, and producing those labels is often a manual effort that has to happen before you can start.)
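As a minimal sketch of "learning from labeled examples," the spam filter idea can be expressed with multinomial naive Bayes. This is one classic technique for the task, not the only one, and it is far from a production filter. The tiny training set below is made up. Notice that no spam rules are written by hand. The filter only counts how often words appear in each labeled class.

```python
import math
from collections import Counter, defaultdict

def tokenize(text):
    """Very naive tokenizer: lowercase and split on whitespace."""
    return text.lower().split()

class NaiveBayesSpamFilter:
    """Multinomial naive Bayes classifier with add-one (Laplace) smoothing."""

    def fit(self, emails, labels):
        # Learn from labeled examples: how often each word appears per label.
        self.label_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(emails, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def _log_score(self, text, label):
        # log P(label) + sum of log P(word | label)
        score = math.log(self.label_counts[label] / sum(self.label_counts.values()))
        total_words = sum(self.word_counts[label].values())
        for word in tokenize(text):
            # Add-one smoothing keeps unseen words from zeroing out the probability.
            count = self.word_counts[label][word] + 1
            score += math.log(count / (total_words + len(self.vocab)))
        return score

    def predict(self, text):
        # Pick the label with the highest (log) probability for this text.
        return max(self.label_counts, key=lambda label: self._log_score(text, label))

# A tiny, made-up labeled training set.
emails = ["win money now", "cheap money offer", "meeting at noon", "lunch at noon tomorrow"]
labels = ["spam", "spam", "not spam", "not spam"]
spam_filter = NaiveBayesSpamFilter().fit(emails, labels)
```

With this data, `spam_filter.predict("win cheap money")` returns `"spam"` and `spam_filter.predict("lunch at noon")` returns `"not spam"`.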

Deep learning

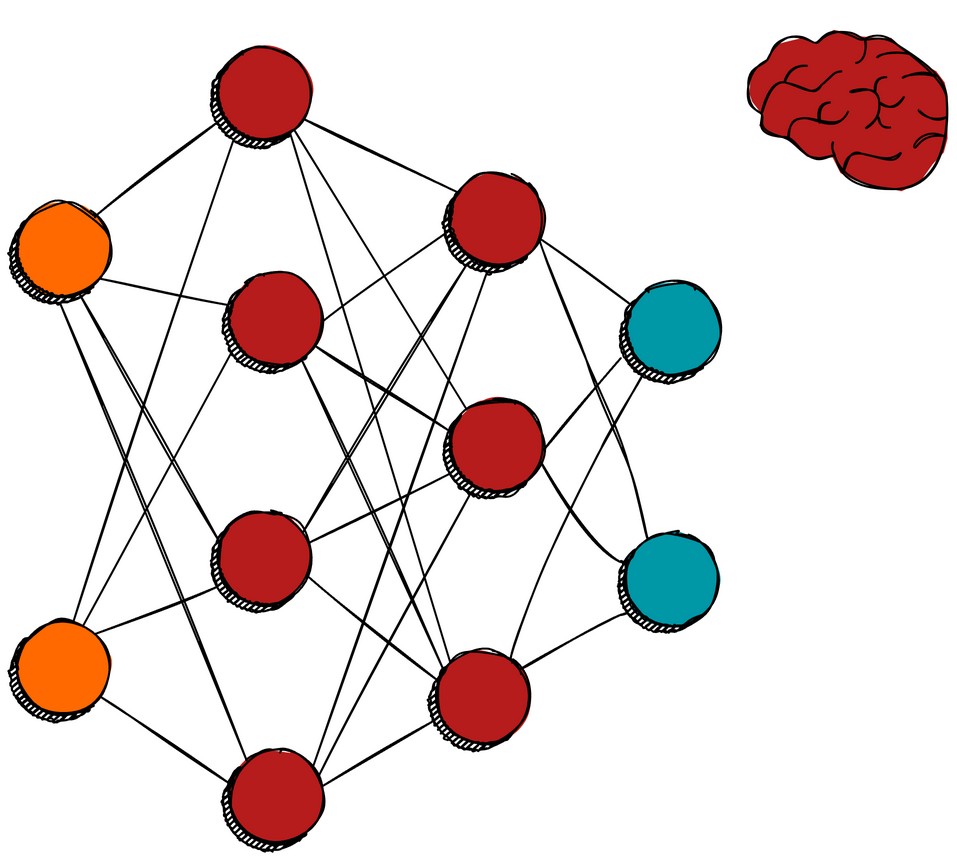

Deep learning (DL) is a subset of machine learning that's inspired by the way the human brain works. It involves building neural networks, which are networks of interconnected nodes that are loosely modeled on the neurons in our brains (see Figure 5 for an artistic depiction of this). These networks are capable of learning increasingly complex representations of data by stacking layers of nodes on top of each other.
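To show what "stacking layers of nodes" means in code, here is a minimal forward pass through a small fully connected network in plain Python. It is a sketch under simplifying assumptions. The weights are random, so the network is untrained and its output carries no meaning yet. Training (for example, with backpropagation) is the step that would adjust those weights to fit labeled data. The layer sizes are arbitrary.

```python
import math
import random

def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: each node takes a weighted sum of all inputs."""
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + b)
        for node_weights, b in zip(weights, biases)
    ]

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def random_layer(n_inputs, n_nodes, rng):
    """Random (untrained) weights and zero biases for one layer."""
    weights = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_nodes)]
    return weights, [0.0] * n_nodes

def forward(x, layers):
    """Feed the input through each stacked layer in turn."""
    *hidden, output = layers
    for weights, biases in hidden:
        x = dense_layer(x, weights, biases, relu)  # hidden layers build intermediate features
    weights, biases = output
    return dense_layer(x, weights, biases, sigmoid)  # output squashed into (0, 1)

rng = random.Random(0)
# 4 inputs -> 8 hidden nodes -> 8 hidden nodes -> 1 output
layers = [random_layer(4, 8, rng), random_layer(8, 8, rng), random_layer(8, 1, rng)]
prediction = forward([0.2, 0.7, 0.1, 0.9], layers)
```

Adding another `random_layer(...)` entry to `layers` makes the network "deeper." That is all the word means in this context.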

A classic example of deep learning in action is image recognition. By training a deep learning model on a large dataset of labeled images, the model can learn to recognize patterns like edges, shapes, and textures at different levels of abstraction. This allows it to identify objects in new images it hasn't seen before, often with high accuracy. Figure 6 illustrates how, in this process, a human must first label the images in the dataset so that the deep learning model can be trained on the right information.
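Early layers of image models often end up responding to simple patterns such as edges. The sketch below applies a vertical-edge kernel to a tiny made-up grayscale image using a "valid" 2D cross-correlation, which is the operation deep learning libraries usually call convolution. The kernel here is set by hand for illustration. In a real convolutional network, these values would be learned from the labeled images during training.

```python
def convolve2d(image, kernel):
    """'Valid' 2D cross-correlation: slide the kernel over the image, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]

# A tiny grayscale "image": dark (0) on the left, bright (9) on the right.
image = [[0, 0, 0, 9, 9, 9] for _ in range(4)]

# Hand-set vertical-edge kernel (a trained model would learn its own values).
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, vertical_edge)
```

The resulting feature map is `[[0, 27, 27, 0], [0, 27, 27, 0]]`. It is zero over the flat regions and large exactly where dark meets bright.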

So, in summary, AI is about creating systems that can perform tasks that would normally require human intelligence, machine learning is an approach within AI that teaches computers to learn from data, and deep learning is a specific technique within machine learning that's inspired by the structure of the human brain.

Roadies and rock stars

In the context of discussing AI, we can draw a comparison to the dynamics of a concert. Imagine purchasing tickets to see your favorite band perform. More often than not, the focus lies in witnessing the rock stars take center stage, rather than appreciating the meticulous efforts of the roadies working behind the scenes. These unsung heroes are responsible for setting up the stage, orchestrating the lights and sound, and managing the PR and marketing efforts that make the concert possible. Figure 7 shows a few Red Hat "rock stars."

The same analogy applies to the landscape of AI/ML. The "rock stars" in this scenario are the models themselves, be it Gemini, GPT, Llama, Mistral, or others. These models capture our attention with their remarkable capabilities, such as generating text or recognizing images. However, their success is only made possible by the dedicated individuals working tirelessly behind the scenes.

These AI roadies take various forms: software developers, data scientists (sometimes regarded as rock stars themselves), platform engineers, product owners, and even the infrastructure itself. Much like the stage crew at a concert, they ensure the smooth operation of AI endeavors, from data collection and preprocessing to model training and deployment. The infrastructure in particular supports these AI models quietly, and its significance may go unnoticed until a rare disruption, like a 502 error, brings it to our attention.

In essence, just as a rock concert thrives on collaboration between performers and the backstage crew, the success of AI and ML ventures relies on the collective efforts of both the models and the dedicated individuals who bring them to fruition.

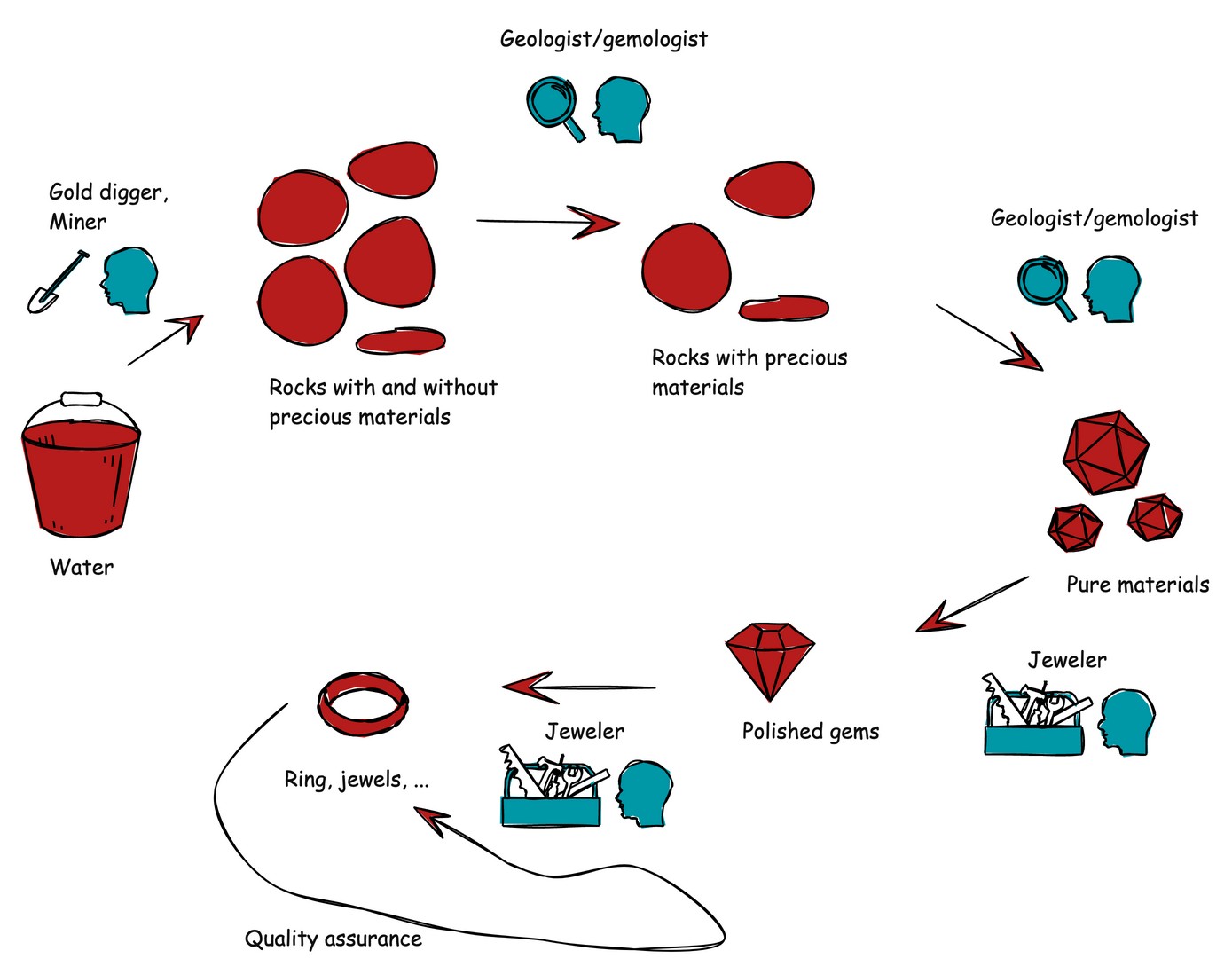

Comparing AI/ML and gem mining

Before diving into the details of AI and ML processes, let's take a moment to draw parallels with the process of gem and gold mining. The gem mining process looks like this:

- Miners venture into the waters to collect nuggets, hoping to find valuable gems or crystals among the regular rocks.

- These nuggets are examined by gemologists or geologists who help identify the ones containing precious materials, filtering out the worthless rocks.

- Next, the valuable materials are extracted from the nuggets, leaving behind pure substances ready for further processing.

- Craftsmen such as jewelers and goldsmiths then transform these pure materials into sellable items like jewels or gold bars.

- Finally, the finished products are sold, but the process doesn't end there. Just as with any product, there's a quality assurance phase in which the craftsmen address and fix any defects.

Now, you might wonder, what does this have to do with AI? In the next section, we'll uncover the intriguing connections between these processes.

AI/ML process

Although the title of this section refers to AI/ML processes, the focus of this section will primarily be on the ML process. We'll delve into other AI processes in the next section. For now, let's keep things high level. Don't worry—we'll dive deeper into each step in future articles.

To kick off, let's draw parallels with the gem mining process described earlier. The full process is depicted in Figure 8.

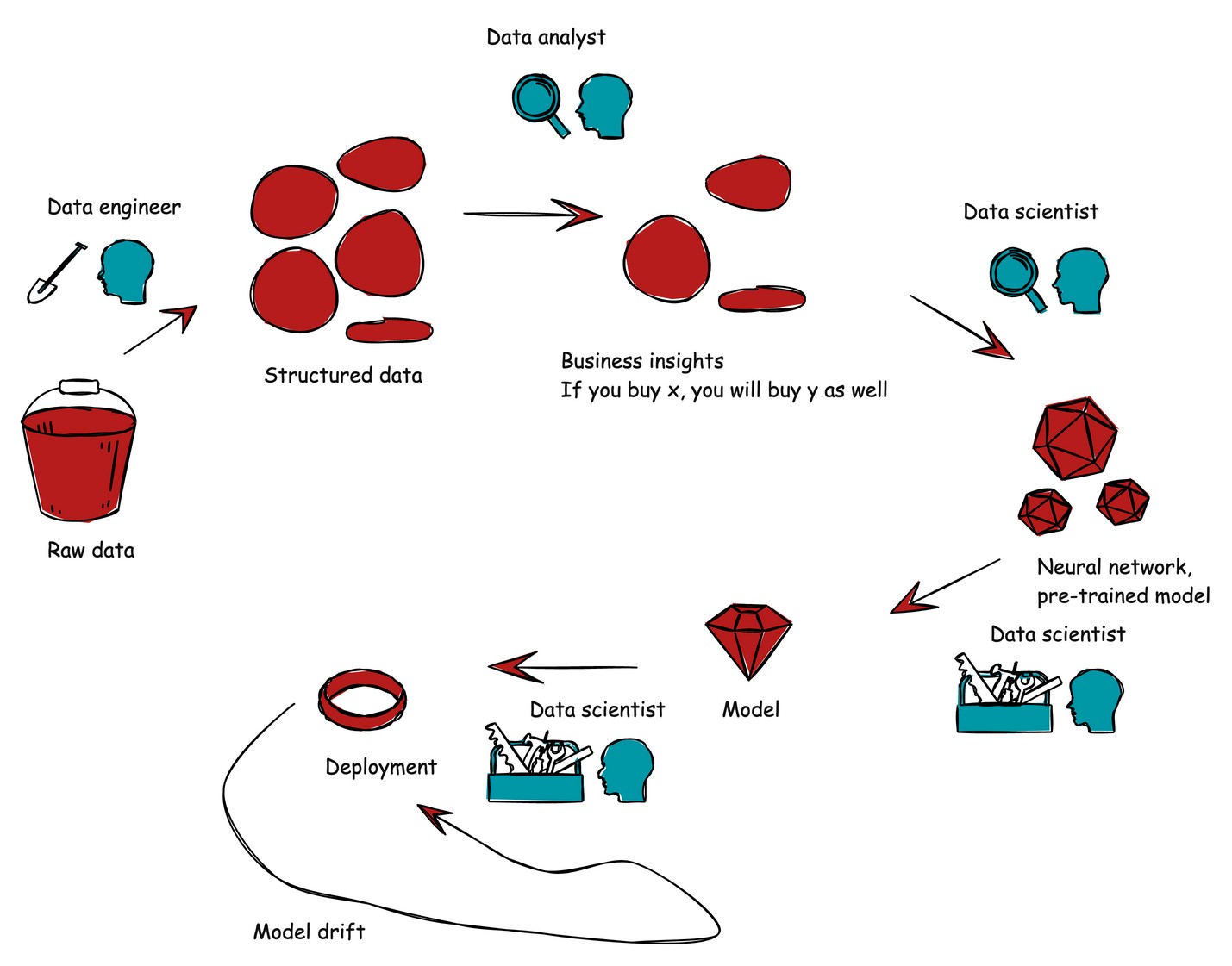

Surprisingly, ML processes share striking similarities, as shown in Figure 9.

- Collecting nuggets from the water: Just as miners gather raw nuggets from the water, collecting data from APIs and applications initiates the ML process. However, not all data is relevant, requiring data engineers to filter and manipulate it.

- Filtering out worthless rocks: Similar to separating valuable nuggets from worthless rocks, data analysts and/or data engineers sift through data to identify which pieces contribute to valuable insights and predictions for businesses.

- Extraction of valuable materials: Extracting valuable materials from nuggets aligns with isolating valuable data in statistical databases. The importance of this step will be elaborated on in subsequent posts.

- Crafting sellable items: Just as craftsmen turn pure materials into finished products, data scientists design neural networks or start from (pre-trained) ML models, and then train or fine-tune these models on the prepared training data.

- Selling jewels/gold bars: Deploying the ML model for consumption by applications mirrors the selling of jewels or gold bars. Additionally, quality assurance involves testing for, detecting, and rectifying model drift, which we'll explore further in later posts.
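The stages in the list above can be sketched as a chain of small functions. This is a toy illustration under stated assumptions. The data sources, the field names `temperature` and `sales`, and the one-feature least-squares model are hypothetical stand-ins for real APIs, real datasets, and a real ML model. Each function's docstring names the mining step it mirrors.

```python
def collect(sources):
    """Collecting nuggets: gather raw records from (hypothetical) APIs and applications."""
    return [record for source in sources for record in source]

def filter_relevant(records, required_fields):
    """Filtering out worthless rocks: keep only records that have every field we need."""
    return [r for r in records if all(r.get(f) is not None for f in required_fields)]

def extract(records, feature, target):
    """Extracting valuable material: isolate the columns the model will learn from."""
    return [r[feature] for r in records], [r[target] for r in records]

def train_linear(xs, ys):
    """Crafting the product: fit y = slope * x + intercept with ordinary least squares."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept

def still_healthy(model, xs, ys, tolerance):
    """Quality assurance after deployment: is the mean absolute error on fresh data acceptable?"""
    mae = sum(abs(model(x) - y) for x, y in zip(xs, ys)) / len(xs)
    return mae <= tolerance

sources = [
    [{"temperature": 10, "sales": 20}, {"temperature": 20, "sales": 40}],    # e.g., a shop API
    [{"temperature": 30, "sales": 60}, {"temperature": None, "sales": 15}],  # e.g., a sensor app
]
records = filter_relevant(collect(sources), ["temperature", "sales"])
xs, ys = extract(records, "temperature", "sales")
model = train_linear(xs, ys)  # "selling": this callable is what applications would consume
```

Here `model(25)` returns `50.0`. If fresh data later stops matching the model, `still_healthy` returns `False`, which is the cue to revisit the earlier stages.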

By drawing parallels with gem mining, we can unravel the complexity of the ML process, laying the groundwork for a deeper understanding in the posts to come.

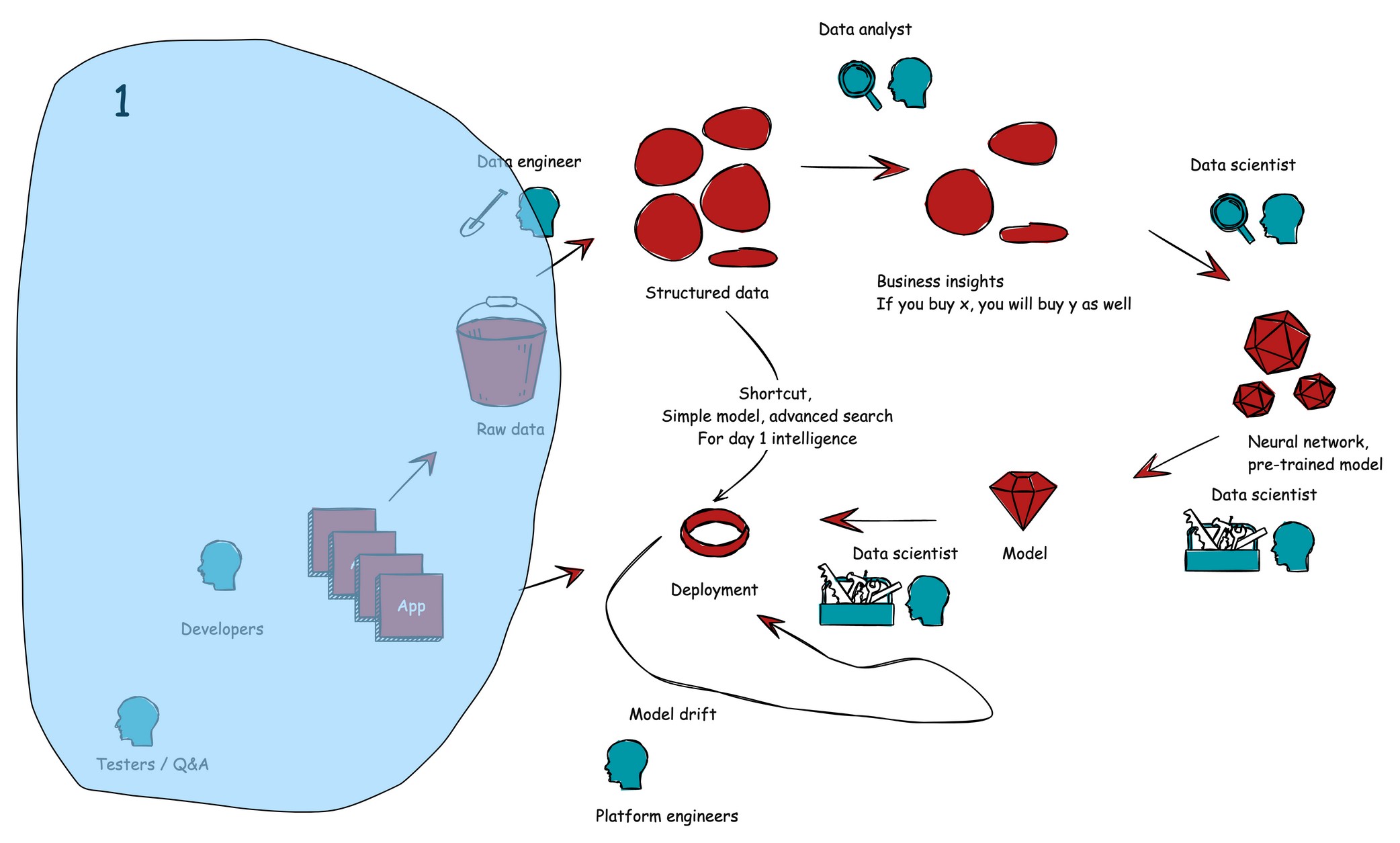

Road toward AI

As noted earlier, future articles will focus on how you can go from zero to fully embedded AI/ML, highlighting every step along the way. At a high level, the road toward AI looks like this:

- Get your application platform mature.

- Implement structured data and day-one intelligence.

- Introduce machine learning.

Step 1: Get your application platform mature

Data is the cornerstone of AI/ML initiatives, and the first step toward success lies in acquiring, refining, and managing your data effectively. This process begins with establishing a robust application platform, such as an event-driven architecture or microservices framework.

A solid application platform is crucial as it facilitates agile development and deployments. For instance, if a data engineer or analyst requires the integration of a new data field or API (e.g., a weather API), the platform should enable swift implementation within hours or days, not months.

Furthermore, the platform should support the replication of data in various formats and across different databases to cater to diverse analytical needs. We'll delve deeper into this aspect in subsequent posts.
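To illustrate why an event-driven platform helps here, the following minimal sketch publishes each event once and lets several consumers store it in different shapes. The in-memory `EventBus` is a stand-in for a real broker such as Kafka, and the topic, fields, and two "stores" are hypothetical. The second event carries a new `weather` field, as if a weather API had just been integrated, and no consumer code has to change.

```python
import json
from collections import defaultdict

class EventBus:
    """Tiny in-memory stand-in for an event broker (a real platform might use Kafka)."""

    def __init__(self):
        self.subscribers = defaultdict(list)

    def subscribe(self, topic, handler):
        self.subscribers[topic].append(handler)

    def publish(self, topic, event):
        # Every subscriber receives its own copy of the same event.
        for handler in self.subscribers[topic]:
            handler(event)

# Two hypothetical sinks that replicate the same events in different formats.
row_store = []                    # e.g., an operational DB: one JSON document per event
column_store = defaultdict(dict)  # e.g., an analytics store: field -> {order_id: value}

def to_row_store(event):
    row_store.append(json.dumps(event, sort_keys=True))

def to_column_store(event):
    for field, value in event.items():
        column_store[field][event["order_id"]] = value

bus = EventBus()
bus.subscribe("orders", to_row_store)
bus.subscribe("orders", to_column_store)

bus.publish("orders", {"order_id": 1, "amount": 25.0})
# A new field shows up later; existing consumers handle it without any code change.
bus.publish("orders", {"order_id": 2, "amount": 40.0, "weather": "rain"})
```

Because producers and consumers only share the event, adding another sink (another database, another format) means adding a subscriber rather than touching the producing application.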

By laying the groundwork with a solid application platform, organizations can ensure agility and responsiveness in managing and leveraging their data, setting the stage for successful AI/ML endeavors. See Figure 10 for an illustration of this process.

Step 2: Implement structured data and day-one intelligence

Once your application platform is robust and agile, the next logical step is to implement a data platform. This platform serves as a structured data source for data engineers, data scientists, and business analysts. Beyond simply enabling structured data storage, it's crucial to consider implementing day-one intelligence capabilities. This intelligence acts as a shortcut toward developing ML models.
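As an illustration of what day-one intelligence might look like, here is a sketch of a hand-coded "frequently bought together" rule that works on a handful of orders, with no model training involved. The products and orders are made up. The co-purchase counts form a small graph, the kind of relationship a graph database can store and query, but this sketch keeps everything in plain Python.

```python
from collections import Counter, defaultdict
from itertools import combinations

def build_co_purchase_graph(orders):
    """Edge weight = number of orders in which two products appear together."""
    graph = defaultdict(Counter)
    for items in orders:
        for a, b in combinations(sorted(set(items)), 2):
            graph[a][b] += 1
            graph[b][a] += 1
    return graph

def recommend(graph, product, top_n=2):
    """Hand-coded rule: suggest the products most often bought with `product`."""
    return [p for p, _ in graph[product].most_common(top_n)]

# A deliberately small, made-up dataset: day-one intelligence doesn't need terabytes.
orders = [
    ["tent", "sleeping bag", "lantern"],
    ["tent", "sleeping bag"],
    ["tent", "lantern"],
    ["tent", "sleeping bag", "stove"],
]
graph = build_co_purchase_graph(orders)
```

Here `recommend(graph, "tent")` returns `["sleeping bag", "lantern"]`. It is a simple rule, but it delivers value immediately and can later run alongside an ML model.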

But what's the value in this approach? Well, traditionally, implementing machine learning requires vast amounts of data—terabytes or even petabytes—which may not be available from day one. However, leveraging tools like Spark and graph databases allows you to code basic intelligence into your platform using a limited dataset. This brings two significant benefits. Firstly, it provides intelligence from the very beginning, enhancing the platform's functionality and usefulness right from the start. Secondly, it helps validate the completeness of your dataset early on. Identifying missing data fields at an early stage allows you to address them promptly, preventing potential setbacks down the line.
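The second benefit, validating dataset completeness early, can start as something as simple as the sketch below. It reports which share of records is missing each expected field. The field names and records are hypothetical. At scale, the same check could run in a tool like Spark, but this sketch uses plain Python.

```python
def completeness_report(records, expected_fields):
    """Share of records missing each expected field (absent or None)."""
    total = len(records)
    report = {}
    for field in expected_fields:
        missing = sum(1 for r in records if r.get(field) is None)
        report[field] = missing / total if total else 0.0
    return report

# Hypothetical customer records from an early, limited dataset.
records = [
    {"customer_id": 1, "postcode": "1011", "birth_year": 1985},
    {"customer_id": 2, "postcode": None, "birth_year": 1990},
    {"customer_id": 3, "birth_year": None},
    {"customer_id": 4, "postcode": "3511", "birth_year": 1978},
]
report = completeness_report(records, ["customer_id", "postcode", "birth_year", "loyalty_tier"])
gaps = {field: share for field, share in report.items() if share > 0}
```

In this example, `gaps` flags `postcode` (50% missing), `birth_year` (25%), and `loyalty_tier` (never collected at all). The last gap is exactly the kind you want to discover on day one rather than months later.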

This process introduces an AI flow into your platform. Even when incorporating ML in the future, this step remains relevant. While ML models operate as black boxes trained solely on patterns within the training data, the intelligence coded into the platform adds a layer of human logic to the predictions. This complementary approach (e.g., enabled with Kafka) ensures a more comprehensive and nuanced understanding of your data, ultimately enhancing the effectiveness of your AI and ML initiatives. Figure 11 depicts this step of the process.
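To make the idea of adding human logic on top of a black-box prediction concrete, here is a minimal sketch that revisits the spam example. The `spam_probability` input is assumed to come from some ML model, and the allow list, block list, and 0.9 threshold are hypothetical. In a real platform this logic might sit in a stream processor consuming Kafka events, but here it is just a function.

```python
def route_email(spam_probability, sender, allow_list, block_list, threshold=0.9):
    """Blend an ML model's output with explicit, human-written rules."""
    if sender in block_list:   # rule: known bad senders always go to spam
        return "spam"
    if sender in allow_list:   # rule: trusted senders always reach the inbox
        return "inbox"
    # No rule applies, so defer to the model's prediction.
    return "spam" if spam_probability >= threshold else "inbox"
```

The design choice here is that the explicit rules take precedence and the model only decides the cases the rules don't cover. You could just as well reverse that order, depending on which source you trust more.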

Step 3: Introduce machine learning

Now that structured data is in place, your data analyst can begin exploring correlations, and/or data scientists can start implementing business requirements using ML models. At this stage, neural networks can be developed from scratch, or pre-trained models can serve as a baseline to speed up the design and training phases. However, it's essential to exercise caution when using pre-trained models, ensuring they align with data governance principles—more on this in future articles.

Once the model is designed and trained, it can be deployed, potentially alongside any shortcuts developed earlier, and seamlessly integrated into your applications or platform. However, the journey doesn't end here. Continuous testing and validation of the model's performance on new data are imperative. Detection of model drift, where the model's performance degrades over time due to changes in the data distribution, signals the need for retraining with the updated data.
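One common way to detect a shift in the data distribution is the population stability index (PSI), which compares how a feature's values are spread across bins at training time versus now. The sketch below is a plain-Python version under simplifying assumptions. The bin edges and samples are made up. The 0.25 cut-off is a widely used rule of thumb, not a standard, so tune it for your own data.

```python
import math

def population_stability_index(expected, actual, bins):
    """PSI = sum over bins of (actual_share - expected_share) * ln(actual_share / expected_share).

    `bins` are ascending bin edges; values outside [bins[0], bins[-1]) are ignored.
    """
    def shares(values):
        counts = [0] * (len(bins) - 1)
        for v in values:
            for i in range(len(bins) - 1):
                if bins[i] <= v < bins[i + 1]:
                    counts[i] += 1
                    break
        total = sum(counts)
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / total, 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

bins = [0, 3, 6, 10]
training_sample = [1, 2, 3, 4, 5, 6, 7, 8]
psi_same = population_stability_index(training_sample, [1, 2, 3, 4, 5, 6, 7, 8], bins)
psi_shift = population_stability_index(training_sample, [6, 7, 7, 8, 8, 8, 9, 9], bins)
needs_retraining = psi_shift > 0.25  # rule-of-thumb threshold (assumption)
```

Identical distributions give a PSI of `0.0`, while the shifted sample scores far above the threshold and would trigger retraining.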

Beyond the model itself, adopting an MLOps approach means putting CI/CD-like pipelines in place to streamline model development and deployment. We'll explore this further in forthcoming blog posts, diving deeper into the complexities of MLOps and its significance in maintaining model performance and reliability over time. Figure 12 illustrates a complete view of this entire process.
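One step in such a pipeline often mirrors a CI/CD test stage: a gate that promotes a retrained model only if it beats the model currently in production on held-out data. The sketch below shows that idea in plain Python. The `ModelVersion` type, the mean-absolute-error metric, and the toy models are hypothetical choices, not part of any specific MLOps tool.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class ModelVersion:
    name: str
    predict: Callable[[float], float]

def mean_absolute_error(model, xs, ys):
    return sum(abs(model.predict(x) - y) for x, y in zip(xs, ys)) / len(xs)

def promotion_gate(candidate, production, xs_holdout, ys_holdout, min_improvement=0.0):
    """Promote the candidate only if it beats production on held-out data."""
    cand_err = mean_absolute_error(candidate, xs_holdout, ys_holdout)
    prod_err = mean_absolute_error(production, xs_holdout, ys_holdout)
    if cand_err + min_improvement < prod_err:
        return candidate, f"promoted {candidate.name} (MAE {cand_err:.2f} < {prod_err:.2f})"
    return production, f"kept {production.name} (candidate MAE {cand_err:.2f})"

production = ModelVersion("v1", lambda x: 2 * x)
candidate = ModelVersion("v2", lambda x: 3 * x)   # e.g., retrained after drift was detected
chosen, message = promotion_gate(candidate, production, [1, 2, 3], [3, 6, 9])
```

Here the retrained `v2` matches the held-out data exactly, so it is promoted. If it had been worse, the gate would keep `v1`, just as a failing test blocks a regular deployment.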

Conclusion

Now that we've outlined the basics of the AI/ML process, it's time to delve deeper and clear the road toward AI. Stay tuned for updates as we progress further along this journey.