This article explains how to build smart, cloud-native Java applications using Quarkus and LangChain4j. We'll explore how to integrate AI capabilities into your Quarkus projects, focusing on chatbots, retrieval-augmented generation (RAG), and real-time interactions. Building on the Quarkus platform's instant feedback, observability, and scalability, we'll create an intelligent assistant, a knowledge-driven bot, and a production-grade AI service.

About the application: WealthWise

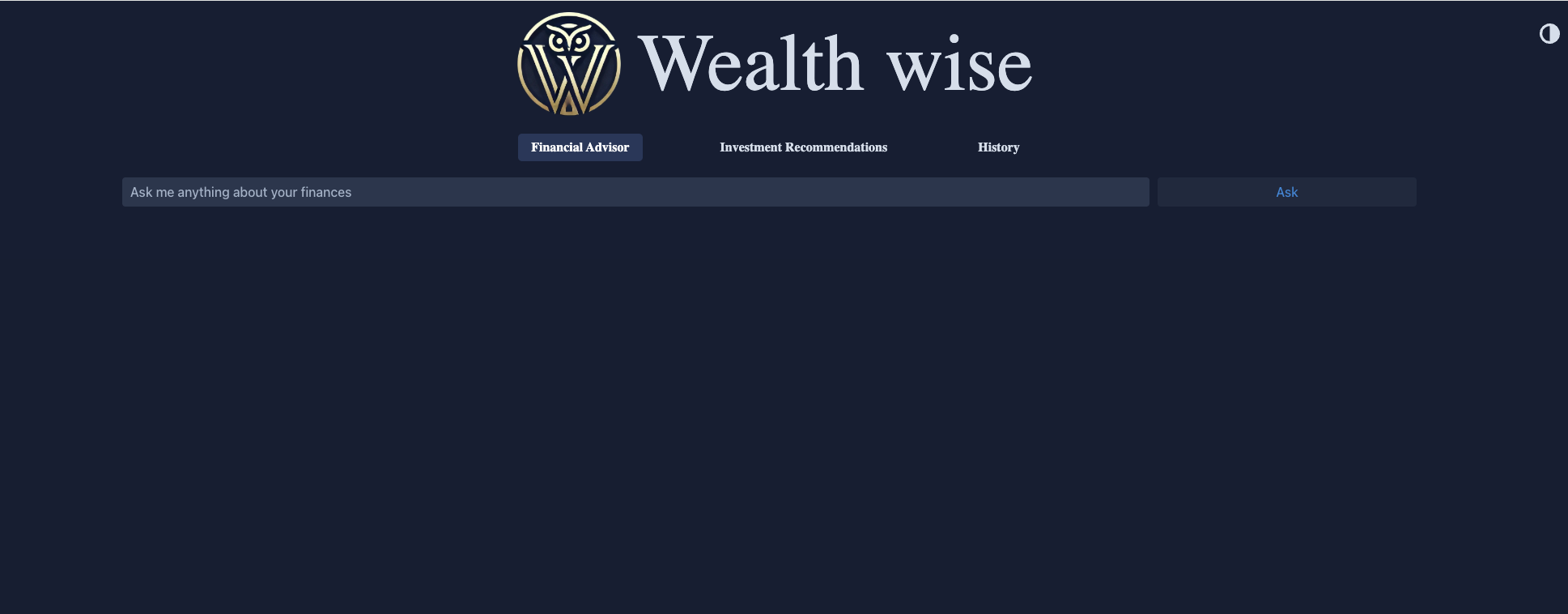

Throughout this series, we'll build on a project called WealthWise. WealthWise is a cloud-native Java application infused with AI capabilities to deliver real-time financial guidance to users.

WealthWise's core services include:

- Chatbot financial advisor: An AI-powered assistant that answers financial questions, explains investment options, and supports users in tax planning.

- RAG-powered insights: Uses Retrieval-Augmented Generation (RAG) to fetch and summarize information from a curated knowledge base, including market trends, tax regulations, and investment strategies.

- Investment recommendations: Personalized suggestions for stock, bond, and ETF allocations based on user risk tolerance.

Getting started with Quarkus and AI

To get a Quarkus project up and running with AI capabilities, we'll walk through setting up the WealthWise project.

Let's break it down into key steps:

Clone the WealthWise repository:

```shell
git clone https://github.com/phillip-kruger/wealth-wise.git
cd wealth-wise
```

This project is already structured as a Quarkus application and ready to run in development mode.

Run Quarkus in Dev Mode:

```shell
./mvnw quarkus:dev
```

With Quarkus Live Coding, your code is hot-reloaded during development. You can modify chatbot prompts, system messages, or back-end logic and see the changes reflected instantly, without manually restarting the application. This is especially useful when fine-tuning conversational flows, adjusting response formats, or iterating on RAG configurations to improve the user experience.

Let Dev Services handle the database. Storing chat history and user interactions is easy in Quarkus, thanks to Dev Services and Panache.

PostgreSQL made effortless

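WealthWise uses PostgreSQL as its database. As long as no datasource URL is configured, Dev Services starts a PostgreSQL container automatically in dev and test mode (this requires a container runtime such as Docker or Podman). To get started, simply add the JDBC driver dependency to your pom.xml file: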

```xml
<dependency>
    <groupId>io.quarkus</groupId>
    <artifactId>quarkus-jdbc-postgresql</artifactId>
</dependency>
```

Quarkus also includes a powerful Dev UI, a web-based interface aimed at boosting developer productivity during the development phase. It provides real-time visibility into your application's configuration and runtime environment.

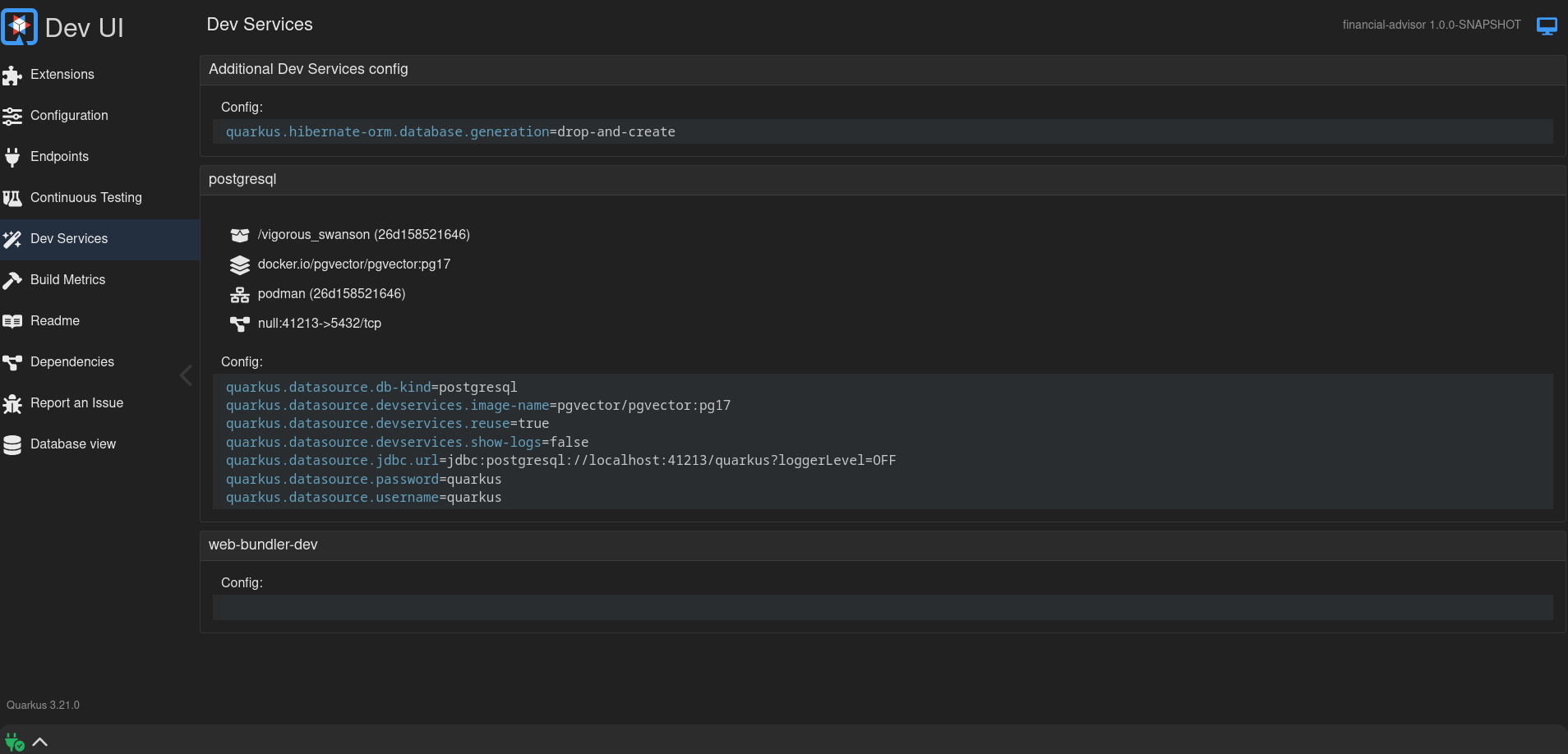

To access the Dev UI, navigate to http://localhost:8080/q/dev-ui in your browser. From there, you can view connection details, ports, credentials, and the container image currently in use, as shown in Figure 1.

Because Dev Services provisions this database for you, you can persist chat history locally without setting up any additional infrastructure.

Persisting chat history with Panache

WealthWise persists data with Hibernate ORM with Panache (the quarkus-hibernate-orm-panache extension), a library that simplifies entity and repository logic with active record-style APIs.

Here's the entity used to store user questions in WealthWise:

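```java
import io.quarkus.hibernate.orm.panache.PanacheEntity;
import io.quarkus.panache.common.Sort;
import jakarta.persistence.Entity;
import java.time.LocalDateTime;
import java.util.List;

@Entity
public class History extends PanacheEntity {

    public LocalDateTime timestamp;
    public String question;

    // No-args constructor required by JPA
    public History() {}

    public History(String question) {
        this.timestamp = LocalDateTime.now();
        this.question = question;
    }

    public History(LocalDateTime timestamp, String question) {
        this.timestamp = timestamp;
        this.question = question;
    }

    // Returns all stored questions, newest first
    public static List<History> findAllByOrderByTimestampDesc() {
        return findAll(Sort.by("timestamp").descending()).list();
    }
}
```

Key highlights: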

- The entity extends PanacheEntity, which automatically provides an ID field and helper methods such as persist(), findAll(), and deleteAll().

- No boilerplate repository is needed: query logic such as findAllByOrderByTimestampDesc() is implemented directly inside the entity.

- Panache saves time and reduces complexity while remaining fully type-safe and JPA-compliant.
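If you want to unit-test code that consumes this query without a database, the newest-first ordering can be reproduced on an in-memory list. The following is a minimal plain-Java sketch; HistoryEntry and HistoryOrderingSketch are hypothetical names used only for illustration. In WealthWise itself, Panache pushes the sort down to PostgreSQL as part of the query rather than sorting in memory.

```java
import java.time.LocalDateTime;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Hypothetical in-memory stand-in for the History entity (no JPA, no database).
record HistoryEntry(LocalDateTime timestamp, String question) {}

class HistoryOrderingSketch {

    // Same ordering as Sort.by("timestamp").descending(): newest question first.
    static List<HistoryEntry> newestFirst(List<HistoryEntry> entries) {
        List<HistoryEntry> sorted = new ArrayList<>(entries); // copy so the input is not mutated
        sorted.sort(Comparator.comparing(HistoryEntry::timestamp).reversed());
        return sorted;
    }
}
```

newestFirst returns a new list with the most recent question first and leaves its input untouched, which mirrors the shape of what findAllByOrderByTimestampDesc() returns from the database.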

Explore your data in the Dev UI

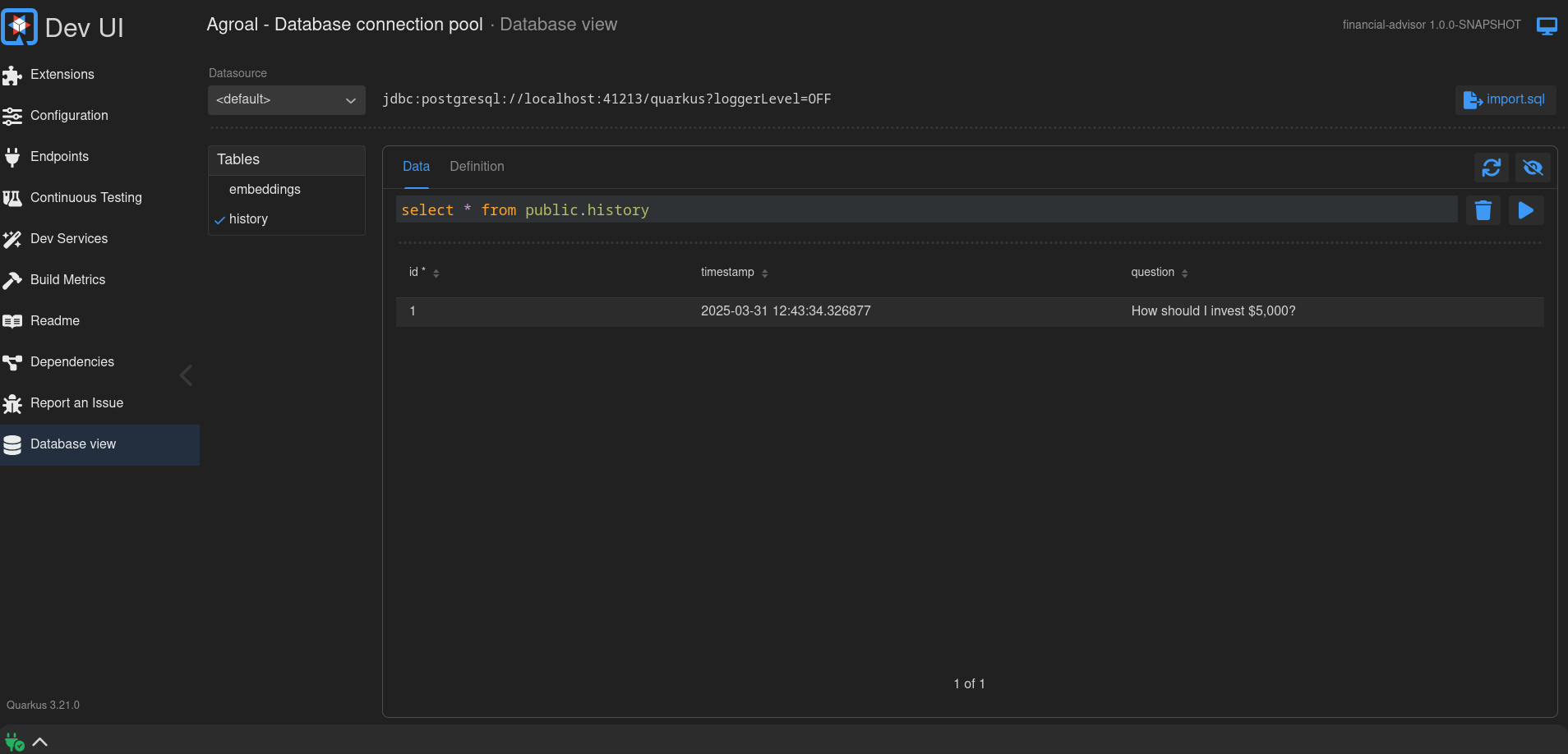

Want to verify that your question was stored correctly? Just head to the Database view tab in Dev UI, powered by the Agroal extension (Figure 2).

You can inspect your tables (history, embeddings, etc.), run SQL queries, and check your data, all without leaving the browser or writing extra tools.

Quarkus Dev UI brings real observability to your local dev environment. It's like a built-in dashboard for your services, configuration, and runtime state.

Add AI capabilities with LangChain4j

WealthWise uses the Quarkiverse LangChain4j extension to bring large language model (LLM) capabilities directly into your Java application. With just a few dependencies and simple annotations, you can integrate features like:

- Chatbots for financial advice

- Retrieval-augmented generation (RAG) for domain-specific document queries

Let's look at how that comes together in the WealthWise project.

Step 1: Enable AI with LangChain4j

Add dependencies for core LangChain4j and OpenAI integration in your pom.xml file:

```xml
<dependency>
    <groupId>io.quarkiverse.langchain4j</groupId>
    <artifactId>quarkus-langchain4j</artifactId>
</dependency>
<dependency>
    <groupId>io.quarkiverse.langchain4j</groupId>
    <artifactId>quarkus-langchain4j-openai</artifactId>
</dependency>
```

You can easily switch providers (e.g., Hugging Face, Ollama) by swapping out the corresponding extension and configuration. The interface remains the same.

Step 2: Configure the AI model

Add your API key and model configuration to application.properties:

```properties
quarkus.langchain4j.openai.api-key=${OPENAI_API_KEY}
quarkus.langchain4j.openai.chat-model.model-name=gpt-3.5-turbo
```

And set your OpenAI API key as an environment variable:

```shell
export OPENAI_API_KEY=sk-...
```

Step 3: Define the AI service

The Quarkus LangChain4j extension lets you define an interface that represents your AI assistant. Annotate it with @RegisterAiService and declare its prompts with annotations such as @SystemMessage and @UserMessage.

Here's FinancialAdviseService.java:

```java
import dev.langchain4j.service.SystemMessage;
import dev.langchain4j.service.UserMessage;
import io.quarkiverse.langchain4j.RegisterAiService;

@RegisterAiService
public interface FinancialAdviseService {

    @SystemMessage(SYSTEM_MESSAGE)
    @UserMessage(FINANCIAL_ADVISOR_USER_MESSAGE)
    String chat(String question);

    // Role, tone, and output format applied to every conversation
    public static final String SYSTEM_MESSAGE = """
            You are a professional financial advisor specializing in Australian financial markets.
            You are given a question and you need to answer it based on your knowledge and experience.
            Your reply should be in a conversational tone and should be easy to understand. The format of your reply should be in valid Markdown that can be rendered in a web page.
            """;

    // {question} is filled in from the chat(...) parameter of the same name
    public static final String FINANCIAL_ADVISOR_USER_MESSAGE = """
            Question: {question}
            """;
}
```

The system message sets the assistant's role and tone and the shape of its replies. By adjusting it, you can control everything from domain knowledge to voice (e.g., friendly or formal) and output format (e.g., plain text, JSON, or Markdown).

About prompt placeholders

The {question} in the @UserMessage is a placeholder that is replaced at runtime with the value of the method parameter of the same name. For example, if you call:

```java
financialAdviseService.chat("What tax deductions can I claim this year?");
```

The final prompt sent to the model will look like the following:

```text
System:
You are a professional financial advisor...

User:
Question: What tax deductions can I claim this year?
```

This simple structure helps ensure that every interaction with the model follows a well-defined pattern.
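To make the placeholder mechanics concrete, here is a minimal sketch of {name}-style substitution in plain Java. It is a deliberate simplification: the Quarkus LangChain4j extension renders prompt templates with the Qute templating engine, which supports much more than variable replacement, and PromptTemplateSketch and render are hypothetical names.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class PromptTemplateSketch {

    // Matches {name}, where name consists of letters, digits, or underscores.
    private static final Pattern PLACEHOLDER = Pattern.compile("\\{(\\w+)\\}");

    static String render(String template, Map<String, String> variables) {
        Matcher matcher = PLACEHOLDER.matcher(template);
        StringBuilder result = new StringBuilder();
        while (matcher.find()) {
            String name = matcher.group(1);
            String value = variables.get(name);
            if (value == null) {
                throw new IllegalArgumentException("No value for placeholder {" + name + "}");
            }
            // quoteReplacement keeps characters such as '$' in user input literal
            matcher.appendReplacement(result, Matcher.quoteReplacement(value));
        }
        matcher.appendTail(result);
        return result.toString();
    }
}
```

For example, render("Question: {question}", Map.of("question", "How should I invest $5,000?")) produces "Question: How should I invest $5,000?". The quoteReplacement call matters here: without it, the "$5" in the user's question would be interpreted as a regex group reference.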

Test AI interactions from the Dev UI

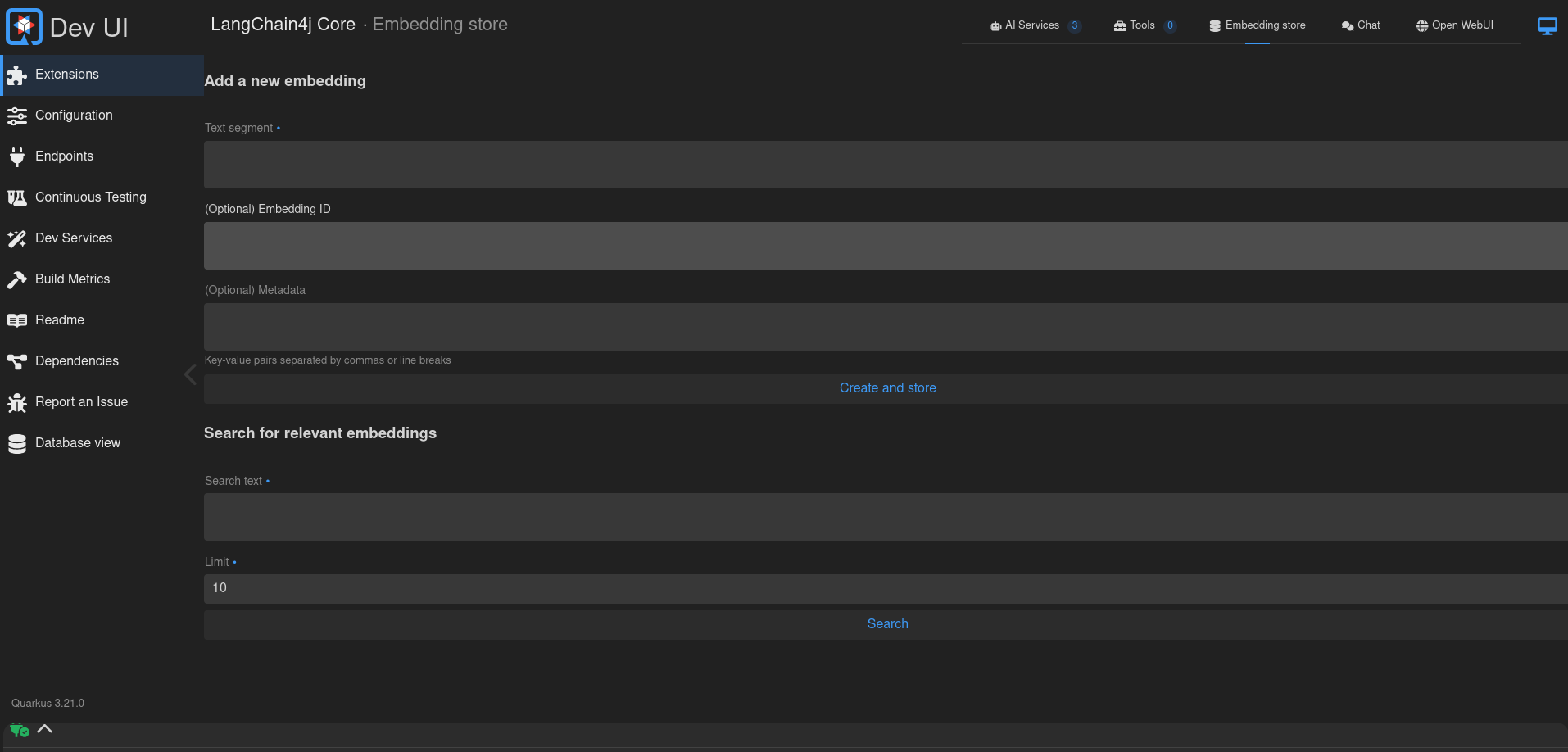

The LangChain4j Dev UI panel makes it easy to test AI responses, tweak system messages, and validate RAG functionality directly from your browser, without needing a REST client.

You can:

- Toggle RAG (if a RetrievalAugmentor is present).

- Override the system message on the fly.

- Send prompts and inspect responses.

Figure 3 shows this chat panel in the Dev UI.

This UI panel is invaluable for fine-tuning prompts, validating output, and experimenting with system behaviors during development.

Enable retrieval-augmented generation (RAG)

RAG lets your chatbot give more accurate answers by referencing your own content, like PDFs, docs, or Markdown files, instead of relying solely on what the language model learned during training.

In WealthWise, we use pgvector to store vector embeddings directly in PostgreSQL. You'll need the following dependency in your pom.xml:

```xml
<dependency>
    <groupId>io.quarkiverse.langchain4j</groupId>
    <artifactId>quarkus-langchain4j-pgvector</artifactId>
</dependency>
```

pgvector is a PostgreSQL extension that adds a vector column type, so you can store document embeddings alongside your other data and search them efficiently by vector similarity.
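Similarity search compares the embedding of the user's question with each stored embedding using a distance function. pgvector offers several, including cosine distance through its <=> operator. The following minimal sketch shows only the underlying math: it does not say which metric the WealthWise store is configured with, and real pgvector queries run inside PostgreSQL, optionally backed by approximate HNSW or IVFFlat indexes. VectorSimilaritySketch is a hypothetical name.

```java
class VectorSimilaritySketch {

    // Cosine similarity: dot(a, b) / (|a| * |b|), ranging from -1 to 1.
    static double cosineSimilarity(float[] a, float[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("Vectors must have the same dimension");
        }
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += (double) a[i] * b[i];
            normA += (double) a[i] * a[i];
            normB += (double) b[i] * b[i];
        }
        if (normA == 0 || normB == 0) {
            throw new IllegalArgumentException("Cosine similarity is undefined for a zero vector");
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Cosine distance, as returned by pgvector's <=> operator: 1 - similarity (smaller means closer).
    static double cosineDistance(float[] a, float[] b) {
        return 1.0 - cosineSimilarity(a, b);
    }
}
```

Cosine similarity ignores vector length and looks only at direction, which is why it is a common choice for text embeddings: two chunks about the same topic point in a similar direction regardless of their length.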

Indexing domain-specific documents

In WealthWise, documents are indexed at application startup for demo simplicity. In real-world systems, indexing is often handled separately, for example by a background job, an admin endpoint, or a data pipeline, because documents change infrequently and re-embedding them on every startup wastes time and embedding-model calls.

Here's how it's done in the application:

TaxDeductionsIngestor.java: This class loads documents from a folder, splits them into chunks, generates vector embeddings, and stores them in the pgvector table.

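```java
import static dev.langchain4j.data.document.splitter.DocumentSplitters.recursive;

import dev.langchain4j.data.document.Document;
import dev.langchain4j.data.document.loader.FileSystemDocumentLoader;
import dev.langchain4j.data.document.parser.TextDocumentParser;
import dev.langchain4j.model.embedding.EmbeddingModel;
import dev.langchain4j.store.embedding.EmbeddingStore;
import dev.langchain4j.store.embedding.EmbeddingStoreIngestor;
import io.quarkus.logging.Log;
import io.quarkus.runtime.StartupEvent;
import jakarta.enterprise.context.ApplicationScoped;
import jakarta.enterprise.event.Observes;
import jakarta.inject.Inject;
import java.io.File;
import java.util.List;

@ApplicationScoped
public class TaxDeductionsIngestor {

    @Inject
    EmbeddingStore store; // backed by pgvector

    @Inject
    EmbeddingModel embeddingModel;

    public void ingestTaxDeductions(@Observes StartupEvent event) {
        Log.info("Ingesting documents...");

        // Load documents from local resources (path is relative to the project root, as in dev mode)
        List<Document> documents = FileSystemDocumentLoader.loadDocuments(
                new File("src/main/resources/documents/").toPath(),
                new TextDocumentParser());

        // Create the ingestor with a splitter and embedding model
        var ingestor = EmbeddingStoreIngestor.builder()
                .embeddingStore(store)
                .embeddingModel(embeddingModel)
                .documentSplitter(recursive(500, 0)) // Segments of up to 500 characters, no overlap
                .build();

        // Index the documents into pgvector
        ingestor.ingest(documents);
        Log.infof("Ingested %d documents.", documents.size());
    }
}
```

- @Observes StartupEvent: Runs the method on application startup.

- FileSystemDocumentLoader: Loads the files in a local folder, parsed here as plain text by TextDocumentParser.

- recursive(500, 0): Splits each document into segments of at most 500 characters with no overlap, trying paragraph, line, sentence, and word boundaries in turn. Smaller segments get more focused embeddings, so retrieval can return just the relevant parts of a document.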

You can drop domain-specific documents (e.g., tax tips, financial advice) into src/main/resources/documents/, and they'll be embedded and indexed the next time the application starts.
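The recursive(500, 0) splitter tries progressively finer boundaries until each segment fits the size limit. The following simplified plain-Java sketch illustrates that idea under stated assumptions: a character-based limit, no overlap, and only paragraph, line, and word boundaries, with a hard cut as a last resort. It is not LangChain4j's DocumentSplitters implementation, which also splits by sentence, and ChunkingSketch is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

class ChunkingSketch {

    // Boundaries tried in order: paragraphs, lines, then words.
    private static final String[] SEPARATORS = {"\n\n", "\n", " "};

    static List<String> split(String text, int maxChars) {
        if (maxChars <= 0) {
            throw new IllegalArgumentException("maxChars must be positive");
        }
        List<String> chunks = new ArrayList<>();
        splitRecursive(text, maxChars, 0, chunks);
        return chunks;
    }

    private static void splitRecursive(String text, int maxChars, int level, List<String> chunks) {
        if (text.isBlank()) {
            return;
        }
        if (text.length() <= maxChars) {
            chunks.add(text.strip());
            return;
        }
        if (level == SEPARATORS.length) {
            // No boundary left (e.g. one very long word): fall back to a hard cut.
            for (int i = 0; i < text.length(); i += maxChars) {
                addIfNotBlank(text.substring(i, Math.min(text.length(), i + maxChars)), chunks);
            }
            return;
        }
        String separator = SEPARATORS[level];
        StringBuilder current = new StringBuilder();
        for (String part : text.split(Pattern.quote(separator))) {
            if (part.isEmpty()) {
                continue;
            }
            if (part.length() > maxChars) {
                // Piece is still too big: flush what we have, then retry with a finer separator.
                addIfNotBlank(current.toString(), chunks);
                current.setLength(0);
                splitRecursive(part, maxChars, level + 1, chunks);
                continue;
            }
            // Greedily merge small pieces while the chunk stays within the limit.
            int lengthIfAppended = current.length() == 0
                    ? part.length()
                    : current.length() + separator.length() + part.length();
            if (lengthIfAppended > maxChars) {
                addIfNotBlank(current.toString(), chunks);
                current.setLength(0);
            }
            if (current.length() > 0) {
                current.append(separator);
            }
            current.append(part);
        }
        addIfNotBlank(current.toString(), chunks);
    }

    private static void addIfNotBlank(String chunk, List<String> chunks) {
        String stripped = chunk.strip();
        if (!stripped.isEmpty()) {
            chunks.add(stripped);
        }
    }
}
```

Preferring natural boundaries keeps related sentences together in one segment, which tends to produce more meaningful embeddings than cutting text at arbitrary character offsets.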

Implement retrieval logic

When a user asks a question, the chatbot uses the TaxDeductionsAugmentor class to fetch relevant chunks from the embedding store, which are then fed into the language model along with the prompt.

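```java
import dev.langchain4j.model.embedding.EmbeddingModel;
import dev.langchain4j.rag.DefaultRetrievalAugmentor;
import dev.langchain4j.rag.RetrievalAugmentor;
import dev.langchain4j.rag.content.retriever.EmbeddingStoreContentRetriever;
import dev.langchain4j.store.embedding.EmbeddingStore;
import jakarta.enterprise.context.ApplicationScoped;
import java.util.function.Supplier;

@ApplicationScoped
public class TaxDeductionsAugmentor implements Supplier<RetrievalAugmentor> {

    private final EmbeddingStoreContentRetriever retriever;

    // Single constructor: Quarkus injects the embedding store and model
    TaxDeductionsAugmentor(EmbeddingStore store, EmbeddingModel model) {
        retriever = EmbeddingStoreContentRetriever.builder()
                .embeddingModel(model)
                .embeddingStore(store)
                .maxResults(20) // Fetch the 20 most similar segments
                .build();
    }

    @Override
    public RetrievalAugmentor get() {
        return DefaultRetrievalAugmentor.builder()
                .contentRetriever(retriever)
                .build();
    }
}
```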

This is where the actual retrieval logic lives:

- When a prompt is received, LangChain4j uses the EmbeddingModel to convert it into a vector.

- That vector is compared against the stored vectors using pgvector.

- The most relevant document segments (up to 20, as set by maxResults) are selected and added to the prompt context for the model.
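The three retrieval steps above can be sketched in plain Java as an in-memory top-k search followed by prompt augmentation. This is an illustration under stated assumptions, not LangChain4j's implementation: Segment and RetrievalSketch are hypothetical, the store is a plain list rather than pgvector, the query embedding is assumed to be precomputed, and the text used to inject the retrieved segments is an assumed format (DefaultRetrievalAugmentor uses its own configurable content injector).

```java
import java.util.Comparator;
import java.util.List;

// Hypothetical in-memory stand-in for a stored text segment and its embedding.
record Segment(String text, float[] embedding) {}

class RetrievalSketch {

    // Cosine similarity between two non-zero vectors of equal length.
    static double cosine(float[] a, float[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += (double) a[i] * b[i];
            normA += (double) a[i] * a[i];
            normB += (double) b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Analogous to maxResults(k): keep the k segments most similar to the query embedding.
    static List<Segment> topK(float[] queryEmbedding, List<Segment> store, int k) {
        return store.stream()
                .sorted(Comparator.comparingDouble((Segment s) -> cosine(queryEmbedding, s.embedding())).reversed())
                .limit(k)
                .toList();
    }

    // Assumed injection format: append the retrieved text to the user's question.
    static String augment(String userMessage, List<Segment> retrieved) {
        StringBuilder prompt = new StringBuilder(userMessage)
                .append("\n\nAnswer using the following information:");
        for (Segment s : retrieved) {
            prompt.append("\n\n").append(s.text());
        }
        return prompt.toString();
    }
}
```

Capping the number of results keeps the augmented prompt within the model's context window while still passing along the segments most likely to contain the answer.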

With this setup, you get a powerful combination:

- A PostgreSQL-backed vector store via pgvector.

- An AI assistant that can reason over your own business content.

- A clean and modular architecture where document ingestion and augmentation are handled separately.

Manage embeddings in the Dev UI

The LangChain4j integration with Quarkus includes a Dev UI tab for embeddings (Figure 4), which lets you:

- Add new embeddings manually.

- Search for relevant embeddings.

- Inspect metadata.

- Debug RAG queries in real time.

This view is extremely helpful when debugging or experimenting with your vector store setup. You can verify what's been indexed, run similarity searches, and prototype your RAG flow, all from the browser.

Interact with the chatbot in real time

Once your AI service is configured, it's time to experience it in action.

In WealthWise, real-time interaction is made possible using:

- WebSockets, via the quarkus-websockets-next extension, enabling smooth, bidirectional communication between the front end and back end.

- Lit Web Components for building a reactive and modern UI.

- Quarkus Web Bundler from Quarkiverse to bundle and serve the front end alongside the back end.

- mvnpm.org to fetch npm packages (like Lit) as Maven dependencies, which avoids the need for a separate package.json or Node.js tooling.

This means you can manage your JavaScript dependencies right from your pom.xml, just like your Java ones, which streamlines builds and keeps your full-stack project inside Maven.

Simply run the application with:

```shell
./mvnw quarkus:dev
```

Then open your browser at http://localhost:8080 (Figure 5).

From there, type a financial question, such as "How should I invest $5,000?", and receive a Markdown-formatted, conversational response powered by Quarkus, LangChain4j, OpenAI, and pgvector, as shown in Figure 6.

Summary: Building AI-ready applications with Quarkus

In this article, we explored how Quarkus makes integrating AI into your Java applications both simple and powerful. Using the WealthWise project as a guide, we showed how Quarkus enables rapid, full-stack development with modern AI tooling:

- Dev Mode gives you instant feedback with hot reloads for fast iteration.

- Dev Services provisions databases automatically using Testcontainers, with no manual setup needed.

- Panache simplifies persistence logic for storing data like chat history with minimal boilerplate.

- LangChain4j brings powerful AI capabilities like chatbots and RAG into your codebase with type-safe, declarative Java interfaces.

- pgvector integrates seamlessly with PostgreSQL to enable vector-based similarity search for RAG.

- Dev UI makes all of this observable and testable, with built-in panels for:

  - Checking Dev Services and containerized databases

  - Exploring database contents via the Agroal extension

  - Chatting with your AI assistant directly in the browser

  - Managing embeddings and verifying RAG logic live

Whether you're building an intelligent assistant, a knowledge-driven bot, or a production-grade AI service, Quarkus and LangChain4j offer a productive and scalable foundation, with full-stack Java and zero infrastructure friction.