Virtualization platforms are used for workload consolidation. Although it is not common practice to oversubscribe resources on a virtualization platform, there are scenarios in which a virtualization host can end up running virtual machines that require more resources than the host can provide. This can happen during planned downtime of some of the hosts in the cluster, or during an unplanned outage, when the virtual machines from the affected hosts are likely to end up on the surviving hosts.

In these cases, it is very important for business continuity that the platform can sustain the additional virtual machines without failure and with minimal impact on performance. Using wasp-agent, we can facilitate memory overcommitment by assigning swap resources to the worker nodes. The agent also manages pod evictions when nodes are at high risk due to high swap I/O traffic or high utilization, and the thresholds that trigger these evictions are configurable. For more information, refer to Configuring higher VM workload density in the product documentation.
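To make the eviction behavior more concrete, here is a minimal Python sketch of a threshold check of this kind. It is hypothetical and is not wasp-agent's implementation: the class names, fields, and threshold values are invented for illustration, and reading "high utilization" as the fraction of node memory in use is an assumption. The real, configurable thresholds are described in the product documentation mentioned above.

```python
from dataclasses import dataclass
from typing import Optional


# HYPOTHETICAL: field names and threshold values are illustrative only and do
# not correspond to wasp-agent's actual configuration options.
@dataclass
class EvictionThresholds:
    max_swap_io_pages_per_sec: float  # combined swap-in + swap-out rate treated as "high traffic"
    max_utilization: float            # assumed: fraction of node memory in use (0.0 to 1.0)


@dataclass
class NodeSample:
    swap_in_pages_per_sec: float
    swap_out_pages_per_sec: float
    utilization: float


def eviction_reason(sample: NodeSample, limits: EvictionThresholds) -> Optional[str]:
    """Return why the node is at risk, or None if no eviction is warranted."""
    swap_io = sample.swap_in_pages_per_sec + sample.swap_out_pages_per_sec
    if swap_io > limits.max_swap_io_pages_per_sec:
        return "high swap I/O traffic"
    if sample.utilization > limits.max_utilization:
        return "high utilization"
    return None


limits = EvictionThresholds(max_swap_io_pages_per_sec=1000, max_utilization=0.9)
print(eviction_reason(NodeSample(800, 400, 0.5), limits))
```

In this example the combined swap rate of 1,200 pages per second exceeds the hypothetical 1,000 pages per second limit, so the sketch reports high swap I/O traffic. A real agent must also decide which pods to evict, which this sketch leaves out.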

How Red Hat OpenShift Virtualization handles memory oversubscription

The study discussed in this article shows that the Red Hat OpenShift Virtualization platform maintains workload continuity even when the host is oversubscribed, without any tuning. As expected, the performance impact varies by workload.

We evaluated four different databases in this study. Two of them, MariaDB and Postgres, are open source databases shipped as part of the Red Hat Enterprise Linux distribution. The other two were Microsoft SQL Server and Oracle.

This study focused on memory oversubscription because it is particularly disruptive. Not only does it have a large impact on performance, but in some cases it leads to virtual machines (VMs) being killed by the kernel's out-of-memory (OOM) killer. This typically happens when memory demand exceeds the memory available on the host. Provisioning swap on the host can avoid this scenario: the workloads continue to run, with a performance impact whose extent depends on the nature of the workloads.
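The failure mode described above comes down to a capacity check. The following Python sketch is a deliberate simplification: it assumes every VM touches all of its configured memory and ignores host overhead (OpenShift components, QEMU, and the host page cache), so it illustrates the reasoning rather than predicting when the OOM killer will actually act. The function name and the swap size used in the example are hypothetical.

```python
from typing import Sequence


def memory_outcome(vm_memory_gib: Sequence[float], host_ram_gib: float,
                   host_swap_gib: float) -> str:
    """Classify where the combined VM memory demand lands on the host.

    Simplifying assumptions: every VM touches all of its configured memory,
    and host overhead (OpenShift components, QEMU, page cache) is ignored.
    """
    demand = sum(vm_memory_gib)
    if demand <= host_ram_gib:
        return "fits in RAM"
    if demand <= host_ram_gib + host_swap_gib:
        return "excess paged to swap"
    return "OOM risk"
```

Using the VM and host sizes from the testing environment, `memory_outcome([20] * 12, 192, 0)` returns `"OOM risk"`, while the same VMs on a host with a hypothetical 64 GiB of swap return `"excess paged to swap"`.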

Testing environment

The host used in this study was a Dell PowerEdge R740XD with two Intel Xeon Gold 6130 CPUs @ 2.10 GHz, each with 16 cores, for a total of 32 cores and 64 logical CPUs (with hyperthreading), and 192 GiB of memory. Each VM had 6 vCPUs and 20 GiB of memory. Each VM ran its database workload to saturation, and transactions per minute (TPM) was the metric used to study the performance impact.

While TPM was used to track performance, the numbers are not published because doing so would invite comparisons between the databases. The databases were deliberately left untuned in order to stress the various subsystems, so publishing the numbers would only cause confusion about the performance characteristics of the databases under test.

The number of VMs was increased with each run until the host was oversubscribed, and the total TPM for each run was measured and charted. Table 1 shows the VM count, total vCPUs, and total memory for each run.

| Number of VMs | 1 | 2 | 4 | 8 | 12 |
|---|---|---|---|---|---|
| Total vCPUs | 6 | 12 | 24 | 48 | 72 |
| Total memory (GiB) | 20 | 40 | 80 | 160 | 240 |

The last column shows that the 12-VM run requested 240 GiB of memory on a host with 192 GiB, an oversubscription of 25% (240/192 = 1.25), and 72 vCPUs on a host with 64 logical CPUs, an oversubscription of 12.5% (72/64 = 1.125).
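The oversubscription percentages follow directly from Table 1 and the host specification. This small Python sketch recomputes them for every run. Like the 12.5% figure above, it counts hyperthreads as host CPUs, and it ignores memory reserved for the host itself. The constant and function names are illustrative.

```python
# Host capacity from the test environment: 2 sockets x 16 cores x 2 threads.
HOST_LOGICAL_CPUS = 2 * 16 * 2  # 64
HOST_MEMORY_GIB = 192

# Per-VM sizing used in every run.
VM_VCPUS = 6
VM_MEMORY_GIB = 20


def oversubscription(vm_count: int) -> tuple:
    """Return (memory %, CPU %) requested beyond host capacity.

    Negative values mean the run still fits, with that much headroom.
    """
    mem = vm_count * VM_MEMORY_GIB / HOST_MEMORY_GIB - 1
    cpu = vm_count * VM_VCPUS / HOST_LOGICAL_CPUS - 1
    return round(mem * 100, 1), round(cpu * 100, 1)


for n in (1, 2, 4, 8, 12):
    mem_pct, cpu_pct = oversubscription(n)
    print(f"{n:>2} VMs: memory {mem_pct:+.1f}%, CPU {cpu_pct:+.1f}%")
```

The 12-VM row prints `12 VMs: memory +25.0%, CPU +12.5%`, matching the figures above, while the 8-VM run still leaves headroom on both resources.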

The system under test ran the full set of OpenShift 4.17.5 cluster components. VM disk images were stored on three 1.5 TB NVMe drives striped together as a single LVMCluster, and swap was configured on a separate NVMe device.

Workload overview

HammerDB was the benchmarking tool used to drive the load for all four databases, with a focus on the aggregate TPM (transactions per minute) throughput that can be achieved by scaling up the total number of “instances.” Each VM contained its own client-server pair: the HammerDB client and the database it drove ran in the same VM.

The Linux VMs were configured with 20 GiB of memory and 6 vCPUs, otherwise using default template settings.

Scaling study

For the scaling study, a user load (that is, thread count) of 40 was chosen to represent a meaningful workload. HammerDB is a synthetic benchmark in which user counts do not represent maximum capacity, because each virtual user submits transactions as fast as possible with zero sleep delay. For each database, the VMs were started in the counts shown in Table 1, and the TPM was captured for each run. Because each database behaves differently, we look at the data for each one separately.
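Because each VM ran its own client-server pair, the result for a run is the sum of the TPM reported by every VM in it. The following Python sketch shows one way to turn those totals into scaling figures: efficiency relative to perfect linear scaling from the smallest run, and the percentage change from the previous run. It is an illustrative analysis helper, not the study's tooling; the function name and output keys are hypothetical, and since the study's TPM values are not published, no real numbers are shown.

```python
from typing import Dict, Optional


def scaling_report(total_tpm: Dict[int, float]) -> Dict[int, Dict[str, float]]:
    """Compare aggregate TPM per run against linear scaling and the previous run.

    total_tpm maps VM count -> sum of the TPM reported by every VM in that run.
    """
    counts = sorted(total_tpm)
    # Per-VM throughput of the smallest run is the baseline for linear scaling.
    baseline_per_vm = total_tpm[counts[0]] / counts[0]
    report: Dict[int, Dict[str, float]] = {}
    previous: Optional[int] = None
    for n in counts:
        tpm = total_tpm[n]
        row = {"efficiency": tpm / (baseline_per_vm * n)}
        if previous is not None:
            prev_tpm = total_tpm[previous]
            row["change_vs_previous_pct"] = (tpm - prev_tpm) / prev_tpm * 100
        report[n] = row
        previous = n
    return report
```

An efficiency near 1.0 means a run scaled linearly from the smallest run, and a negative `change_vs_previous_pct` at 12 VMs would indicate a throughput drop once the host became oversubscribed.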

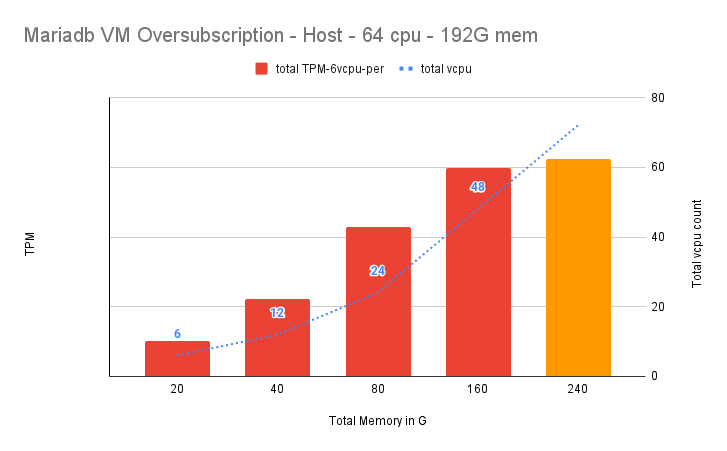

MariaDB

For MariaDB, the database buffer pool was set to 50% of the VM memory in each VM. MariaDB uses direct I/O, which bypasses the guest page cache, so memory in the VMs is used well below capacity. In this test we did not expect a significant drop in performance, because the active memory in the VMs is not being swapped out. The data is shown in Figure 1.

We observed that the total TPM scaled very well as VMs were added to the host. The host neared saturation at 8 VMs. When the VM count was increased to 12 VMs, memory was oversubscribed by 25% and CPUs by 12.5%, but there was no drop in performance.

Microsoft SQL Server

For SQL Server, we did not explicitly set any memory values for the database; by default, SQL Server uses 80% of the VM memory. Collectively, some of the VMs' active memory did get pushed to the swap disk on the host, but we did not see any significant performance drop in the oversubscription scenario. See Figure 2.

Oracle

For Oracle, the total System Global Area (SGA) size was set to 75% of the VM memory in each VM. Because Oracle uses direct I/O, the VM memory is not completely occupied, but with 75% allocated to the SGA, VM memory utilization was in the 90% range (Figure 3). As a result, when memory was oversubscribed, we saw a 13% drop in performance in this test.

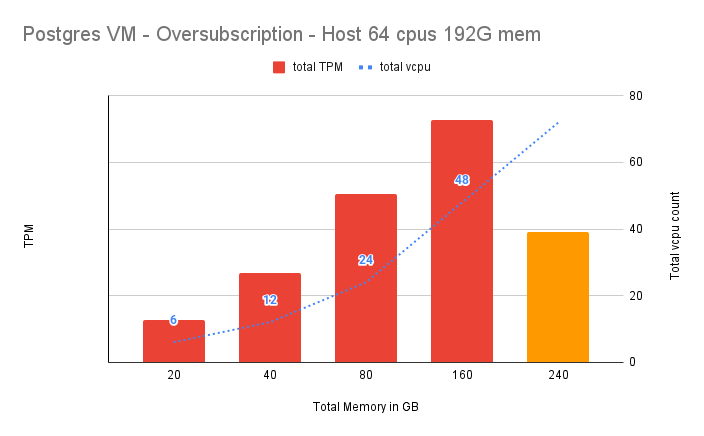

Postgres

For Postgres, the database buffer pool (shared_buffers) was set to 50% of the VM memory in each VM. However, as those familiar with Postgres know, Postgres relies on the system file cache in addition to its buffer pool, which leads to the entire memory of the VM being consumed. The effective_cache_size parameter tells the query planner how much of that cache to assume, but it does not itself cap memory use; in these tests, the file cache was intentionally left unconstrained so that all the memory would be consumed. This has a significant impact on performance and on the way TPM scales as the VM count increases. See Figure 4.
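The memory settings described in the four database sections can be summarized with a short Python sketch that computes, for a 20 GiB VM, how much memory each database's main memory area takes and how much is nominally left over. The parameter names are the standard knobs for each database (innodb_buffer_pool_size for MariaDB, memory.memorylimitmb set through mssql-conf for SQL Server on Linux, shared_buffers for Postgres), but the article does not say which parameter was used to size Oracle's SGA, so sga_target is an assumption. `DB_MEMORY_SETTINGS` and `memory_plan` are hypothetical names.

```python
VM_MEMORY_GIB = 20

# Fraction of VM memory given to each database's main memory area, as described
# in the sections above. ASSUMPTION: sga_target is used as a stand-in for
# "total SGA size"; the other names are the usual parameters for each database.
DB_MEMORY_SETTINGS = {
    "MariaDB": ("innodb_buffer_pool_size", 0.50),
    "SQL Server": ("memory.memorylimitmb (default)", 0.80),
    "Oracle": ("sga_target (assumed)", 0.75),
    "Postgres": ("shared_buffers", 0.50),
}


def memory_plan(vm_gib: float = VM_MEMORY_GIB) -> dict:
    """Return the database allocation and nominal remainder per database."""
    plan = {}
    for db, (param, fraction) in DB_MEMORY_SETTINGS.items():
        db_gib = vm_gib * fraction
        plan[db] = {"parameter": param, "db_gib": db_gib, "remaining_gib": vm_gib - db_gib}
    return plan


for db, row in memory_plan().items():
    print(f"{db:<10} {row['parameter']:<32} {row['db_gib']:>5.1f} GiB, "
          f"{row['remaining_gib']:>5.1f} GiB left for the OS, caches, and other processes")
```

The remaining column is only an upper bound on free memory: as described above, Postgres fills it with the guest file cache, and the Oracle VMs ran at around 90% utilization despite a 75% SGA, which is why these two databases behaved differently under oversubscription.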

Summary

As this study shows, the OpenShift Virtualization platform scales well with a variety of workloads running in the VMs, as long as the VMs fit within the host's resources. It also handles 25% memory oversubscription well for a variety of workloads. The performance impact during oversubscription varies with the workload and with memory utilization inside the VMs.

Appendix: System configuration

- CPU: Intel(R) Xeon(R) Gold 6130 x 2

- 16 cores per socket x 2 threads per core, 64 logical CPUs in total

- Memory: 192 GiB RAM

- Storage used for testing: 3x KIOXIA Corporation NVMe (1.5 TB each)

Configuring wasp-agent is an important part of allowing the host to oversubscribe memory. To understand how wasp-agent is set up and configured, refer to the article Evaluating memory overcommitment in OpenShift Virtualization.