Last time we started on our Journey to Delivery Efficiency with a conversation on The Thing, The Life, and The Who (see the post here). In other words, we need to know what the thing is, that it has a lifecycle (the life), and that different people care about it during that lifecycle (the who). These basic elements set us up to care for these things throughout their lifecycle and, ultimately, to be Faster, Better, Stronger, and Wiser in delivering them.

Walking down the street recently, I heard someone utter a simple yet profound statement:

"Be Better"

When you think about it, this simple statement can have a significant impact on how you think about the things you do and how you do them. At its core, though, the statement's simplicity hides an implicit requirement: you have to know where you are today. That's where we pick up our journey.

Where am I?

When it comes to information technology systems and things, the next natural question is: “OK, I know what my thing is and how I need to be concerned about it, but how do I do this better?” Here we are talking about anything from software development projects, to infrastructure projects, to standard service requests such as password resets, even to custom consulting engagements.

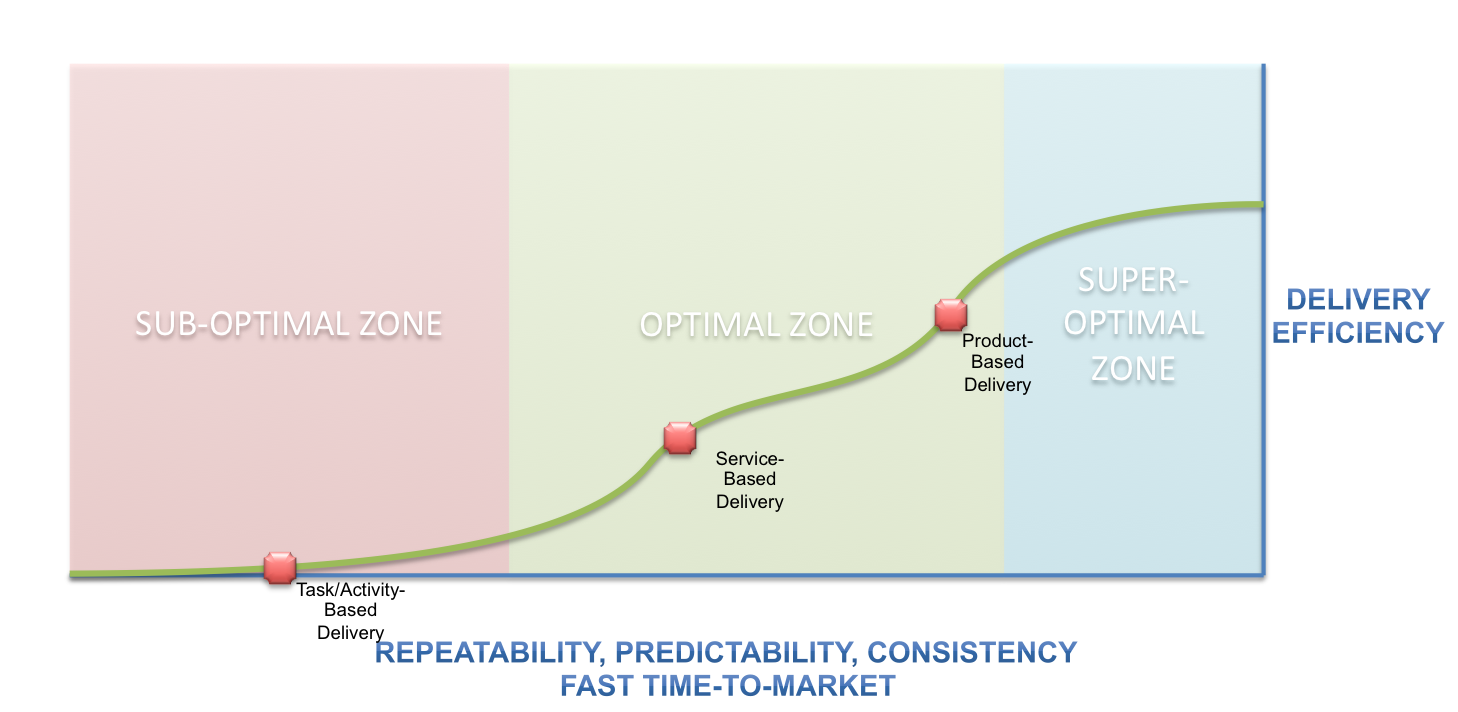

Although these things may seem as diverse as the individuals on a project team, there is a common way to think about them from an efficiency perspective. Their delivery falls along a natural continuum of efficiency, and on this continuum there are three zones that describe how well we deliver: Sub-Optimal, Optimal, and Super-Optimal. The zones are self-explanatory; the key question is where along this continuum the thing you deliver should live. Why? Because the goal isn’t to put everything in the Super-Optimal bucket. It is about finding the right place for your thing and moving it from where it is today to where you want it to be.

The figure above represents the various points on this continuum where we can shift from one level of delivery efficiency to another, and shows how consistency, repeatability, and predictability increase and time-to-market improves as we do. In other words, how our efficiency evolves.

Lead by Example

Let’s take a real-world example: provisioning a compute device (i.e., a virtual or physical machine). Where should this live on the continuum? At least in the Optimal zone, but maybe even in the Super-Optimal zone: highly repeatable, highly predictable, consistent, and delivered very fast. A decade or more ago, if I wanted compute power, I would submit a request (maybe even via email) to IT and wait. And wait. And wait some more. Call this Sub-Optimal. Most requests were handled as one-offs that had to be approved individually and go through a lengthy process involving a number of teams before I actually got access to my server. Call this "Task-Based" Delivery.

As IT teams got smarter, they would perhaps provide me with a list of standard compute devices to choose from, along with a standard request form and workflow. The intent of this standardization was to deliver the thing faster, time and time again. For those of you versed in Enterprise Architecture, this may sound familiar: it resembles the Technical Reference Model (see The Open Group Architecture Framework), along with inklings of Service Management. Taking the step from one-off to standardized lets the delivery team get slightly faster. We're evolving the thing.

Now take the next step: virtual machines enter the equation. From a delivery perspective, IT is able to deliver faster and more consistently because we have both defined standards and a defined, consistent approach to provisioning that includes a high degree of automation. The automation in this case comes from the virtual machine tools, where the delivery team can point and click to spin up an image. Call this "Service-Based" Delivery, which sits in the Optimal zone.

Enter Cloud Computing. Now compute resources can be provisioned on demand in a self-service manner, and every request is delivered reliably, predictably, quickly, and consistently. Call this "Product-Based" Delivery: the high end of the Optimal zone, bordering on Super-Optimal delivery of compute.

It’s Not Easy Being Here

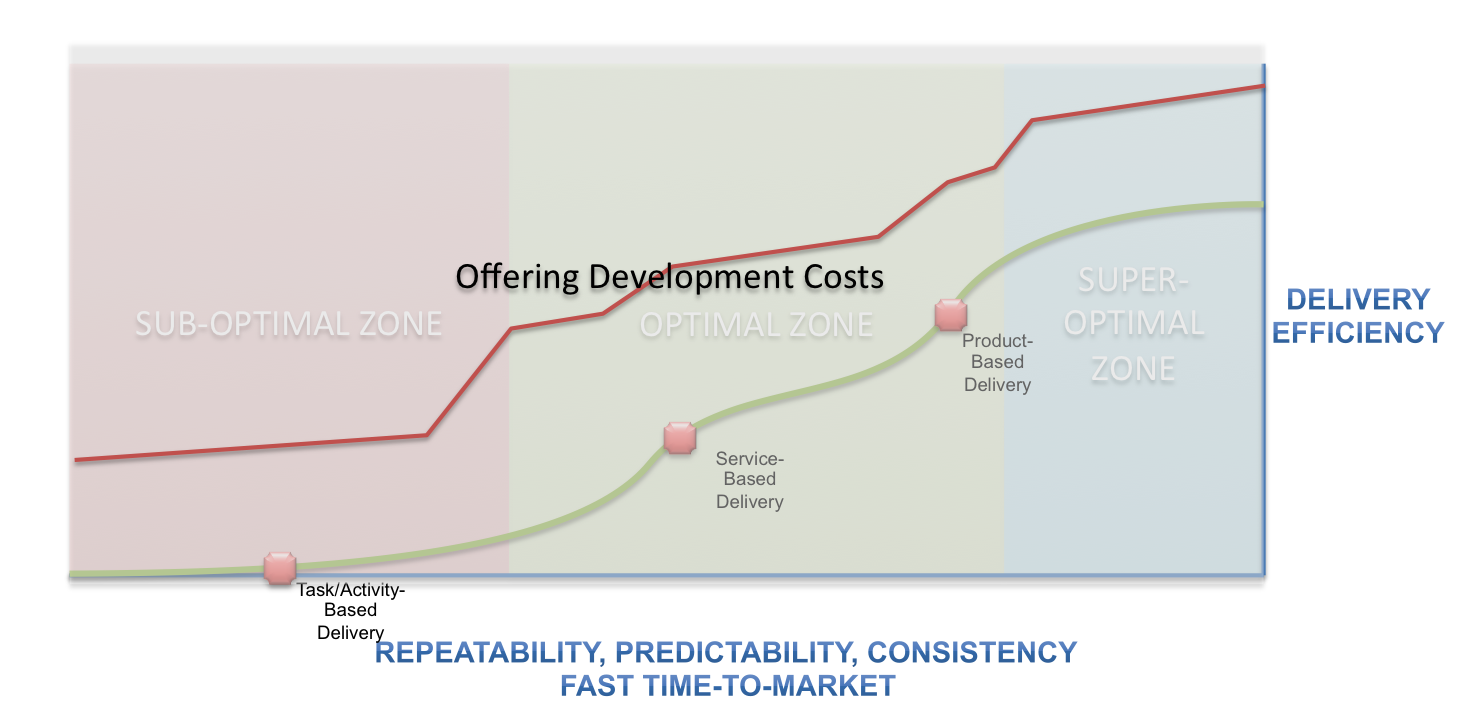

So why shouldn’t we aim to have all of our things delivered in a Super-Optimal fashion? It certainly would be great from a consumer’s point of view. But take a look at the graph above, where we show the relative costs of moving from one zone to another: Offering Development Costs. Maturing the thing to the point where it can be delivered with a high degree of efficiency comes at a cost.

Ask anyone who has worked on a help desk answering the same call over and over (and over). Eventually they’ll think, “Why can’t I just automate what I’m doing?” A nice thought, but to automate a manual process you need to consider the time, effort, and cost of the automation itself. The help desk technician can spend time answering calls or automating, and time spent on one is time not spent on the other. So we need to ask ourselves: what does it cost to mature the thing to a higher level, and does that cost make sense?
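To make the help desk trade-off a little more concrete, here is a minimal break-even sketch in Python. It is not a method from this series; the function name and every number in it (calls per week, minutes per call before and after automation, hours to build the automation) are hypothetical assumptions chosen only to show the shape of the "does that cost make sense?" calculation.

```python
import math


def break_even_weeks(calls_per_week: float,
                     minutes_per_call_manual: float,
                     minutes_per_call_automated: float,
                     automation_build_hours: float) -> float:
    """Weeks until the time saved by automating repays the time spent building it.

    Hypothetical helper: all inputs are illustrative assumptions, and the model
    ignores ongoing maintenance of the automation. Returns math.inf when the
    automation saves no time per week, since it would never pay for itself.
    """
    saved_minutes_per_week = calls_per_week * (
        minutes_per_call_manual - minutes_per_call_automated)
    if saved_minutes_per_week <= 0:
        return math.inf  # no weekly savings, so no break-even point
    build_minutes = automation_build_hours * 60
    return build_minutes / saved_minutes_per_week


if __name__ == "__main__":
    # Hypothetical help desk: 50 password-reset calls a week, 10 minutes each
    # by hand, 1 minute each once self-service exists, 40 hours to build it.
    weeks = break_even_weeks(50, 10, 1, 40)
    print(f"Break-even after about {weeks:.1f} weeks")  # prints "Break-even after about 5.3 weeks"
```

Under these made-up numbers the technician would recover the build time in a little over five weeks; with fewer calls or a longer build, the answer can easily stretch past the point where the investment makes sense.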

Coming up next…

How do we know that it makes sense to invest in a higher level of efficiency when there are upfront costs and we may not have empirical evidence? Next time we’ll talk about the hidden dimension along this continuum that can help us make those decisions without doing a full ROI analysis: Relationships (and not just the interpersonal kind!).

#guerrilla_ea

Last updated:

February 26, 2024