
Attach a physical network to your workloads and define micro-segmentation

To begin, let’s understand the scenario we’re working within as well as the personas involved.

To get the full benefit from this lesson, you need the following prerequisites:

- An OpenShift cluster, version >= 4.15.

- OVN Kubernetes CNI configured as the default network provider.

- Kubernetes-nmstate operator deployed.

In this lesson, you will:

- Learn how to use OpenShift networking technologies to connect virtual machines on different nodes through pre-existing physical networks.

- Learn how MultiNetworkPolicy can be utilized for implementing micro-segmentation, allowing for exceptionally fine-grained access control, down to a per-machine basis.

The scenario

In this scenario, we will deploy workloads (both pods and VMs) requiring access to a relational database reachable via the physical network (i.e., deployed outside Kubernetes).

Personas

There are two personas at play here:

- Developer: A person who creates and runs virtual machines. This person must ask the cluster admin for the attachment names for the networks to which the VMs will connect.

- Cluster admin: A person with cluster network admin permissions. They must be authorized to create two types of objects: NetworkAttachmentDefinition and NodeNetworkConfigurationPolicy.

User stories

As a virtual machine administrator, I would like to deploy workloads (both pods and VMs) able to connect directly to the physical network.

As a virtual machine administrator, I expect my workloads' IP addresses to be either provided by services running on the underlying network or statically assigned.

The SDN perspective

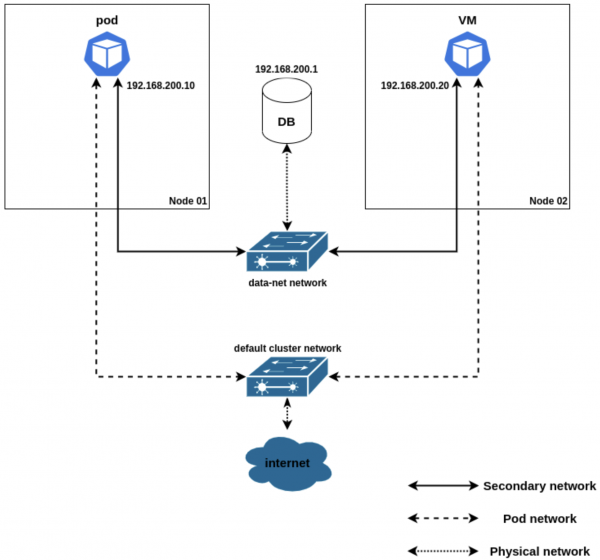

The workloads will be connected to two different networks. The first is the cluster's default network, owned and managed by Kubernetes, which grants them access to the internet. The second is an additional secondary network named data-net, implemented by OVN-Kubernetes and connected to the physical network, through which they will access the database (DB) deployed outside Kubernetes.

Both workloads (and the DB) will be available on the same subnet (192.168.200.0/24). Figure 1 depicts the scenario explained above, along with the static IP address allocations chosen for the workloads.
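As a quick sanity check of this addressing plan, the following Python sketch uses the standard ipaddress module to confirm that the static addresses used in this lesson (192.168.200.1 for the DB, 192.168.200.10 for the pod, 192.168.200.20 for the VM) sit inside 192.168.200.0/24 and do not collide. The helper name check_plan is illustrative only and is not part of any OpenShift tooling.

```python
import ipaddress

SUBNET = ipaddress.ip_network("192.168.200.0/24")

# Static allocations taken from the manifests used in this lesson.
ALLOCATIONS = {
    "database": "192.168.200.1",
    "pod": "192.168.200.10",
    "vm": "192.168.200.20",
}


def check_plan(subnet, allocations):
    """Return a list of problems; an empty list means the plan is consistent."""
    problems = []
    seen = {}
    for owner, raw in allocations.items():
        addr = ipaddress.ip_address(raw)
        if addr not in subnet:
            problems.append(f"{owner}: {addr} is outside {subnet}")
        elif addr in (subnet.network_address, subnet.broadcast_address):
            problems.append(f"{owner}: {addr} is reserved in {subnet}")
        if addr in seen:
            problems.append(f"{owner}: {addr} is already used by {seen[addr]}")
        seen.setdefault(addr, owner)
    return problems


if __name__ == "__main__":
    print(check_plan(SUBNET, ALLOCATIONS) or "address plan looks consistent")
```

Running the same check against your own allocations before writing the manifests can catch duplicated or out-of-range addresses early.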

Physical network mapping

While the diagram above depicts the logical networks (which are separate), we assume your cluster’s nodes have a single network interface available. Thus, it will need to be shared amongst the cluster’s default network and its secondary networks.

Figure 2 showcases the physical network topology.

The single interface within the cluster is attached to the br-ex Open vSwitch (OVS) bridge. This single interface is configured as a VLAN trunk (accepting all the VLANs) and connects to the outside world (e.g., the internet) over the default VLAN (untagged), and to a private physical network over VLAN 123 (tagged). In this VLAN, we have deployed an SQL database, to which our workloads will connect.
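To make "tagged" concrete: on a trunk, frames for VLAN 123 carry a 4-byte IEEE 802.1Q tag, while frames on the default VLAN carry none. The Python sketch below builds and decodes such a tag; the function names are illustrative, and the priority and DEI bits are simply assumed to be zero.

```python
import struct

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q-tagged frame


def dot1q_tag(vlan_id, priority=0, dei=0):
    """Build the 4-byte 802.1Q tag that follows the source MAC address."""
    if not 1 <= vlan_id <= 4094:
        raise ValueError("VLAN ID must be in the range 1..4094")
    if not 0 <= priority <= 7 or dei not in (0, 1):
        raise ValueError("priority must be 0..7 and dei must be 0 or 1")
    # Tag control information: 3 bits priority, 1 bit DEI, 12 bits VLAN ID.
    tci = (priority << 13) | (dei << 12) | vlan_id
    return struct.pack("!HH", TPID_8021Q, tci)


def vlan_of(tag):
    """Extract the VLAN ID from a 4-byte 802.1Q tag."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError("not an 802.1Q tag")
    return tci & 0x0FFF


if __name__ == "__main__":
    tag = dot1q_tag(123)
    print(tag.hex(), vlan_of(tag))  # prints "8100007b 123"
```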

The first step is to point the traffic from your secondary network to the desired OVS bridge. This step will be executed by the cluster admin by provisioning a NodeNetworkConfigurationPolicy object in your OpenShift cluster:

    apiVersion: nmstate.io/v1
    kind: NodeNetworkConfigurationPolicy
    metadata:
      name: ovs-share-same-gw-bridge
    spec:
      nodeSelector:
        node-role.kubernetes.io/worker: ''
      desiredState:
        ovn:
          bridge-mappings:
          - localnet: data-net
            bridge: br-ex

The NodeNetworkConfigurationPolicy above is applied to the worker nodes (via the node selector) and maps the traffic from the data-net network to the br-ex OVS bridge. Please refer to the OpenShift documentation for more information about this topic.

The physical network definition

First, provision the namespaces. Run the following commands to create the data-adapter and data-consumer namespaces:

    oc create namespace data-adapter
    oc create namespace data-consumer

Once the namespaces have been created, define the physical network attachment, which exposes the network to the OpenShift Virtualization VMs. The definition must be provisioned in every namespace that needs access to the physical network, unless it is created in the default project: NetworkAttachmentDefinitions created in the default project are accessible to all other projects. Apply the following YAML:

    ---
    apiVersion: k8s.cni.cncf.io/v1
    kind: NetworkAttachmentDefinition
    metadata:
      name: db-access-net
      namespace: data-adapter
    spec:
      config: '{
          "cniVersion": "0.3.1",
          "name": "data-net",
          "netAttachDefName": "data-adapter/db-access-net",
          "topology": "localnet",
          "type": "ovn-k8s-cni-overlay",
          "vlanID": 123
        }'
    ---
    apiVersion: k8s.cni.cncf.io/v1
    kind: NetworkAttachmentDefinition
    metadata:
      name: db-access-net
      namespace: data-consumer
    spec:
      config: '{
          "cniVersion": "0.3.1",
          "name": "data-net",
          "netAttachDefName": "data-consumer/db-access-net",
          "topology": "localnet",
          "type": "ovn-k8s-cni-overlay",
          "vlanID": 123
        }'

The most relevant information to understand from the snippet above is that we're granting the data-adapter and data-consumer namespaces access to the data-net network. In the physical network, this traffic will use the VLAN tag 123. Your physical network must be configured to accept traffic tagged with this particular ID.
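The network name inside each NetworkAttachmentDefinition config has to line up with the localnet name in the bridge mapping, and in the manifests above netAttachDefName follows the namespace/name pattern of the object that carries it. A small Python sketch can check both before anything is applied. The data is copied by hand from this lesson's manifests, and check_nad is a hypothetical helper, not an OpenShift tool.

```python
import json

# Bridge mapping from the NodeNetworkConfigurationPolicy in this lesson.
BRIDGE_MAPPINGS = [{"localnet": "data-net", "bridge": "br-ex"}]

# The two NetworkAttachmentDefinitions, reduced to the fields we check.
NADS = [
    {
        "namespace": "data-adapter",
        "name": "db-access-net",
        "config": '{"cniVersion": "0.3.1", "name": "data-net", '
                  '"netAttachDefName": "data-adapter/db-access-net", '
                  '"topology": "localnet", "type": "ovn-k8s-cni-overlay", '
                  '"vlanID": 123}',
    },
    {
        "namespace": "data-consumer",
        "name": "db-access-net",
        "config": '{"cniVersion": "0.3.1", "name": "data-net", '
                  '"netAttachDefName": "data-consumer/db-access-net", '
                  '"topology": "localnet", "type": "ovn-k8s-cni-overlay", '
                  '"vlanID": 123}',
    },
]


def check_nad(nad, bridge_mappings):
    """Return a list of inconsistencies found in one attachment definition."""
    cfg = json.loads(nad["config"])
    problems = []
    localnets = {m["localnet"] for m in bridge_mappings}
    if cfg.get("name") not in localnets:
        problems.append(f"network {cfg.get('name')!r} has no bridge mapping")
    expected = f"{nad['namespace']}/{nad['name']}"
    if cfg.get("netAttachDefName") != expected:
        problems.append(f"netAttachDefName should be {expected!r}")
    return problems


if __name__ == "__main__":
    for nad in NADS:
        print(nad["namespace"], check_nad(nad, BRIDGE_MAPPINGS) or "ok")
```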

The NetworkAttachmentDefinition network name (i.e., the name field inside spec.config) must match the localnet name listed in the NodeNetworkConfigurationPolicy under spec.desiredState.ovn.bridge-mappings.

Once the NetworkAttachmentDefinitions have been provisioned, we can provision the workloads involved: a pod and a VirtualMachine. Apply the following YAML to make that happen:

    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: turtle-db-adapter
      namespace: data-adapter
      annotations:
        k8s.v1.cni.cncf.io/networks: '[
          {
            "name": "db-access-net",
            "ips": [ "192.168.200.10/24" ]
          }
        ]'
      labels:
        role: db-adapter
    spec:
      containers:
      - name: db-adapter
        env:
        - name: DB_USER
          value: splinter
        - name: DB_PASSWORD
          value: cheese
        - name: DB_NAME
          value: turtles
        - name: DB_IP
          value: "192.168.200.1"
        - name: HOST
          value: "192.168.200.10"
        - name: PORT
          value: "9000"
        image: ghcr.io/maiqueb/rust-turtle-viewer:main
        ports:
        - name: webserver
          protocol: TCP
          containerPort: 9000
        securityContext:
          runAsUser: 1000
          privileged: false
          seccompProfile:
            type: RuntimeDefault
          capabilities:
            drop: ["ALL"]
          runAsNonRoot: true
          allowPrivilegeEscalation: false
    ---
    apiVersion: kubevirt.io/v1
    kind: VirtualMachine
    metadata:
      name: vm-workload
      namespace: data-consumer
    spec:
      runStrategy: Always
      template:
        metadata:
          labels:
            role: web-client
        spec:
          domain:
            devices:
              disks:
              - name: containerdisk
                disk:
                  bus: virtio
              - name: cloudinitdisk
                disk:
                  bus: virtio
              interfaces:
              - name: default
                masquerade: {}
              - name: data-network
                bridge: {}
            machine:
              type: ""
            resources:
              requests:
                memory: 1024M
          networks:
          - name: default
            pod: {}
          - name: data-network
            multus:
              networkName: db-access-net
          terminationGracePeriodSeconds: 0
          volumes:
          - name: containerdisk
            containerDisk:
              image: quay.io/containerdisks/fedora:40
          - name: cloudinitdisk
            cloudInitNoCloud:
              networkData: |
                version: 2
                ethernets:
                  eth0:
                    dhcp4: true
                  eth1:
                    addresses: [ 192.168.200.20/24 ]
              userData: |-
                #cloud-config
                password: fedora
                chpasswd: { expire: False }
                packages:
                - postgresql

Micro-segmentation

After the previous configurations, the pod, the VM, and the database are all in the same network and can all communicate with each other. But what if you want to restrict access so that only the pod can reach the database, without changing networks?

This is where the MultiNetworkPolicy object comes in: it lets us implement micro-segmentation. First, we must enable MultiNetworkPolicy on our cluster, which is done by editing the Network object:

    apiVersion: operator.openshift.io/v1
    kind: Network
    metadata:
      name: cluster
    spec:
      useMultiNetworkPolicy: true
      …

Now we create a default-deny policy that blocks all traffic associated with this namespace/NetworkAttachmentDefinition pair:

apiVersion: k8s.cni.cncf.io/v1beta1

kind: MultiNetworkPolicy

metadata:

name: default-deny-to-database-server

namespace: data-adapter

annotations:

k8s.v1.cni.cncf.io/policy-for: data-adapter/db-access-net

spec:

podSelector: {}

policyTypes:

- Ingress

- Egress

ingress: []

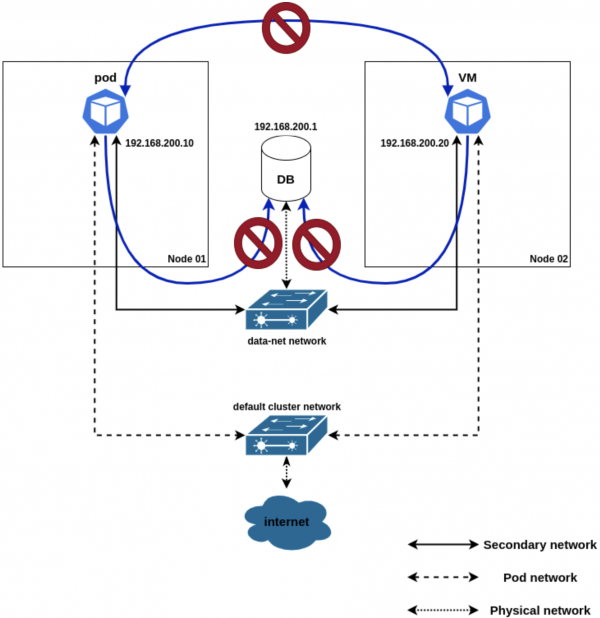

egress: []At this point in time, neither the pod nor the VM can access the database server (or each other). See Figure 3.
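A default-deny policy works because network policies are additive allow-lists: once any policy selects a workload for a direction, only traffic that some policy explicitly allows gets through. The Python sketch below is a deliberately simplified model of that rule for egress only. It ignores namespaces, ports, and ingress, it is not how OVN-Kubernetes implements enforcement, and the allow policy in it is a hypothetical example.

```python
import ipaddress


def selects(pod_selector, labels):
    """An empty selector matches every workload; otherwise all labels must match."""
    return all(labels.get(k) == v for k, v in pod_selector.items())


def egress_allowed(labels, dest_ip, policies):
    """Decide whether a workload with these labels may send traffic to dest_ip."""
    dest = ipaddress.ip_address(dest_ip)
    applicable = [
        p for p in policies
        if "Egress" in p["policyTypes"] and selects(p["podSelector"], labels)
    ]
    if not applicable:
        return True  # no policy isolates this workload for egress
    # Isolated: allowed only if at least one applicable policy permits it.
    return any(
        dest in ipaddress.ip_network(cidr)
        for p in applicable
        for cidr in p.get("egress_cidrs", [])
    )


DEFAULT_DENY = {"podSelector": {}, "policyTypes": ["Ingress", "Egress"],
                "egress_cidrs": []}
# Hypothetical allow rule for workloads labeled role=db-adapter.
ALLOW_DB = {"podSelector": {"role": "db-adapter"}, "policyTypes": ["Egress"],
            "egress_cidrs": ["192.168.200.1/32"]}

if __name__ == "__main__":
    pod = {"role": "db-adapter"}
    print(egress_allowed(pod, "192.168.200.1", []))                        # True
    print(egress_allowed(pod, "192.168.200.1", [DEFAULT_DENY]))            # False
    print(egress_allowed(pod, "192.168.200.1", [DEFAULT_DENY, ALLOW_DB]))  # True
```

The model also shows why a selector that matches no workload changes nothing: the default-deny policy remains the only applicable one, so traffic stays blocked.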

Let’s enable it so that the VM can access the database server:

apiVersion: k8s.cni.cncf.io/v1beta1

kind: MultiNetworkPolicy

metadata:

name: allow-traffic-pod

namespace: data-adapter

annotations:

k8s.v1.cni.cncf.io/policy-for: data-adapter/db-access-net

spec:

podSelector:

matchLabels:

vm: my-virtual-machine

policyTypes:

- Ingress

ingress:

- from:

- namespaceSelector:

matchLabels:

kubernetes.io/metadata.name: data-adapter

egress:

- to:

- ipBlock:

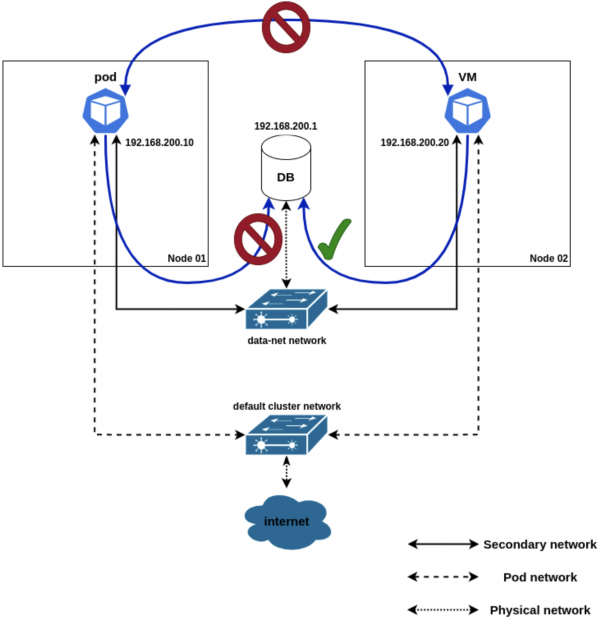

cidr: 192.168.200.1/32The above policy now makes it so that our VM (which has the label vm=my-virtual-machine) can now talk to the database server (whose IP is 192.168.200.1). Keep in mind that you can use the psql command available in your VM to check connectivity towards the DB server located outside the cluster. See Figure 4.
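If psql is not at hand, a plain TCP connection attempt gives a rough signal of whether a policy lets traffic through. The Python sketch below assumes the database listens on PostgreSQL's default port 5432, which this lesson does not state, and the helper name can_connect is illustrative.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable networks.
        return False


# Example, run from inside the workload (port 5432 is an assumption):
#   can_connect("192.168.200.1", 5432)
```

A successful TCP handshake only shows that the path is open; it says nothing about database credentials, so psql remains the more complete check.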

This learning path showed you how to use OpenShift Networking technologies to connect virtual machines on different nodes through pre-existing physical networks. This connectivity paradigm can be utilized for interconnecting workloads that have east/west traffic patterns as well as providing north/south connectivity for traffic coming and going from the cluster. Effective use of micro-segmentation can be a foundational layer towards implementing effective zero-trust networks.

We have also illustrated how MultiNetworkPolicy can be utilized for implementing micro-segmentation, allowing for exceptionally fine-grained access control, down to a per-machine basis.

To learn more about OpenShift Virtualization and virtual machines, explore these offerings:

- Learning path: OpenShift virtualization and application modernization using the Developer Sandbox

- Article: Migrate virtual applications to Red Hat OpenShift Virtualization

- Article: Import your virtual appliances into OpenShift Virtualization

- Article: How to back up and restore virtual machines with OpenShift

- Article: A self-service approach to building virtual machines at scale