This article demonstrates how to override the cluster default network in Red Hat OpenShift and connect your workloads using an isolated Layer 2 network with managed IPAM (IP Address Management). This type of network addresses the challenges that virtualization workloads face on the Kubernetes platform by providing east-west communication without NAT (Network Address Translation) and a stable IPAM configuration throughout the workload's entire life cycle. To do this, we will introduce the user-defined network (UDN) feature.

Motivation

From a virtualization standpoint, we envision two types of OpenShift users: the traditional virtualization user, who seeks an isolated network without NAT for east-west communication (à la OpenStack Neutron), and the Kubernetes-savvy user, who seeks a fully managed network experience, complete with IPAM. This frees the user from assigning static addresses to their workloads, or having to deploy DHCP (Dynamic Host Configuration Protocol) servers into their networks.

No matter the type of user, both of them share the same goals: they need stable IPAM configuration (IP addresses, gateways, and DNS configuration), and the networking solution must work in both bare metal and cloud platforms.

Problem

In this particular case, it is the Kubernetes platform itself that is getting in the way of achieving the user’s goals—specifically, the Kubernetes networking model. Simply put, it is too opinionated.

It provides a single network to connect all the workloads in the platform. If users want to restrict traffic to or from their workloads in any way, their only option is to provision NetworkPolicy objects. This is expensive for two reasons: someone has to write and maintain those policies, and they have to be reconciled every time something changes in the network, which happens a lot, since a single network connects everything.

Adding insult to injury, Kubernetes network policies operate at the network and transport layers of the OSI model (layers 3 and 4), so there simply isn’t a Kubernetes-native way to perform Layer 2 isolation.

Goals

Now that we understand why and what we’re trying to solve with these isolated Layer 2 networks, let’s enumerate the goals we have for them:

- Workload/tenant isolation: The ability to group different types of applications in different isolated networks that cannot talk to each other.

- Overlapping subnets: Create multiple networks in your cluster with the same pod subnet range, so that the same setup can be replicated.

- Native Kubernetes API integration: Full support for the Kubernetes network API. This means services, network policies, and admin network policies can be used in UDNs.

- Native integration with OpenShift network APIs: Support for OpenShift network API. Currently, this means egress IPs can be used in UDNs. There are plans to add support for OpenShift routes.

- Stable IPAM configuration: Workloads require their IPs, gateway, and DNS configuration to be stable during their life cycle.

- Cloud platform support: Packets must egress the cluster nodes with the IP address of the node the workload runs on; otherwise, they are dropped by the cloud provider.

- Access to some Kubernetes infrastructure services that are available on the cluster default network: Access to kube-dns and kube-api is still available to workloads attached to UDNs.

- No NAT on east-west traffic.

Requirements

- OpenShift cluster, version >= 4.18

- Red Hat OpenShift Virtualization and MetalLB installed and configured (the latter is required only for the services part). Refer to the OpenShift documentation for instructions.

Use cases

There are two major types of use cases we address via the OpenShift UDN feature: namespace isolation and isolated cluster-wide network. Keep in mind that all the goals noted in the previous section apply to both use cases.

Namespace isolation

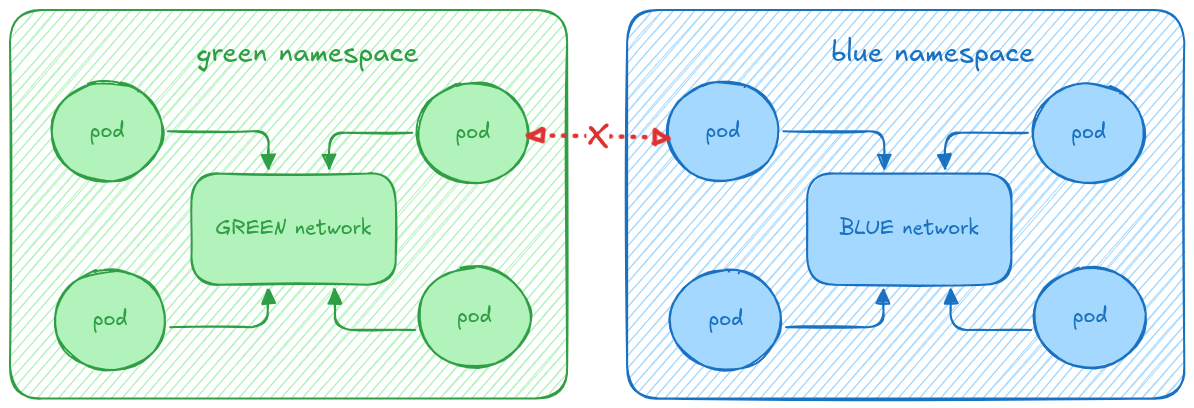

In this use case all the workloads in a namespace can access one another, but they are isolated from the rest of the cluster. This means that workloads in another UserDefinedNetwork cannot access them or be reached by them. The same applies to workloads attached to the cluster default network, which cannot reach UDN pods (or vice versa).

You can find a graphical representation of the concept in Figure 1: communication between the workloads in the green namespace and the workloads in the blue namespace is blocked, but each workload can freely communicate with other pods in its own namespace.

To achieve this use case, in OpenShift 4.18, the user can provision a UserDefinedNetwork CR, which indicates the namespace’s workloads will be connected by a primary UDN, and workloads on other UDNs (or the cluster’s default network) will not be able to access them.

The namespace’s workloads will be able to egress the cluster and integrate with the Kubernetes features listed in the Goals section. For that, the user must first create the namespace and explicitly indicate the intent to override the default cluster network with the UDN by adding the k8s.ovn.org/primary-user-defined-network label.

Refer to the following YAML for a reference manifest:

---
apiVersion: v1
kind: Namespace
metadata:
  name: green
  labels:
    k8s.ovn.org/primary-user-defined-network: ""
---
apiVersion: k8s.ovn.org/v1
kind: UserDefinedNetwork
metadata:
  name: namespace-scoped
  namespace: green
spec:
  topology: Layer2
  layer2:
    role: Primary
    subnets:
    - 203.203.0.0/16
    ipam:
      lifecycle: Persistent

Now that we have provisioned the UDN, let’s create some workloads attached to it to showcase the most relevant features. In this instance, we are creating a web server container and two virtual machines (VMs). Provision the following YAML manifest:

---
apiVersion: v1
kind: Pod
metadata:
  name: webserver
  namespace: green
spec:
  containers:
  - args:
    - "netexec"
    - "--http-port"
    - "9000"
    image: registry.k8s.io/e2e-test-images/agnhost:2.45
    imagePullPolicy: IfNotPresent
    name: agnhost-container
  restartPolicy: Always
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default
---
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  labels:
    kubevirt.io/vm: vm-a
  name: vm-a
  namespace: green
spec:
  runStrategy: Always
  template:
    metadata:
      name: vm-a
      namespace: green
    spec:
      domain:
        devices:
          disks:
          - disk:
              bus: virtio
            name: containerdisk
          - disk:
              bus: virtio
            name: cloudinitdisk
          interfaces:
          - name: isolated-namespace
            binding:
              name: l2bridge
          rng: {}
        resources:
          requests:
            memory: 2048M
      networks:
      - pod: {}
        name: isolated-namespace
      terminationGracePeriodSeconds: 0
      volumes:
      - containerDisk:
          image: quay.io/kubevirt/fedora-with-test-tooling-container-disk:v1.4.0
        name: containerdisk
      - cloudInitNoCloud:
          userData: |-
            #cloud-config
            password: fedora
            chpasswd: { expire: False }
        name: cloudinitdisk
---
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  labels:
    kubevirt.io/vm: vm-b
  name: vm-b
  namespace: green
spec:
  runStrategy: Always
  template:
    metadata:
      name: vm-b
      namespace: green
    spec:
      domain:
        devices:
          disks:
          - disk:
              bus: virtio
            name: containerdisk
          - disk:
              bus: virtio
            name: cloudinitdisk
          interfaces:
          - name: isolated-namespace
            binding:
              name: l2bridge
          rng: {}
        resources:
          requests:
            memory: 2048M
      networks:
      - pod: {}
        name: isolated-namespace
      terminationGracePeriodSeconds: 0
      volumes:
      - containerDisk:
          image: quay.io/kubevirt/fedora-with-test-tooling-container-disk:v1.4.0
        name: containerdisk
      - cloudInitNoCloud:
          userData: |-
            #cloud-config
            password: fedora
            chpasswd: { expire: False }
        name: cloudinitdisk

East-west connectivity

Once the three workloads are running, we can verify that east-west traffic works by accessing the HTTP server on the web server pod from one of the VMs. First, though, we need to find the web server’s IP address. We will rely on the k8s.v1.cni.cncf.io/network-status annotation (defined by the Kubernetes Network Plumbing Working Group) for that:

kubectl get pods -ngreen webserver -ojsonpath="{@.metadata.annotations.k8s\.v1\.cni\.cncf\.io\/network-status}" | jq
[
  {
    "name": "ovn-kubernetes",
    "interface": "eth0",
    "ips": [
      "10.244.1.10"
    ],
    "mac": "0a:58:0a:f4:01:0a",
    "dns": {}
  },
  {
    "name": "ovn-kubernetes",
    "interface": "ovn-udn1",
    "ips": [
      "203.203.0.4"
    ],
    "mac": "0a:58:cb:cb:00:04",
    "default": true,
    "dns": {}
  }
]

# login using fedora/fedora
virtctl console -ngreen vm-a
Successfully connected to vm-a console. The escape sequence is ^]

vm-a login: fedora
Password:

[fedora@vm-a ~]$ curl 203.203.0.4:9000/hostname
webserver # reply from the webserver

If you try to access the web server from a workload attached to another UDN or to the default cluster network, it will simply not succeed.
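The address lookup at the start of this section can also be scripted. The following is a minimal Python sketch, assuming the annotation JSON has the shape shown above and that the entry flagged `"default": true` is the primary UDN interface, as it is in this example. The function name and the sample addresses are illustrative and not part of any OpenShift tooling.

```python
import json

# Sample shaped like the network-status annotation shown earlier.
# The addresses are illustrative placeholders.
SAMPLE_STATUS = """
[
  {"name": "ovn-kubernetes", "interface": "eth0",
   "ips": ["10.244.1.10"], "mac": "0a:58:0a:f4:01:0a", "dns": {}},
  {"name": "ovn-kubernetes", "interface": "ovn-udn1",
   "ips": ["203.203.0.4"], "mac": "0a:58:cb:cb:00:04",
   "default": true, "dns": {}}
]
"""


def primary_network_ips(network_status: str) -> list[str]:
    """Return the IPs of the entry flagged "default": true, or [] if none."""
    for entry in json.loads(network_status):
        if entry.get("default") is True:
            return list(entry.get("ips", []))
    return []


if __name__ == "__main__":
    print(primary_network_ips(SAMPLE_STATUS))
```

In practice you would feed the function the string returned by the kubectl command above instead of the embedded sample.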

Egress to the internet

You can also check that egress to the internet works as expected:

[fedora@vm-a ~]$ ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1400 qdisc fq_codel state UP group default qlen 1000
    link/ether 0a:58:cb:cb:00:05 brd ff:ff:ff:ff:ff:ff
    altname enp1s0
    inet 203.203.0.5/16 brd 203.203.255.255 scope global dynamic noprefixroute eth0
       valid_lft 2786sec preferred_lft 2786sec
    inet6 fe80::858:cbff:fecb:5/64 scope link
       valid_lft forever preferred_lft forever
[fedora@vm-a ~]$ ip r
default via 203.203.0.1 dev eth0 proto dhcp metric 100
203.203.0.0/16 dev eth0 proto kernel scope link src 203.203.0.5 metric 100
[fedora@vm-a ~]$ ping -c 2 www.google.com
PING www.google.com (142.250.200.100) 56(84) bytes of data.
64 bytes from 142.250.200.100 (142.250.200.100): icmp_seq=1 ttl=115 time=7.15 ms
64 bytes from 142.250.200.100 (142.250.200.100): icmp_seq=2 ttl=115 time=6.55 ms

--- www.google.com ping statistics ---
2 packets transmitted, 2 received, 0% packet loss, time 1002ms
rtt min/avg/max/mdev = 6.548/6.849/7.150/0.301 ms

Seamless live-migration

Let’s now check seamless live-migration of one of these VMs. To prove our claim that the TCP connection will survive the migration of the VM, we will monitor the throughput of an iperf session while we migrate vm-b, on which we will start the iperf server:

# let's start the iperf server in `vm-b` (for instance)
# password is also fedora/fedora
virtctl console -ngreen vm-b
Successfully connected to vm-b console. The escape sequence is ^]

vm-b login: fedora
Password:

[fedora@vm-b ~]$ iperf3 -s -p 9500 -1
-----------------------------------------------------------
Server listening on 9500 (test #1)
-----------------------------------------------------------

Let’s connect to it from the vm-a VM, which will be the iperf client.

virtctl console -ngreen vm-a
Successfully connected to vm-a console. The escape sequence is ^]

# keep in mind the iperf server is located at 203.203.0.6, port 9500 ...
[fedora@vm-a ~]$ iperf3 -c 203.203.0.6 -p9500 -t3600
Connecting to host 203.203.0.6, port 9500
[  5] local 203.203.0.5 port 56618 connected to 203.203.0.6 port 9500
[ ID] Interval           Transfer     Bitrate         Retr  Cwnd
[  5]   0.00-1.00   sec  2.59 GBytes  22.2 Gbits/sec    0   2.30 MBytes
[  5]   1.00-2.00   sec  2.53 GBytes  21.7 Gbits/sec    0   2.78 MBytes
[  5]   2.00-3.00   sec  2.95 GBytes  25.3 Gbits/sec    0   3.04 MBytes
[  5]   3.00-4.00   sec  3.93 GBytes  33.7 Gbits/sec    0   3.04 MBytes
[  5]   4.00-5.00   sec  3.83 GBytes  32.9 Gbits/sec    0   3.04 MBytes
[  5]   5.00-6.00   sec  3.99 GBytes  34.3 Gbits/sec    0   3.04 MBytes
[  5]   6.00-7.00   sec  3.89 GBytes  33.4 Gbits/sec    0   3.04 MBytes
[  5]   7.00-8.00   sec  3.88 GBytes  33.3 Gbits/sec    0   3.04 MBytes
[  5]   8.00-9.00   sec  3.94 GBytes  33.8 Gbits/sec    0   3.04 MBytes
[  5]   9.00-10.00  sec  3.80 GBytes  32.7 Gbits/sec    0   3.04 MBytes
[  5]  10.00-11.00  sec  3.98 GBytes  34.2 Gbits/sec    0   3.04 MBytes
[  5]  11.00-12.00  sec  3.67 GBytes  31.5 Gbits/sec    0   3.04 MBytes
[  5]  12.00-13.00  sec  3.87 GBytes  33.3 Gbits/sec    0   3.04 MBytes
[  5]  13.00-14.00  sec  3.82 GBytes  32.8 Gbits/sec    0   3.04 MBytes
[  5]  14.00-15.00  sec  3.80 GBytes  32.6 Gbits/sec    0   3.04 MBytes
...

Let’s now migrate the server VM using the virtctl tool:

virtctl migrate -ngreen vm-b
VM vm-b was scheduled to migrate

kubectl get pods -ngreen -w
NAME                       READY   STATUS            RESTARTS   AGE
virt-launcher-vm-a-r44bp   2/2     Running           0          22m
virt-launcher-vm-b-44vs4   2/2     Running           0          22m
virt-launcher-vm-b-wct76   0/2     PodInitializing   0          8s
webserver                  1/1     Running           0          22m
virt-launcher-vm-b-wct76   2/2     Running           0          13s
virt-launcher-vm-b-wct76   2/2     Running           0          16s
virt-launcher-vm-b-wct76   2/2     Running           0          16s
virt-launcher-vm-b-wct76   2/2     Running           0          16s
virt-launcher-vm-b-wct76   2/2     Running           0          17s
virt-launcher-vm-b-44vs4   1/2     NotReady          0          22m
virt-launcher-vm-b-44vs4   0/2     Completed         0          23m
virt-launcher-vm-b-44vs4   0/2     Completed         0          23m

# and this is what the client sees when the migration occurs:
...
[  5]  40.00-41.00  sec  2.79 GBytes  23.9 Gbits/sec    0   3.04 MBytes
[  5]  41.00-42.00  sec  2.53 GBytes  21.7 Gbits/sec    0   3.04 MBytes
[  5]  42.00-43.00  sec  2.57 GBytes  22.1 Gbits/sec    0   3.04 MBytes
[  5]  43.00-44.00  sec  2.67 GBytes  22.9 Gbits/sec    0   3.04 MBytes
[  5]  44.00-45.00  sec  2.94 GBytes  25.2 Gbits/sec    0   3.04 MBytes
[  5]  45.00-46.00  sec  3.16 GBytes  27.1 Gbits/sec    0   3.04 MBytes
[  5]  46.00-47.00  sec  3.33 GBytes  28.6 Gbits/sec    0   3.04 MBytes
[  5]  47.00-48.00  sec  3.11 GBytes  26.7 Gbits/sec    0   3.04 MBytes
[  5]  48.00-49.00  sec  2.62 GBytes  22.5 Gbits/sec    0   3.04 MBytes
[  5]  49.00-50.00  sec  2.77 GBytes  23.8 Gbits/sec    0   3.04 MBytes
[  5]  50.00-51.00  sec  2.79 GBytes  23.9 Gbits/sec    0   3.04 MBytes
[  5]  51.00-52.00  sec  2.19 GBytes  18.8 Gbits/sec    0   3.04 MBytes
[  5]  52.00-53.00  sec  3.29 GBytes  28.3 Gbits/sec  2229  2.30 MBytes
[  5]  53.00-54.00  sec  3.80 GBytes  32.6 Gbits/sec    0   2.43 MBytes
[  5]  54.00-55.00  sec  5.08 GBytes  43.6 Gbits/sec    0   2.60 MBytes
[  5]  55.00-56.00  sec  5.18 GBytes  44.5 Gbits/sec    0   2.62 MBytes
[  5]  56.00-57.00  sec  5.35 GBytes  46.0 Gbits/sec    0   2.74 MBytes
[  5]  57.00-58.00  sec  5.14 GBytes  44.2 Gbits/sec    0   2.89 MBytes
[  5]  58.00-59.00  sec  5.28 GBytes  45.4 Gbits/sec    0   2.93 MBytes
[  5]  59.00-60.00  sec  5.22 GBytes  44.9 Gbits/sec    0   2.97 MBytes
[  5]  60.00-61.00  sec  4.97 GBytes  42.7 Gbits/sec    0   2.99 MBytes
[  5]  61.00-62.00  sec  4.78 GBytes  41.1 Gbits/sec    0   3.02 MBytes
[  5]  62.00-63.00  sec  5.14 GBytes  44.1 Gbits/sec    0   3.02 MBytes
[  5]  63.00-64.00  sec  5.06 GBytes  43.5 Gbits/sec    0   3.02 MBytes
[  5]  64.00-65.00  sec  5.02 GBytes  43.1 Gbits/sec    0   3.02 MBytes
[  5]  65.00-66.00  sec  5.07 GBytes  43.5 Gbits/sec    0   3.02 MBytes
[  5]  66.00-67.00  sec  5.34 GBytes  45.9 Gbits/sec    0   3.02 MBytes
[  5]  67.00-68.00  sec  4.99 GBytes  42.9 Gbits/sec    0   3.02 MBytes
[  5]  68.00-69.00  sec  5.17 GBytes  44.4 Gbits/sec    0   3.02 MBytes
[  5]  69.00-70.00  sec  5.39 GBytes  46.3 Gbits/sec    0   3.02 MBytes
[  5]  70.00-70.29  sec  1.57 GBytes  46.0 Gbits/sec    0   3.02 MBytes
- - - - - - - - - - - - - - - - - - - - - - - - -
[ ID] Interval           Transfer     Bitrate         Retr
[  5]   0.00-70.29  sec   260 GBytes  31.8 Gbits/sec  2229             sender
[  5]   0.00-70.29  sec  0.00 Bytes   0.00 bits/sec                    receiver

As you can see, the TCP connection survives the migration and there's no downtime: just a slight hiccup in seconds 52-53.
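To locate such a hiccup without scanning the output by eye, you could filter the iperf3 client output for intervals that report retransmissions. The sketch below is a hypothetical helper and not part of iperf3. It assumes the interval lines follow the column layout shown above (interval, transfer, bitrate, Retr, Cwnd), and it deliberately skips the final sender/receiver summary lines.

```python
import re

# One iperf3 client interval line: stream id, interval, transfer, bitrate,
# retransmissions (Retr), and congestion window (Cwnd).
INTERVAL_LINE = re.compile(
    r"\[\s*\d+\]\s+(\d+\.\d+)-(\d+\.\d+)\s+sec\s+"  # [ 5] 52.00-53.00 sec
    r"[\d.]+\s+\w+\s+"                               # 3.29 GBytes
    r"[\d.]+\s+\w+/sec\s+"                           # 28.3 Gbits/sec
    r"(\d+)\s+[\d.]+\s+\w+"                          # 2229 2.30 MBytes
)

# A few lines copied from the client output above, plus the sender summary.
SAMPLE_OUTPUT = """\
[ 5] 51.00-52.00 sec 2.19 GBytes 18.8 Gbits/sec 0 3.04 MBytes
[ 5] 52.00-53.00 sec 3.29 GBytes 28.3 Gbits/sec 2229 2.30 MBytes
[ 5] 53.00-54.00 sec 3.80 GBytes 32.6 Gbits/sec 0 2.43 MBytes
[ 5] 0.00-70.29 sec 260 GBytes 31.8 Gbits/sec 2229 sender
"""


def intervals_with_retransmits(iperf_output: str) -> list[tuple[float, float, int]]:
    """Return (start, end, retransmits) for every interval with Retr > 0."""
    hits = []
    for line in iperf_output.splitlines():
        match = INTERVAL_LINE.search(line)
        if match and int(match.group(3)) > 0:
            hits.append(
                (float(match.group(1)), float(match.group(2)), int(match.group(3)))
            )
    return hits


if __name__ == "__main__":
    print(intervals_with_retransmits(SAMPLE_OUTPUT))
```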

NetworkPolicy integration

In the previous section on east-west connectivity, remember how you were able to reach into the web server pod? Let’s provision a network policy to prevent that from happening. Actually, let’s provision a more useful network policy that only allows ingress traffic over TCP port 9001.
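As a rough mental model of what such a policy does, consider the sketch below. It is a toy illustration in Python with hypothetical names, not how OVN-Kubernetes enforces policies. It assumes the behavior described above: once the pods are selected by an ingress policy, only traffic matching one of the listed protocol and port pairs is admitted, and the rule places no restriction on the traffic source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AllowedPort:
    """One protocol/port pair admitted by the ingress rule."""
    protocol: str
    port: int


# Mirrors the single ingress rule described above: TCP port 9001 only.
INGRESS_ALLOWED = (AllowedPort("TCP", 9001),)


def ingress_allowed(protocol: str, port: int, allowed=INGRESS_ALLOWED) -> bool:
    """Return True if the (protocol, port) pair matches an allowed entry.

    Anything that does not match is treated as dropped, which models pods
    that are selected by a policy of type Ingress.
    """
    return any(
        entry.protocol == protocol.upper() and entry.port == port
        for entry in allowed
    )


if __name__ == "__main__":
    for proto, port in [("TCP", 9000), ("TCP", 9001), ("UDP", 9001)]:
        verdict = "allowed" if ingress_allowed(proto, port) else "dropped"
        print(f"{proto}/{port}: {verdict}")
```

Under this model, the existing web server on TCP port 9000 becomes unreachable, while a server listening on TCP port 9001 stays reachable.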

Refer to the following manifest:

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: ingress-port-9001-only
  namespace: green
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  ingress:
  - ports:
    - protocol: TCP
      port: 9001

Once this policy is provisioned, our VMs will no longer be able to access the webserver pod. But let’s create another web server, one that listens on the correct port (i.e., 9001):

---
apiVersion: v1
kind: Pod
metadata:
  name: new-webserver
  namespace: green
spec:
  containers:
  - args:
    - "netexec"
    - "--http-port"
    - "9001"
    image: registry.k8s.io/e2e-test-images/agnhost:2.45
    imagePullPolicy: IfNotPresent
    name: agnhost-container
  # pins the pod to a node named ovn-worker; adjust or remove this line
  # if no node with that name exists in your cluster
  nodeName: ovn-worker
  restartPolicy: Always
  securityContext: {}
  serviceAccount: default
  serviceAccountName: default

Let’s try to access it:

# example for a pod named new-webserver, which listens on port 9001
kubectl get pods -ngreen new-webserver -ojsonpath="{@.metadata.annotations.k8s\.v1\.cni\.cncf\.io\/network-status}" | jq
[
  {
    "name": "ovn-kubernetes",
    "interface": "eth0",
    "ips": [
      "10.244.1.13"
    ],
    "mac": "0a:58:0a:f4:01:0d",
    "dns": {}
  },
  {
    "name": "ovn-kubernetes",
    "interface": "ovn-udn1",
    "ips": [
      "203.203.0.8"
    ],
    "mac": "0a:58:cb:cb:00:08",
    "default": true,
    "dns": {}
  }
]

virtctl console -ngreen vm-a
Successfully connected to vm-a console. The escape sequence is ^]

[fedora@vm-a ~]$ curl 203.203.0.8:9001/hostname
new-webserver

Interconnected namespaces isolation

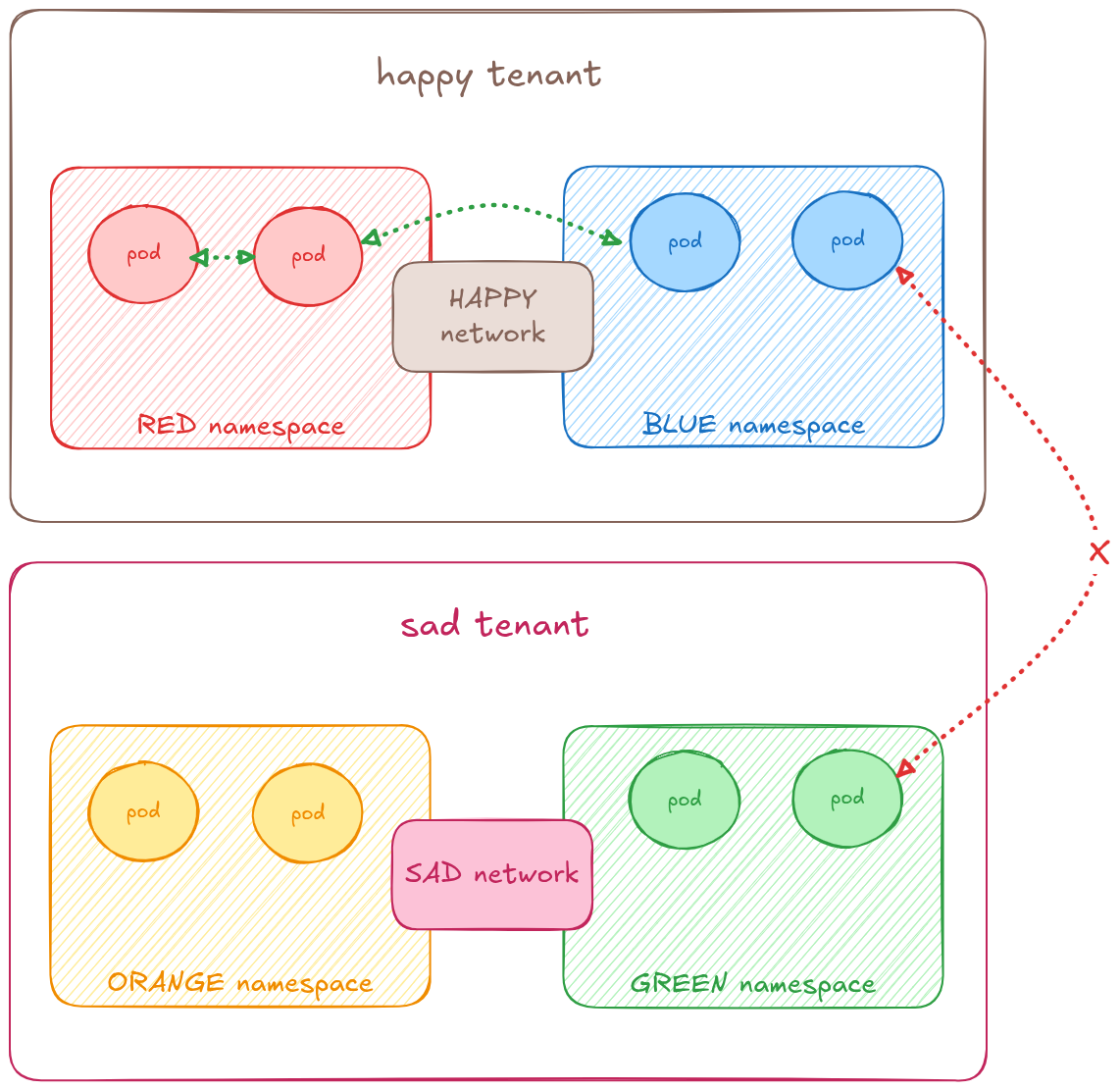

This other use case allows the user to connect different namespaces according to their needs. Workloads in these interconnected namespaces can reach, and be reached by, any workload connected to their isolated network, but they are unreachable from workloads attached to other cluster-wide UDNs or to the cluster default network.

You can find a graphical representation of the concept in Figure 2, where the pods in the red and blue namespaces are able to communicate with each other over the happy network, but are not connected to the orange and green namespaces, which are attached to the sad network.

To achieve this use case, the user must provision a different CR named ClusterUserDefinedNetwork. It is a cluster-wide resource that can be provisioned exclusively by the cluster-admin. It defines the network and also specifies which namespaces are interconnected by that network.

This scenario would be configured by applying the following YAML manifest:

---
apiVersion: v1
kind: Namespace
metadata:
  name: red-namespace
  labels:
    k8s.ovn.org/primary-user-defined-network: ""
---
apiVersion: v1
kind: Namespace
metadata:
  name: blue-namespace
  labels:
    k8s.ovn.org/primary-user-defined-network: ""
---
apiVersion: k8s.ovn.org/v1
kind: ClusterUserDefinedNetwork
metadata:
  name: happy-tenant
spec:
  namespaceSelector:
    matchExpressions:
    - key: kubernetes.io/metadata.name
      operator: In
      values:
      - red-namespace
      - blue-namespace
  network:
    topology: Layer2
    layer2:
      role: Primary
      ipam:
        lifecycle: Persistent
      subnets:
      - 203.203.0.0/16
---
apiVersion: v1
kind: Namespace
metadata:
  name: orange-namespace
  labels:
    k8s.ovn.org/primary-user-defined-network: ""
---
apiVersion: v1
kind: Namespace
metadata:
  name: green-namespace
  labels:
    k8s.ovn.org/primary-user-defined-network: ""
---
apiVersion: k8s.ovn.org/v1
kind: ClusterUserDefinedNetwork
metadata:
  name: sad-tenant
spec:
  namespaceSelector:
    matchExpressions:
    - key: kubernetes.io/metadata.name
      operator: In
      values:
      - orange-namespace
      - green-namespace
  network:
    topology: Layer2
    layer2:
      role: Primary
      ipam:
        lifecycle: Persistent
      subnets:
      - 192.168.0.0/16

As can be seen, there are two differences from the UserDefinedNetwork CRD:

- It is a cluster-wide resource (i.e., no metadata.namespace)

- It features a spec.namespaceSelector in which the admin can list the namespaces to interconnect

The latter configuration is a Kubernetes standard namespace selector.
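To make the selector semantics concrete, here is a small Python sketch of how a label selector's matchLabels and matchExpressions are evaluated against a namespace's labels. It illustrates the standard Kubernetes label selector rules rather than the code OVN-Kubernetes actually runs, and the function names are made up for this example. It relies on the kubernetes.io/metadata.name label, which Kubernetes sets on every namespace to the namespace's own name.

```python
# Selector copied from the happy-tenant manifest above.
HAPPY_SELECTOR = {
    "matchExpressions": [
        {
            "key": "kubernetes.io/metadata.name",
            "operator": "In",
            "values": ["red-namespace", "blue-namespace"],
        }
    ]
}


def matches_expression(labels: dict[str, str], expr: dict) -> bool:
    """Evaluate a single matchExpressions entry against a label set."""
    key, operator = expr["key"], expr["operator"]
    values = expr.get("values", [])
    if operator == "In":
        return key in labels and labels[key] in values
    if operator == "NotIn":
        # Objects without the key also satisfy NotIn.
        return key not in labels or labels[key] not in values
    if operator == "Exists":
        return key in labels
    if operator == "DoesNotExist":
        return key not in labels
    raise ValueError(f"unsupported operator: {operator}")


def namespace_selected(labels: dict[str, str], selector: dict) -> bool:
    """All matchLabels and matchExpressions requirements are ANDed."""
    for key, value in selector.get("matchLabels", {}).items():
        if labels.get(key) != value:
            return False
    return all(
        matches_expression(labels, expr)
        for expr in selector.get("matchExpressions", [])
    )


if __name__ == "__main__":
    for name in ["red-namespace", "blue-namespace", "green-namespace"]:
        labels = {"kubernetes.io/metadata.name": name}
        print(name, namespace_selected(labels, HAPPY_SELECTOR))
```

Because the selector is a regular label selector, you could equally select namespaces by a custom label of your own instead of listing their names.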

While this scenario is configured by using a different CRD, the entire feature set available for the UserDefinedNetwork CRD (which configures the namespace isolation use case) is also available for the interconnected namespaces isolation scenario. East-west connectivity, egress to the internet, seamless live-migration, and network policy integration are therefore all possible for this use case as well.

We will showcase the Service integration using the ClusterUserDefinedNetwork CRD (but the same feature is available for the namespace isolation use cases, which is configured via the UDN CRD).

Services

You can expose a VM attached to a UDN (or cUDN) using a Kubernetes Service. A common use case is to expose an application running in a VM to the outside world.

Let's create a VM that will run an HTTP server. Provision the following YAML:

---
apiVersion: kubevirt.io/v1
kind: VirtualMachine
metadata:
  labels:
    kubevirt.io/vm: red
  name: red
  namespace: red-namespace
spec:
  runStrategy: Always
  template:
    metadata:
      labels:
        app.kubernetes.io/name: nginx
    spec:
      domain:
        devices:
          disks:
          - disk:
              bus: virtio
            name: containerdisk
          - disk:
              bus: virtio
            name: cloudinitdisk
          interfaces:
          - name: happy
            binding:
              name: l2bridge
          rng: {}
        resources:
          requests:
            memory: 2048M
      networks:
      - pod: {}
        name: happy
      terminationGracePeriodSeconds: 0
      volumes:
      - containerDisk:
          image: quay.io/kubevirt/fedora-with-test-tooling-container-disk:v1.4.0
        name: containerdisk
      - cloudInitNoCloud:
          userData: |-
            #cloud-config
            password: fedora
            chpasswd: { expire: False }
            packages:
            - nginx
            runcmd:
            - [ "systemctl", "enable", "--now", "nginx" ]
        name: cloudinitdisk

This VM will be running nginx, which by default runs a web server on port 80. Let’s now provision a load balancer service, which will expose this port outside the cluster. For that, provision the following YAML:

apiVersion: v1
kind: Service
metadata:
  name: webapp
  namespace: red-namespace
spec:
  selector:
    app.kubernetes.io/name: nginx
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: LoadBalancer

We can now check the state of the service:

kubectl get service -nred-namespace webapp -oyaml
apiVersion: v1
kind: Service
metadata:
  ...
  name: webapp
  namespace: red-namespace
  resourceVersion: "588976"
  uid: 9d8a723f-bd79-48e5-95e7-3e235c023314
spec:
  allocateLoadBalancerNodePorts: true
  clusterIP: 172.30.162.106
  clusterIPs:
  - 172.30.162.106
  externalTrafficPolicy: Cluster
  internalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - nodePort: 30600
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app.kubernetes.io/name: nginx
  sessionAffinity: None
  type: LoadBalancer
status:
  loadBalancer:
    ingress:
    - ip: 192.168.10.0
      ipMode: VIP

# let's ensure we have endpoints ...
kubectl get endpoints -nred-namespace
NAME     ENDPOINTS        AGE
webapp   10.132.2.66:80   16m

From the service status, you can now figure out which IP address to use to access the exposed VM.
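If you prefer to extract that address in a script, a minimal sketch could look like the following. It assumes you saved the output of `kubectl get service -nred-namespace webapp -ojson` (JSON rather than YAML, so that only the Python standard library is needed). The helper name is hypothetical, and the sample document is trimmed to the fields the helper reads.

```python
import json

# Trimmed sample of the Service object, limited to the status fields used.
SAMPLE_SERVICE = """
{
  "kind": "Service",
  "metadata": {"name": "webapp", "namespace": "red-namespace"},
  "status": {
    "loadBalancer": {
      "ingress": [{"ip": "192.168.10.0", "ipMode": "VIP"}]
    }
  }
}
"""


def load_balancer_addresses(service_json: str) -> list[str]:
    """Return the external IPs or hostnames assigned to a LoadBalancer Service.

    An empty list means no address has been assigned (yet).
    """
    service = json.loads(service_json)
    ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    addresses = []
    for item in ingress:
        address = item.get("ip") or item.get("hostname")
        if address:
            addresses.append(address)
    return addresses


if __name__ == "__main__":
    print(load_balancer_addresses(SAMPLE_SERVICE))
```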

Let’s access it from outside the cluster:

curl -I 192.168.10.0
HTTP/1.1 200 OK
Server: nginx/1.22.1
Date: Tue, 11 Mar 2025 18:09:12 GMT
Content-Type: text/html
Content-Length: 8474
Last-Modified: Fri, 26 Mar 2021 17:49:58 GMT
Connection: keep-alive
ETag: "605e1ec6-211a"
Accept-Ranges: bytes

Conclusion

In this article, we introduced the primary UDN feature and explained how it can be used to provide native network segmentation for virtualization workloads in OpenShift clusters, either to achieve namespace isolation or to interconnect selected namespaces.

Furthermore, we have shown how UDNs integrate natively with the Kubernetes API, allowing the user to expose workloads attached to UDNs using Kubernetes services, and to apply micro-segmentation within a UDN through network policies that further restrict the traffic allowed in it.
