Configuring Kubernetes is an exercise in defining objects in YAML files. While not required, it is nice to have an editor that can at least understand YAML, and it's even better if it knows the Kubernetes language. Kubernetes YAML is descriptive and powerful. We love the modeling of the desired state in a declarative language. That said, if you are used to something simple like podman run, the transition to YAML descriptions can be a bitter pill to swallow.

As the development of Podman has continued, we have had more discussions focused on developer use cases and developer workflows. These conversations are fueled by user feedback on our various principles, and it seems clear that the proliferation of container runtimes and technologies has some users scratching their heads. One of these recent conversations was centered around orchestration and specifically, local orchestration. Then Scott McCarty tossed out an idea: “What I would really like to do is help users get from Podman to orchestrating their containers with Kubernetes.” And just like that, the proverbial light bulb went on.

A recent pull request to libpod has started to deliver on that very idea. Read on to learn more.

Podman can now capture the description of local pods and containers and then help users transition to a more sophisticated orchestration environment like Kubernetes. I think Podman is the first container runtime to take this scenario seriously and not depend on third-party tools.

When considering how to implement something like this, we considered the following developer and user workflow:

- Create containers/pods locally using Podman on the command line.

- Verify these containers/pods locally or in a localized container runtime (on a different physical machine).

- Snapshot the container and pod descriptions using Podman and help users re-create them in Kubernetes.

- Users add sophistication and orchestration (where Podman cannot) to the snapshot descriptions and leverage advanced functions of Kubernetes.

Enough teasing; show me the goods

At this point, it probably will make more sense to see a quick demo. First, I will create a simple Podman container running nginx and binding the host’s port 8000 to the container’s port 80.

    $ sudo podman run -dt -p 8000:80 --name demo quay.io/libpod/alpine_nginx:latest
    51e14356dc3f2baad3acc0706cbdb9bffa3cba8c2064ef7db9f8061c77db2ae6
    $ sudo podman ps
    CONTAINER ID   IMAGE                                COMMAND                CREATED         STATUS             PORTS                  NAMES
    51e14356dc3f   quay.io/libpod/alpine_nginx:latest   nginx -g daemon o...   4 seconds ago   Up 4 seconds ago   0.0.0.0:8000->80/tcp   demo
    $ curl http://localhost:8000
    podman rulez

As you can see, we were able to successfully curl the bound port and get a response from the nginx container. Now, we can perform the snapshot of the container, which will generate Kubernetes YAML. Because we are testing this on the network, we will also ask Podman to generate a service file.

$ sudo podman generate kube -s demo > demo.yml

Once we have the YAML file, we can then re-create the containers/pods in Kubernetes:

    $ sudo kubectl create -f demo.yml
    pod/demo-libpod created
    service/demo-libpod created

Now we can check the results and see if “podman rulez.”

    $ sudo kubectl get pods
    NAME          READY   STATUS    RESTARTS   AGE
    demo-libpod   1/1     Running   0          27s
    $ sudo kubectl get svc
    NAME          TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)        AGE
    demo-libpod   NodePort    10.96.185.205   <none>        80:31393/TCP   24s
    kubernetes    ClusterIP   10.96.0.1       <none>        443/TCP        8m17s
    $ curl http://192.168.122.123:31393
    podman rulez

Podman’s role as a container engine

When bouncing this idea off of folks and during early development, we really needed to articulate Podman’s role. There were concerns of scope creep and conversations about where Podman “ends” and Kubernetes starts. Most importantly, we did not want to confuse developers by blurring the lines. We kept leaning on the use case that developers should use Podman to develop container content locally and then “lift” that to a Kubernetes environment where greater control of orchestration could be applied.

In general, these are the rules that apply to this use case based on where the Podman code and features stand today:

- All generated descriptions of a Podman container end up “wrapped” in a Kubernetes v1 Pod object.

- All generated descriptions of a Podman pod that contains containers also result in a Kubernetes v1 Pod object.

- NodePort is used in the service file generation as a way to expose services to the network. A random port is generated during this process.
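These rules can be spot-checked against a generated file. The following is a minimal sketch and not part of Podman: `kube_kinds` and `has_nodeport_service` are hypothetical helpers, and the sample file is an abbreviated, hand-written stand-in rather than real `podman generate kube` output.

```shell
# Hypothetical helper: print the top-level "kind:" of every object in a YAML file.
# Top-level keys start in column 0, so nested "kind:" keys are ignored.
kube_kinds() {
    sed -n 's/^kind:[[:space:]]*//p' "$1"
}

# Hypothetical helper: succeed only if the file holds a Service of type NodePort.
has_nodeport_service() {
    grep -q '^kind:[[:space:]]*Service' "$1" &&
        grep -q '^[[:space:]]*type:[[:space:]]*NodePort' "$1"
}

# Hand-written stand-in for a generated file (abbreviated, not real output).
sample=$(mktemp)
cat > "$sample" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: demo-libpod
---
apiVersion: v1
kind: Service
metadata:
  name: demo-libpod
spec:
  type: NodePort
EOF

kube_kinds "$sample"
has_nodeport_service "$sample" && echo "NodePort service found"
```

Pointing the two helpers at a real file such as demo.yml should show whether Podman emitted only a Pod or a Pod plus a Service.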

It should also be noted that Podman can describe containers with a lot more granularity than Kubernetes. That results in containers being a bit stripped down when their YAML is generated. Moreover, because Podman and the Kubernetes environment are not actually connected, the generation of the YAML cannot be specific for some things like IP addresses. Earlier, I described that we use NodePort to expose services in the Kubernetes environment. We obviously do not check that the random port is actually available and this is, in spirit, no different than creating the Kubernetes YAML by hand.

A more in-depth example

I recently created a multi-container demo that uses a database, a database client, and a web front end. The idea was to show how multiple containers can work in unison. It uses three images that are also available to you:

- quay.io/baude/demodb:latest -- MariaDB server

- quay.io/baude/demogen:latest -- Generates random numbers and adds them to the DB

- quay.io/baude/demoweb:latest -- Web front end that graphs random numbers

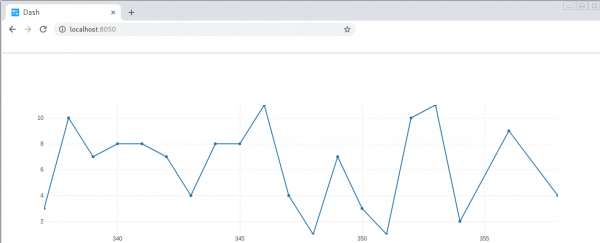

The workflow for these containers is that the demogen container inserts random numbers into the MariaDB server. And then, users can view a live graphing of these numbers with a browser while connecting to the web front-end container.

Running the demo with Podman

Before we can generate Kubernetes YAML, we need to get the demo up and running with Podman. In this case, I will run each "component" in its own pod. First, we run the MariaDB pod; I will use podman container runlabel for simplicity. The runlabel sub-command allows Podman to execute a predefined command embedded in a container image label, which is often easier than typing out lengthy command-line options. When the runlabel command is executed, you will see the command that it actually runs.

    $ sudo podman container runlabel -p pod quay.io/baude/demodb:latest
    Command: /proc/self/exe run --pod new:demodb -P -e MYSQL_ROOT_PASSWORD=x -dt quay.io/baude/demodb:latest
    6f451c893e0082960f699967c7188e7dedab98f249600bcb9ccfe0f54368602f
    $ sudo podman container runlabel -p pod quay.io/baude/demogen:latest
    Command: /proc/self/exe run -dt --pod new:demogen quay.io/baude/demogen:latest python3 generatenums.py
    97a8f008686784def0c19bd4314e71bc26209f647d9ea7222940f04fb9eac1c0
    $ sudo podman container runlabel -p pod quay.io/baude/demoweb:latest
    Command: /proc/self/exe run --pod new:demoweb -dt -p 8050:8050 quay.io/baude/demoweb:latest
    5f3096dc54bb6311d75aa604be10e30b6dd804d5bf04816bb85d2368d95274eb

We now have three pods named demodb, demogen, and demoweb. Each pod contains its “worker” container and an “infra” container.

    $ sudo podman pod ps
    POD ID         NAME      STATUS    CREATED         # OF CONTAINERS   INFRA ID
    5879b590d43e   demoweb   Running   4 minutes ago   2                 a08da7cc037e
    50d18daf6438   demogen   Running   4 minutes ago   2                 7d8ff6ee1c8a
    5aed9c3bbbd5   demodb    Running   4 minutes ago   2                 000140e3ebc3

Keep in mind that with Podman, port assignments, cgroups, etc. are assigned to the “infra” container and inherited pod-wide by all the pod’s containers. Also remember that podman ps does not show the “infra” containers by default; that requires the --infra command-line switch.

    $ sudo podman ps -p
    CONTAINER ID   IMAGE                          COMMAND                CREATED         STATUS             PORTS   NAMES               POD
    5f3096dc54bb   quay.io/baude/demoweb:latest   python3 /root/cod...   4 minutes ago   Up 4 minutes ago           jovial_joliot       5879b590d43e
    97a8f0086867   quay.io/baude/demogen:latest   python3 /root/cod...   4 minutes ago   Up 4 minutes ago           optimistic_joliot   50d18daf6438
    6f451c893e00   quay.io/baude/demodb:latest    docker-entrypoint...   4 minutes ago   Up 4 minutes ago           boring_khorana      5aed9c3bbbd5

And if you use your web browser, you can connect to the web client and see a live plotting of the random numbers in the database. Assuming you used the podman container runlabel, the web client is now bound to the host’s 8050 port and can be resolved at http://localhost:8050.

Testing Kubernetes

For testing Kubernetes, I have been using minikube, libvirt, and CRI-O on Fedora 29. For brevity, I won’t go deep into the setup, but the following are the command-line options I use to start minikube.

$ sudo minikube start --network-plugin=cni --container-runtime=cri-o --vm-driver kvm2 --kubernetes-version v1.12.0

Note: Some users have had to add --cri-socket=/var/run/crio/crio.sock to avoid Docker usage with minikube.

Generating Kubernetes YAML from the existing Podman pods

To generate Kubernetes YAML files from a Podman pod (or container outside a pod), we use the recently added podman generate kube command. Note the singular option to create a service.

    $ sudo podman generate kube --help
    NAME:
       podman generate kube - Generate Kubernetes pod YAML for a container or pod
    USAGE:
       podman generate kube [command options] CONTAINER|POD-NAME
    DESCRIPTION:
       Generate Kubernetes Pod YAML
    OPTIONS:
       --service, -s  only generate YAML for kubernetes service object

To run our demo in Kubernetes, we need to generate Kubernetes YAML for each pod. Let's generate YAML for the demodb pod first.

    $ sudo podman generate kube demodb
    # Generation of Kubernetes YAML is still under development!
    #
    # Save the output of this file and use kubectl create -f to import
    # it into Kubernetes.
    #
    # Created with podman-0.12.2-dev
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: 2018-12-11T15:24:09Z
      labels:
        app: demodb
      name: demodb
    spec:
      containers:
      - command:
        - docker-entrypoint.sh
        - mysqld
        env:
        - name: PATH
          value: /usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
        - name: TERM
          value: xterm
        - name: HOSTNAME
        - name: container
          value: podman
        - name: GOSU_VERSION
          value: "1.10"
        - name: GPG_KEYS
          value: "199369E5404BD5FC7D2FE43BCBCB082A1BB943DB \t177F4010FE56CA3336300305F1656F24C74CD1D8
            \t430BDF5C56E7C94E848EE60C1C4CBDCDCD2EFD2A \t4D1BB29D63D98E422B2113B19334A25F8507EFA5"
        - name: MARIADB_MAJOR
          value: "10.3"
        - name: MARIADB_VERSION
          value: 1:10.3.11+maria~bionic
        - name: MYSQL_ROOT_PASSWORD
          value: x
        image: quay.io/baude/demodb:latest
        name: boringkhorana
        ports:
        - containerPort: 3306
          hostPort: 38967
          protocol: TCP
        resources: {}
        securityContext:
          allowPrivilegeEscalation: true
          privileged: false
          readOnlyRootFilesystem: false
        tty: true
        workingDir: /
    status: {}

As you can see, we generated a Kubernetes pod with a single container in it. That container runs the quay.io/baude/demodb:latest image and retains the same default command. Let’s now create YAML for each pod and pipe the results to a file for use later.

    $ sudo podman generate kube demodb > /tmp/kube/demodb.yml
    $ sudo podman generate kube demogen > /tmp/kube/demogen.yml
    $ sudo podman generate kube demoweb -s > /tmp/kube/demoweb.yml

Given that we will want to interact with the demoweb pod and see the live graphing, we are going to generate a service for the demoweb pod. This will expose the demoweb pod to the network using Kubernetes NodePort.
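When there are more than a few pods, the same step can be wrapped in a small function. This is only a sketch: `generate_pod_yaml`, `PODMAN`, and `OUTDIR` are illustrative names and not Podman settings. Extra options are placed before the pod name, following the USAGE line printed by `--help`.

```shell
# Assumption: PODMAN and OUTDIR are illustrative variables for this sketch.
PODMAN="${PODMAN:-sudo podman}"
OUTDIR="${OUTDIR:-/tmp/kube}"

# generate_pod_yaml POD [generate-kube options...]
# Writes the generated YAML for POD to $OUTDIR/POD.yml.
generate_pod_yaml() {
    pod="$1"
    shift
    mkdir -p "$OUTDIR" || return 1
    # $PODMAN is unquoted on purpose so "sudo podman" splits into two words.
    $PODMAN generate kube "$@" "$pod" > "$OUTDIR/$pod.yml"
}

# Example calls matching the three pods in this demo:
#   generate_pod_yaml demodb
#   generate_pod_yaml demogen
#   generate_pod_yaml demoweb -s
```

If the generate step fails, an empty or partial file may be left behind, so checking the function's exit status before using the file is a reasonable precaution.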

Creating the pods and service in Kubernetes

We have captured each Podman pod as YAML and we have a service description too. We can use these files to create Kubernetes pods using the kubectl command.

    $ sudo kubectl create -f /tmp/kube/demodb.yml
    pod/demodb created

I like for the MariaDB container to be running before creating the rest of the objects.

    $ sudo kubectl get pods
    NAME     READY   STATUS    RESTARTS   AGE
    demodb   1/1     Running   0          44s
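Checking by eye works for a demo; in a script, the wait could be automated by polling the pod's phase. The sketch below assumes illustrative names (`wait_for_running`, `KUBECTL`, `POLL_INTERVAL`). Note that a `Running` phase only means the container has started, not that MariaDB is already accepting connections.

```shell
# Assumption: KUBECTL and POLL_INTERVAL are illustrative variables for this sketch.
KUBECTL="${KUBECTL:-sudo kubectl}"

# wait_for_running POD [ATTEMPTS]
# Polls the pod's phase until it is "Running"; gives up after ATTEMPTS tries.
wait_for_running() {
    pod="$1"
    attempts="${2:-30}"
    while [ "$attempts" -gt 0 ]; do
        phase=$($KUBECTL get pod "$pod" -o jsonpath='{.status.phase}' 2>/dev/null)
        if [ "$phase" = "Running" ]; then
            return 0
        fi
        attempts=$((attempts - 1))
        sleep "${POLL_INTERVAL:-2}"
    done
    echo "pod $pod did not reach Running" >&2
    return 1
}

# Example: wait_for_running demodb && echo "demodb is up"
```

Depending on the kubectl version in use, `kubectl wait --for=condition=Ready pod/demodb` may be a simpler alternative.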

Now we can create the rest of the objects including the service.

    $ sudo kubectl create -f /tmp/kube/demogen.yml
    pod/demogen created
    $ sudo kubectl create -f /tmp/kube/demoweb.yml
    pod/demoweb created
    service/demoweb created

And we can now see we have three pods running.

    $ sudo kubectl get pods
    NAME      READY   STATUS    RESTARTS   AGE
    demodb    1/1     Running   0          2m29s
    demogen   1/1     Running   0          61s
    demoweb   1/1     Running   0          42s

To connect to the live graph, we need to get the Node address for the demoweb pod and the service’s NodePort assignment.

    $ sudo kubectl describe pod demoweb | grep Node:
    Node: minikube/192.168.122.240
    $ sudo kubectl get svc
    NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE
    demoweb      NodePort    10.99.237.80   <none>        8050:32272/TCP   12m
    kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP          23m

The demoweb client can be accessed via http://192.168.122.240:32272.
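The URL is simply the node address joined with the second number in the PORT(S) column. As a sketch of that arithmetic, the hypothetical helpers below (none of these names come from Podman or kubectl) assemble it from the values shown above.

```shell
# nodeport_from_ports "8050:32272/TCP" prints the NodePort half of PORT(S).
nodeport_from_ports() {
    ports="${1#*:}"        # drop the service port and colon ("8050:")
    echo "${ports%%/*}"    # drop the protocol suffix ("/TCP")
}

# node_ip_from_describe reads `kubectl describe pod` output on stdin and
# prints the address from the "Node:" line ("minikube/192.168.122.240").
node_ip_from_describe() {
    awk '$1 == "Node:" { split($2, parts, "/"); print parts[2]; exit }'
}

# service_url NODE_IP PORTS_COLUMN prints the URL to open in a browser.
service_url() {
    echo "http://$1:$(nodeport_from_ports "$2")"
}

service_url 192.168.122.240 8050:32272/TCP
# prints http://192.168.122.240:32272
```

In practice the two inputs would come from the kubectl commands shown above, for example by piping `kubectl describe pod demoweb` into `node_ip_from_describe`.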

Local replay with Kubernetes YAML

You can now also “play” a Kubernetes YAML file in Podman. We only support running YAML that Podman has generated. This is in part because we cannot realistically implement the entire Kubernetes stack. Nevertheless, this covers a couple of interesting use cases.

The first use case is having the ability to re-run a locally orchestrated set of containers and pods with minimal user input required. Here, we were thinking about container developers being able to repeat a previous container run consistently for things like iterative development. Within the same context, you could also theoretically be working on one machine and wish to run the same containers and pods on another. The ability to re-"play" using Kubernetes YAML solves both. Enter podman play kube:

    $ sudo podman play kube --help
    NAME:
       podman play kube - Play a pod based on Kubernetes YAML
    USAGE:
       podman play kube [command options] kubernetes YAML file
    DESCRIPTION:
       Play a Pod and its containers based on a Kubrernetes YAML
    OPTIONS:
       --authfile value             Path of the authentication file. Default is ${XDG_RUNTIME_DIR}/containers/auth.json. Use REGISTRY_AUTH_FILE environment variable to override.
       --cert-dir pathname          pathname of a directory containing TLS certificates and keys
       --creds credentials          credentials (USERNAME:PASSWORD) to use for authenticating to a registry
       --quiet, -q                  Suppress output information when pulling images
       --signature-policy pathname  pathname of signature policy file (not usually used)
       --tls-verify                 require HTTPS and verify certificates when contacting registries (default: true)

Suppose we cleaned up after the previous runs of our example application from earlier and we have no existing pods or containers defined. We could re-create our previous running application with three simple commands using the Kubernetes YAML we generated earlier and the podman play kube command. First, we verify that no pods are running:

$ sudo podman pod ps

And now using the podman play kube command, we can deploy pods based on our Kubernetes YAML.

    $ sudo podman play kube /tmp/kube/demodb.yml
    2ff8097fcdc69e4a272a6057ec050a5cb1b872340c31098f23d2e0074a098dbc
    4d0e218297156f6d59554ec395ca4651b08d69ab7ca3935ca9f4b2de737cdc14
    $ sudo podman play kube /tmp/kube/demogen.yml
    560e4e44e0ae9e43a965ab8da332f3ed89fed1702b4fd14072a5f58a4f133541
    bc8c180bfb4616c981f3d1a4c54e1c62ec1a19fb58eacc58040d25a825cbe4e5
    $ sudo podman play kube /tmp/kube/demoweb.yml
    ae47ad61e0c5a156dd8dbf4d18700138d5de4693eec63c29835daf765399faac
    f01152a2190da8d54f4090eaf75df54faf74df933404ff7fe38026be7f7f483b

    $ sudo podman pod ps
    POD ID         NAME      STATUS    CREATED         # OF CONTAINERS   INFRA ID
    ae47ad61e0c5   demoweb   Running   4 minutes ago   2                 57267bfdbaec
    560e4e44e0ae   demogen   Running   4 minutes ago   2                 92b74e53d6d2
    2ff8097fcdc6   demodb    Running   4 minutes ago   2                 eeaf676e115e
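For repeated local runs, the three play commands could be folded into a loop over the saved files. This is a sketch under assumptions: `play_all` and `PODMAN` are illustrative names, and it relies on the shell expanding the glob in sorted order, which for this demo happens to start demodb before the other two pods.

```shell
# Assumption: PODMAN is an illustrative variable for this sketch.
PODMAN="${PODMAN:-sudo podman}"

# play_all DIR
# Replays every *.yml file in DIR, stopping at the first failure.
play_all() {
    for file in "$1"/*.yml; do
        # If the glob matched nothing, the literal pattern is left in place.
        if [ ! -e "$file" ]; then
            echo "no .yml files in $1" >&2
            return 1
        fi
        $PODMAN play kube "$file" || return 1
    done
}

# Example: play_all /tmp/kube
```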

We have now re-created our containers and pods as they were before, which leads us to another test case. Suppose we want to increase the number of random numbers being generated into the database container by adding two additional containers that generate random numbers. We can do this by running them in the existing demogen pod:

    $ sudo podman run -dt --pod demogen quay.io/baude/demogen:latest python3 generatenums.py
    a36adcc653566b130158738f06b1934bee28d0817b4fc1fd7b6d66296c989335
    $ sudo podman run -dt --pod demogen quay.io/baude/demogen:latest python3 generatenums.py
    8e2b28266595e8c2e46fc872ae3638985f0ce65d0c12b5a4c28359d2cc543ef7

    $ sudo podman pod ps
    POD ID         NAME      STATUS    CREATED         # OF CONTAINERS   INFRA ID
    ae47ad61e0c5   demoweb   Running   8 minutes ago   2                 57267bfdbaec
    560e4e44e0ae   demogen   Running   8 minutes ago   4                 92b74e53d6d2
    2ff8097fcdc6   demodb    Running   8 minutes ago   2                 eeaf676e115e

As you can see, we have added two additional containers to the demogen pod. If we wanted to test this in a Kubernetes environment, we could simply rerun podman generate kube and regenerate the Kubernetes YAML and redeploy that description in our minikube environment.
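That regenerate-and-redeploy loop might look like the following sketch. The names `redeploy_pod`, `PODMAN`, and `KUBECTL` are illustrative, and the sketch assumes the regenerated pod keeps the name demogen. Because containers cannot be added to an existing Kubernetes pod in place, the old pod is deleted before the new description is created.

```shell
# Assumption: PODMAN and KUBECTL are illustrative variables for this sketch.
PODMAN="${PODMAN:-sudo podman}"
KUBECTL="${KUBECTL:-sudo kubectl}"

# redeploy_pod POD FILE
# Regenerates the YAML for POD into FILE, then replaces the pod in Kubernetes.
redeploy_pod() {
    pod="$1"
    file="$2"
    $PODMAN generate kube "$pod" > "$file" || return 1
    # --ignore-not-found keeps the delete from failing on a first deployment.
    $KUBECTL delete pod "$pod" --ignore-not-found || return 1
    $KUBECTL create -f "$file"
}

# Example: redeploy_pod demogen /tmp/kube/demogen.yml
```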

Wrap-up

As the generated YAML we saw earlier stated, the ability to generate Kubernetes YAML from Podman is still very much under development, and so is the ability to replay that same YAML. Specifically, certain things like SELinux have not been incorporated yet. But the current functionality should start to give Podman users ways to run, rerun, and save their development environments and, more importantly, help them run those environments in a Kubernetes-orchestrated environment.

More about Podman on the Red Hat Developer Blog

- Podman: Managing pods and containers in a local container runtime

- Managing containerized system services with Podman

- Containers without daemons: Podman and Buildah available in RHEL 7.6 and RHEL 8 Beta

- Podman – The next generation of Linux container tools

- Intro to Podman (New in Red Hat Enterprise Linux 7.6)

To get involved and see how Podman is evolving, be sure to check out the project page and podman.io.

Last updated: May 30, 2023