Welcome back to this ongoing series of posts about using Large Language Models (LLMs) with Node.js. This post concludes the series by showing how the previous posts come together to illustrate how AI can be integrated into an existing claims management system for an insurance company.

Before we start, here is what the series looks like up to this point:

Experimenting with a Large Language Model powered Chatbot with Node.js

Improving Chatbot result with Retrieval Augmented Generation (RAG) and Node.js

Chatbot, I Choose You….. to call a function

Application Overview Review

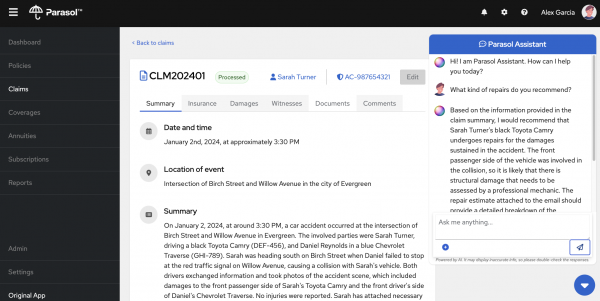

The application that was used to demonstrate all the features was a very basic claims management system. It is similar to something that an insurance company might use to process their insured's claims.

The application front end was written in React and the back end in Node.js, using the Fastify framework for the REST APIs and langchain.js as the interface between the application and the Large Language Models (LLMs).

Adding a chatbot

As mentioned in the first post, a chatbot was built so that users of the application could ask questions about a claim and better serve their customers. The user could ask something like “What happened here?” and the chatbot would give a succinct summary of that claim.

Throughout that post, we explored some of the basic building blocks of AI applications: connecting to models, constructing prompts, and creating chains. We also added the ability for the chatbot to store the message history using methods from the langchain.js framework. This let the chatbot hold a more natural conversation, since it had the context of previously asked questions.
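
To make the message history idea concrete, here is a minimal, dependency-free sketch. It does not use the langchain.js API; `fakeModel` and `createChat` are hypothetical names invented for illustration, and the stand-in model is synchronous only to keep the example short (a real model call would be asynchronous).

```javascript
// Hypothetical stand-in for a real model call. It only reports how much
// context it was given, so the effect of the history is visible.
function fakeModel(messages) {
  const last = messages[messages.length - 1];
  return `(${messages.length} messages in context) You asked: ${last.content}`;
}

// Build a chat function that keeps the conversation in memory.
function createChat(systemPrompt, model) {
  const history = [{ role: 'system', content: systemPrompt }];

  return function ask(question) {
    history.push({ role: 'user', content: question });
    // Pass a copy of the whole history so the model sees earlier turns.
    const answer = model([...history]);
    history.push({ role: 'assistant', content: answer });
    return answer;
  };
}

const ask = createChat('You answer questions about an insurance claim.', fakeModel);
const first = ask('What happened here?');
const second = ask('When was it reported?');
console.log(first);
console.log(second);
```

The second call sees four messages instead of two, which is what allows a follow-up question to refer back to an earlier one.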

Email Generation and Output Parsers

The next post in the series added the ability to generate email summaries that could potentially be sent out to a customer. However, generating summaries wasn’t the only concept covered in that post.

We learned how output parsers can be used to get the response from the LLM in a specific format. Our web UI expected the response to be JSON. By using langchain.js’s output parsers, along with a schema defined with the popular Zod schema library and sent to the model, we were able to get output in the format we wanted.
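
The idea behind an output parser can be sketched in plain JavaScript. This is not the langchain.js or Zod API; the `emailSchema` shape, `formatInstructions`, and `parseOutput` are assumptions made up for this example, and the validation is far simpler than what a real schema library provides.

```javascript
// Hypothetical shape for the email summary the UI expects.
const emailSchema = { subject: 'string', message: 'string' };

// Text appended to the prompt so the model knows the expected format.
function formatInstructions(schema) {
  const fields = Object.entries(schema)
    .map(([name, type]) => `"${name}": <${type}>`)
    .join(', ');
  return `Respond only with a JSON object of the form {${fields}}.`;
}

// Take the text between the first '{' and the last '}', parse it as JSON,
// and check that every field in the schema has the expected type.
function parseOutput(text, schema) {
  const start = text.indexOf('{');
  const end = text.lastIndexOf('}');
  if (start === -1 || end <= start) {
    throw new Error('No JSON object found in model output');
  }
  const parsed = JSON.parse(text.slice(start, end + 1));
  for (const [name, type] of Object.entries(schema)) {
    if (typeof parsed[name] !== type) {
      throw new Error(`Field "${name}" should be a ${type}`);
    }
  }
  return parsed;
}

// Models often wrap JSON in extra chatter; the parser tolerates that.
const rawReply = 'Sure! {"subject": "Your claim update", "message": "We received your claim."}';
const email = parseOutput(rawReply, emailSchema);
console.log(formatInstructions(emailSchema));
console.log(email.subject);
```

The two halves work together: the instructions tell the model what to produce, and the parser rejects anything that does not match before it reaches the UI.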

Retrieval Augmented Generation

In the third post, we gave our chatbot extra knowledge without having to retrain the model it was using. This concept is called Retrieval Augmented Generation, or RAG. Each insured might have different information in their policy, and retraining models can be expensive. Policy data is also very sensitive, so storing that information in a model might not be possible. Being able to add more context from an external resource can therefore be very handy.

As the article stated, there are two main concepts in RAG, indexing and retrieval, and we saw how each of them could be added to our chatbot code to give it a little more context when answering questions.

In the example, we wanted the chatbot to be able to answer questions about an insurance policy that was stored in a PDF. We saw that the langchain.js framework made it easy to load the PDF. We used an in-memory vector store to index it, and then created a retriever that could easily be added to our existing chain.
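
The indexing and retrieval steps can be illustrated with a toy, dependency-free sketch. It is not the langchain.js vector store: the bag-of-words `embed` function stands in for a real embedding model, the policy text is made up, and all function names are hypothetical.

```javascript
// Toy "embedding": a bag-of-words count map. A real system would call an
// embedding model; this only keeps the sketch dependency-free.
function embed(text) {
  const counts = new Map();
  for (const word of text.toLowerCase().match(/[a-z0-9]+/g) ?? []) {
    counts.set(word, (counts.get(word) ?? 0) + 1);
  }
  return counts;
}

function cosineSimilarity(a, b) {
  let dot = 0;
  for (const [word, count] of a) dot += count * (b.get(word) ?? 0);
  const norm = (v) => Math.sqrt([...v.values()].reduce((sum, c) => sum + c * c, 0));
  const denom = norm(a) * norm(b);
  return denom === 0 ? 0 : dot / denom;
}

// Indexing: store each chunk next to its vector.
function buildIndex(chunks) {
  return chunks.map((text) => ({ text, vector: embed(text) }));
}

// Retrieval: rank chunks by similarity to the question and keep the top k.
function retrieve(index, question, k = 1) {
  const q = embed(question);
  return index
    .map((entry) => ({ text: entry.text, score: cosineSimilarity(q, entry.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((entry) => entry.text);
}

// Made-up policy text standing in for chunks loaded from the PDF.
const policyChunks = [
  'Rental car coverage is limited to 30 days per claim.',
  'Windshield repair has no deductible.',
  'Claims must be reported within 60 days of the incident.',
];

const index = buildIndex(policyChunks);
const question = 'Is there a deductible for windshield repair?';
const context = retrieve(index, question);
const prompt = `Answer using this policy excerpt:\n${context.join('\n')}\n\nQuestion: ${question}`;
console.log(prompt);
```

Only the retrieved chunk is added to the prompt, so the model gets policy-specific context at question time without that data ever being part of its training.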

Tools/Functions

The last post in the series gave a brief overview of the concept of having our LLM call a function when responding to a request.

The chatbot was updated so that when a user said something like “Update this claim's status to approved”, our LLM would parse that request, see that we had created a function called “UpdateClaimStatus”, and call it. This function would then update that claim's status in the database.
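
A rough, dependency-free sketch of that flow follows. Only the function name `UpdateClaimStatus` comes from the series; the argument shape, the in-memory `claims` map, `fakeModel`, and `handle` are assumptions for illustration, and a simple pattern match stands in for the LLM deciding to call a tool.

```javascript
// In-memory stand-in for the claims database (made-up data).
const claims = new Map([[1, { id: 1, status: 'pending' }]]);

// Tools the model is allowed to call, keyed by name. The description is
// what a model would be shown when deciding which tool fits the request.
const tools = {
  UpdateClaimStatus: {
    description: 'Update the status of a claim',
    run({ claimId, status }) {
      const claim = claims.get(claimId);
      if (!claim) throw new Error(`Unknown claim ${claimId}`);
      claim.status = status;
      return `Claim ${claimId} is now ${status}`;
    },
  },
};

// Hypothetical model: a real LLM would produce this structured request
// from the user's text; here a pattern match approximates that decision.
function fakeModel(userText, claimId) {
  if (/approved/i.test(userText)) {
    return { tool: 'UpdateClaimStatus', args: { claimId, status: 'approved' } };
  }
  return { text: 'No tool needed.' };
}

// The application, not the model, actually executes the function.
function handle(userText, claimId) {
  const reply = fakeModel(userText, claimId);
  if (!reply.tool) return reply.text;
  const tool = tools[reply.tool];
  if (!tool) throw new Error(`Model asked for unknown tool ${reply.tool}`);
  return tool.run(reply.args);
}

const result = handle("Update this claim's status to approved", 1);
console.log(result);
```

The point to notice is the division of labor: the model only names a tool and its arguments, while the application looks the tool up and runs it, which keeps database access under the application's control.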

Conclusion

While the claims management system we added the chatbot and other features to was fictitious, it illustrates a real-world scenario in which a small amount of code can add powerful AI features that help with day-to-day work.

As always, if you want to learn more about what the Red Hat Node.js team is up to, check these out:

https://developers.redhat.com/topics/nodejs

https://developers.redhat.com/topics/nodejs/ai

https://developers.redhat.com/blog/2024/11/20/essential-ai-tutorials-nodejs-developers

https://github.com/nodeshift/nodejs-reference-architecture

https://developers.redhat.com/e-books/developers-guide-nodejs-reference-architecture