The rapid proliferation of generative AI (gen AI) and open source large language models (LLMs) is revolutionizing computing, and AI-enabled applications are becoming the norm. As a result, application developers now have to learn best practices for building applications that take advantage of AI. This creates a need for tools and technologies that make AI more accessible and approachable, enabling developers to tap into its vast potential.

However, this shift also underscores the importance of ensuring data privacy and security, as sensitive information is being processed and stored in these new AI-powered systems. To address this growing demand, it is essential to develop and deploy solutions that not only simplify AI development, but also safeguard user data, fostering trust and innovation in this emerging era of AI-driven computing.

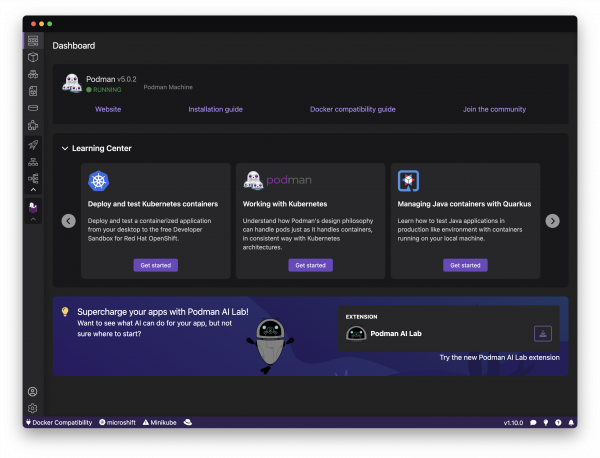

Last year, we announced the general availability of Podman Desktop 1.0, which provides a user-friendly interface for managing containers and working with Kubernetes on your desktop. Today, we are excited to announce Podman AI Lab, a dedicated extension for Podman Desktop that gives developers the ability to build, test and run gen AI-powered applications using an intuitive graphical interface running on their local workstation (Figure 1).

From getting started with AI to experimenting with models and prompts, Podman AI Lab enables developers to easily bring AI into their applications without depending on infrastructure beyond a laptop. Let’s explore what Podman AI Lab is and why it can be advantageous for the future of your applications.

Why use Podman AI Lab?

As developers, we are always using a heterogeneous set of tools to get our development environment up and properly configured. The convenience of our desktop workstations is real: it gives us fast turnaround so we can iterate quickly on our applications. In this emerging era of gen AI-infused applications, getting access to LLMs and running them is a necessity.

More importantly, our motivation is to democratize the journey to gen AI for application developers. A lot of us believe that AI/ML is primarily in the hands of data scientists. But in fact, with gen AI, AI/ML expertise is not a significant factor for integrating models into existing applications. By simplifying LLMs and gen AI for all developers, we believe everyone can seamlessly enhance existing applications with AI and create new experiences.

Running LLMs locally for development and debugging

The main advantage of running LLMs locally is that it provides an easier environment for debugging and developing AI-infused applications. You can quickly test and iterate on your code, you continue to benefit from the convenience of the different tools you are already using, and you can run the LLMs even when offline. All of these reasons make it easier to incorporate LLMs and leverage their capabilities in your applications.

Cost efficiency

When you are experimenting and just playing around with LLMs, you don’t need to light up your cloud billing account with GPU charges. Small but efficient models can take developers a long way. While there is an initial investment in hardware and setup, running models on your own machine reduces the ongoing cost of cloud computing services and mitigates vendor lock-in.

Education and training

Podman Desktop simplifies the onboarding process for developers new to containerization, providing an intuitive interface and streamlined management process that accelerates the adoption of containers and the transition to Kubernetes. Meanwhile, Podman AI Lab's recipe catalog offers sample applications showcasing common use cases for LLMs, serving as a great jump start for developers to learn from and build upon. By reviewing the source code and seeing how these applications are built, developers can gain valuable insights into best practices for wrapping their own code around AI models.

Data security and ownership

By running LLMs locally, you maintain ownership of your data and have full control over how it's processed and stored. This is particularly important when working with sensitive or proprietary information, or when regulatory compliance restricts how data can be processed. Keeping the work within your on-premises deployment and local environment helps ensure your data remains secure and confidential.

Easier transition to production

Podman AI Lab also simplifies the transition to production. With everything running on Podman, containers and pods are portable and can easily be run anywhere across the hybrid cloud using Kubernetes, Red Hat OpenShift, and Red Hat OpenShift AI.

In addition, the bootc extension, also available for Podman Desktop, provides tight integration with image mode for Red Hat Enterprise Linux, a new deployment method for the world’s leading enterprise Linux platform that delivers the operating system as a container image. This integration enables developers to more easily go from prototyping and working with models on their laptop to turning the new AI-infused application into a bootable container.

Key features

Podman AI Lab includes many features that streamline working with LLMs, so you can easily get started bringing AI into your applications.

- Discover generative AI
  - Built-in catalog of recipes (sample applications) to discover and learn best practices
  - Experiment with different compatible models

- Catalog of curated models
  - Out-of-the-box list of ready-to-use open source models
  - Import your own model files (GGUF)

- Local model serving
  - OpenAI-compatible API
  - Code snippet generation for quick integration into your applications
  - Pre-built container image, based on UBI and kept up-to-date

- Playground environments
  - Experiment and test models
  - Configure model settings and parameters

To learn more about those features, we invite you to read the getting started guide, which gives a complete overview of each of the capabilities provided.
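Because local model serving exposes an OpenAI-compatible API, an application can talk to a locally served model with a plain HTTP request. The following is a minimal sketch in Python, not the snippet Podman AI Lab generates. It assumes the service accepts the standard OpenAI chat completions request shape at `/v1/chat/completions`; the address `http://localhost:8000`, the model name `local-model`, and the helper names `build_chat_request`, `extract_reply`, and `chat` are placeholders chosen for illustration. Substitute the address and model of your own running service.

```python
import json
import urllib.request

# Assumption: replace with the address of your running model service.
BASE_URL = "http://localhost:8000/v1"  # hypothetical host and port


def build_chat_request(prompt, model="local-model", temperature=0.7, max_tokens=256):
    """Build a chat completions payload in the OpenAI request format.

    The model name is a placeholder; temperature and max_tokens are the kind
    of settings you would otherwise tune in a playground.
    """
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }


def extract_reply(response_body):
    """Return the assistant text from an OpenAI-style chat completion response."""
    return response_body["choices"][0]["message"]["content"]


def chat(prompt, base_url=BASE_URL, timeout=60):
    """Send one prompt to the local endpoint and return the model's reply."""
    payload = json.dumps(build_chat_request(prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.load(response))
```

With a model service running, calling `chat("Summarize what a container is in one sentence.")` would return the model's answer as a string. Since the request format follows the OpenAI convention, the same code should work against another OpenAI-compatible endpoint by changing only `base_url`.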

How to get started and install the extension

To install Podman AI Lab, you’ll need Podman Desktop 1.10 or later. You’ll then find the Podman AI Lab extension in the catalog of extensions (Figure 2).

Alternatively, you can install the extension from the dashboard of Podman Desktop (Figure 3).

What’s next?

We plan to keep improving Podman AI Lab and to add capabilities that help you infuse AI into your own applications, such as fine-tuning with your own data and easier integration of LLMs. We’ll soon enable running the models with GPU acceleration as well.

If you are targeting Red Hat OpenShift AI, you'll see more tools integrated with Podman Desktop and Podman AI Lab that make it smoother and more efficient to build your application locally and transition it to production.

More information

You can get a complete tour of Podman AI Lab by following the getting started guide, as well as a deep dive into the recipes provided by Podman AI Lab.

Since last year's announcement, Podman Desktop has crossed 1 million total downloads and continues its momentum. We are now expanding to help developers with AI and we are excited about the road ahead. Visit podman-desktop.io to download the tool and learn more about the project.