Over the last few months, our Node.js team has explored how to leverage large language models (LLMs) using JavaScript, TypeScript, and Node.js. With TypeScript/JavaScript often being the second language supported by frameworks used to leverage LLMs, we investigated various frameworks to see how easy they are to use and how they might affect the results we get.

One of the key topics we've examined is tool/function calling and how that works with agents. With Llama Stack being released earlier this year, we decided to look at how tool calling and agents work with that framework. We will use some of the same patterns and approaches that we have used in looking at the other frameworks and will take a look at how they compare.

We covered other frameworks and how they work with tool/function calling in previous posts:

- A quick look at MCP with large language models and Node.js

- Building agents with large language models (LLMs) and Node.js

- A quick look at tool use/function calling with Node.js and Ollama

- Diving deeper with large language models and Node.js

Set up Llama Stack

The first thing we needed to do was to get a running Llama Stack instance that we could experiment with. Llama Stack is a bit different from other frameworks in a few ways.

The first difference from other frameworks we've looked at is that instead of providing a single implementation with a set of defined APIs, it aims to standardize a set of APIs and looks to drive a number of distributions. In other words, the goal is to have many implementations of the same API, with each implementation being shipped by a different organization as a distribution. As is common when this approach is followed, a "reference distribution" is provided, but there are already a number of alternative distributions available. You can see those which are available here.

The other difference is a heavy focus on plug-in APIs that allow you to add implementations for specific components behind the API implementation itself. For example, that would allow you to plug in an implementation (maybe one that is custom tailored for your organization) for a specific feature like Telemetry while using an existing distribution. We won't go into the details of these APIs in this post but hope to look at them later on.

With that said, the first question we had was which distribution to use in order to get started. The Llama Stack quick start shows how to spin up a container running Llama Stack, which uses Ollama to serve the large language model. Since we already had a working Ollama install, we decided that was the path of least resistance.

Get it running

We followed the Llama Stack quick start using a container to run the stack with it pointing to an existing Ollama server. Following the instructions, we put together this short script that allowed us to easily start/stop the Llama Stack instance:

export INFERENCE_MODEL="meta-llama/Llama-3.1-8B-Instruct"
export LLAMA_STACK_PORT=8321
export OLLAMA_HOST=10.1.2.38
podman run -it \
  --user 1000 \
  -p $LLAMA_STACK_PORT:$LLAMA_STACK_PORT \
  -v ~/.llama:/root/.llama \
  llamastack/distribution-ollama:0.1.5 \
  --port $LLAMA_STACK_PORT \
  --env INFERENCE_MODEL=$INFERENCE_MODEL \
  --env OLLAMA_URL=http://$OLLAMA_HOST:11434

Our existing Ollama server was running on a machine with the IP 10.1.2.38, which is what we set OLLAMA_HOST to.

We wanted to use a model that was as close as possible to the models we'd used with other frameworks, which is why we chose meta-llama/Llama-3.1-8B-Instruct, which mapped to llama3.1:8b-instruct-fp16 in Ollama. Currently, Llama Stack only supports a limited set of models with Ollama, and that model was the closest we could get to the quantized 8B models that we had used previously.

Llama Stack also requires that Ollama is already running the model, so we started it with:

ollama run llama3.1:8b-instruct-fp16 --keepalive 24h

That meant that as long as we used the model once a day, it would keep running in our Ollama server.

Otherwise, we followed the instructions for starting the container from the quick start, so Llama Stack was running on the default port. We ran the container on a Fedora virtual machine with IP 10.1.2.128, so you will see us using http://10.1.2.128:8321 as the endpoint for the Llama Stack instance in our code examples.

At this point, we had a running Llama Stack instance that we could start to experiment with.

Read the docs!

While it is a good idea to read the documentation, that is not quite what we mean in this case. At the time of this writing, Llama Stack is moving fast, and we found it was not necessarily easy to find what we needed by searching. Also, the fact that the TypeScript client was auto-generated sometimes made it a bit harder to interpret the Markdown documentation.

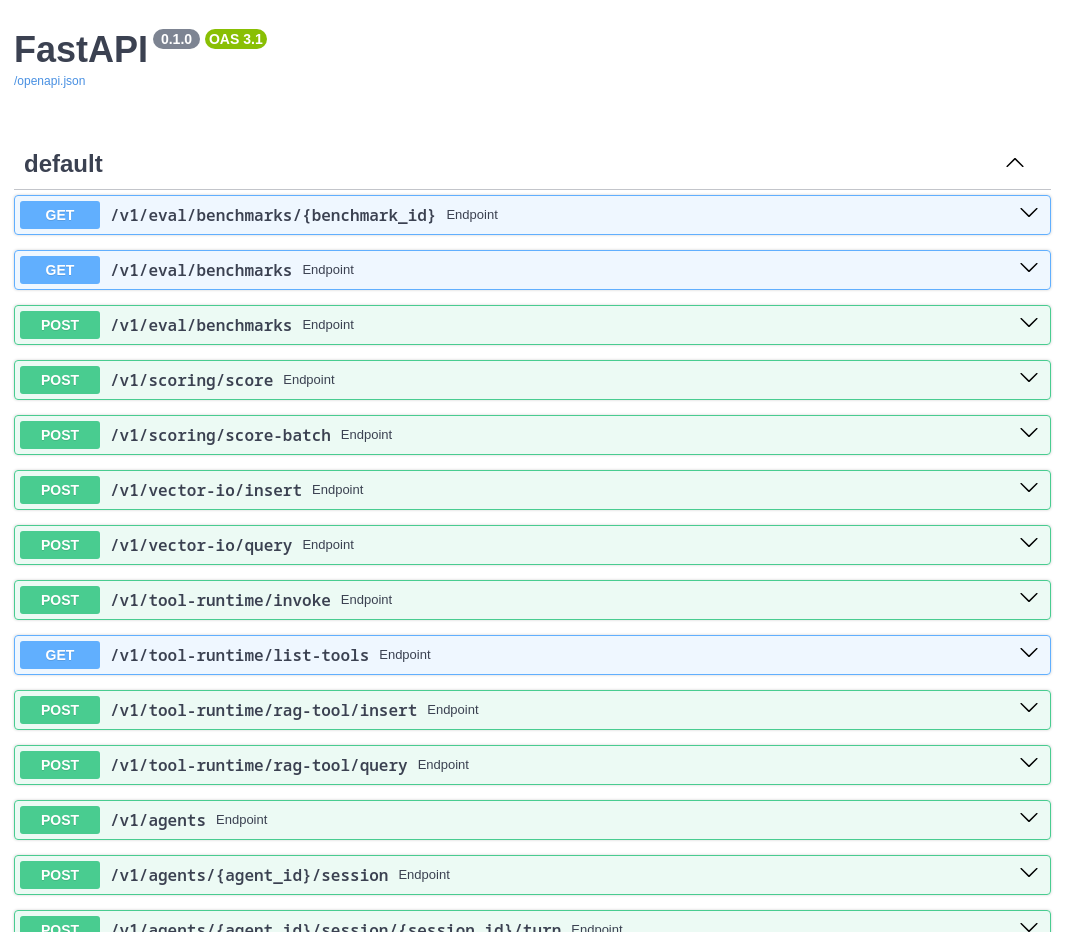

The good news, however, is that the stack supports a docs endpoint (in our case, http://10.1.2.128:8321/docs) that you can use to explore the APIs (Figure 1). You can also use that endpoint to invoke and experiment with the APIs, which we found helped a lot.

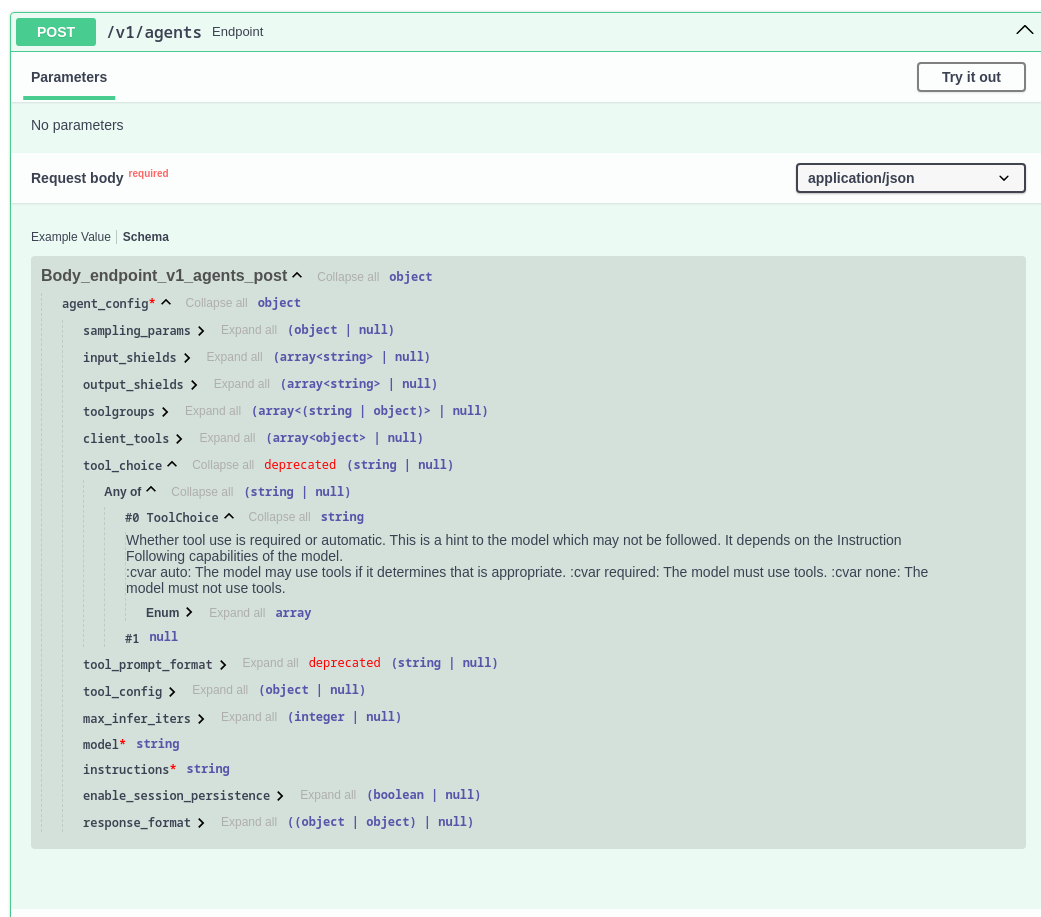

From the initial page, you can drill down to either invoke specific APIs or look at the specific schemas. For example, Figure 2 shows the schema for the agent API after drilling down to look at the tool_choice option.

If you can't quite find an example or documentation that shows what you want to do, using the docs endpoint is a great resource.

Our first Node.js Llama Stack application

Being Node.js developers, we wanted to start by exploring an example that we could run with Node.js. A TypeScript-based client is available, so that is what we used: llama-stack-client-typescript. As stated in the documentation, it is an automatically generated client based on an OpenAPI definition of the reference API. There is also Markdown documentation for the implementation itself. As mentioned in the previous section, the docs endpoint was also a great resource for understanding the client functionality.

To start, we wanted to implement the same question flow that we used in the past to explore tool calling. This consists of providing the LLM with 2 tools:

- favorite_color_tool: Returns the favorite color for a person in the specified city and country.

- favorite_hockey_tool: Returns the favorite hockey team for a person in the specified city and country.

Then, we ran through this sequence of questions to see how well they were answered:

'What is my favorite color?',

'My city is Ottawa',

'My country is Canada',

'I moved to Montreal. What is my favorite color now?',

'My city is Montreal and my country is Canada',

'What is the fastest car in the world?',

'My city is Ottawa and my country is Canada, what is my favorite color?',

'What is my favorite hockey team ?',

'My city is Montreal and my country is Canada',

'Who was the first president of the United States?',

The full source code for the first example is available in ai-tool-experimentation/llama-stack/favorite-color.mjs.

Defining the tools

In our first application, we used local tools defined as part of the application itself. Llama Stack has its own JSON format that is used to define the tools (every framework seems to have its own, unless you are using MCP).

For Llama Stack, the definitions ended up looking like this:

const availableTools = [

{

tool_name: 'favorite_color_tool',

description:

'returns the favorite color for person given their City and Country',

parameters: {

city: {

param_type: 'string',

description: 'the city for the person',

required: true,

},

country: {

param_type: 'string',

description: 'the country for the person',

required: true,

},

},

},

{

tool_name: 'favorite_hockey_tool',

description:

'returns the favorite hockey team for a person given their City and Country',

parameters: {

city: {

param_type: 'string',

description: 'the city for the person',

required: true,

},

country: {

param_type: 'string',

description: 'the country for the person',

required: true,

},

},

},

];

Tool implementations

Our tool implementations were the same as in previous explorations (with the exception of one interesting tweak that we will discuss later in this post).

function getFavoriteColor(args) {

const city = args.city;

const country = args.country;

if (city === 'Ottawa' && country === 'Canada') {

return 'the favoriteColorTool returned that the favorite color for Ottawa Canada is black';

} else if (city === 'Montreal' && country === 'Canada') {

return 'the favoriteColorTool returned that the favorite color for Montreal Canada is red';

} else {

return `the favoriteColorTool returned The city or country

was not valid, assistant please ask the user for them`;

}

}

function getFavoriteHockeyTeam(args) {

const city = args.city;

const country = args.country;

if (city === 'Ottawa' && country === 'Canada') {

return 'the favoriteHockeyTool returned that the favorite hockey team for Ottawa Canada is The Ottawa Senators';

} else if (city === 'Montreal' && country === 'Canada') {

return 'the favoriteHockeyTool returned that the favorite hockey team for Montreal Canada is the Montreal Canadians';

} else {

return `the favoriteHockeyTool returned The city or country

was not valid, please ask the user for them`;

}

}

Asking the questions

For the first example, we used the chat completion APIs (as opposed to the agent APIs). The call to the API providing the tools looked like this:

for (let i = 0; i < questions.length; i++) {

console.log('QUESTION: ' + questions[i]);

messages.push({ role: 'user', content: questions[i] });

const response = await client.inference.chatCompletion({

messages: messages,

model_id: model_id,

tools: availableTools,

});

console.log(' RESPONSE:' + (await handleResponse(messages, response)));

}

Invoking tools

Because we are using the completions API, calling the tools is not handled for us; we just get a request to invoke the tool and return the response as part of the context in a subsequent call to the completions API. For Llama Stack, our handleResponse that does that looks like this:

async function handleResponse(messages, response) {

// push the model's response to the chat

messages.push(response.completion_message);

if (

response.completion_message.tool_calls &&

response.completion_message.tool_calls.length != 0

) {

for (const tool of response.completion_message.tool_calls) {

// log the function calls so that we see when they are called

log(' FUNCTION CALLED WITH: ' + inspect(tool));

console.log(' CALLED:' + tool.tool_name);

const func = funcs[tool.tool_name];

if (func) {

const funcResponse = func(tool.arguments);

messages.push({

role: 'tool',

content: funcResponse,

call_id: tool.call_id,

tool_name: tool.tool_name,

});

} else {

messages.push({

role: 'tool',

call_id: tool.call_id,

tool_name: tool.tool_name,

content: 'invalid tool called',

});

}

}

// call the model again so that it can process the data returned by the

// function calls

return handleResponse(

messages,

await client.inference.chatCompletion({

messages: messages,

model_id: model_id,

tools: availableTools,

}),

);

} else {

// no function calls just return the response

return response.completion_message.content;

}

}

So far, this is all quite similar to the code we used for other frameworks, with tweaks to use the JSON format used to define tools with Llama Stack and tweaks to use the methods provided by the Llama Stack APIs.
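The handleResponse function above looks up each tool implementation in a funcs object that is not shown in the excerpt. The following is a minimal sketch of how that lookup could work, assuming funcs is a plain object keyed by tool_name. The stub tool implementations and the dispatchToolCall helper are hypothetical names used for illustration; they are not part of the Llama Stack client.

```javascript
// Hypothetical stand-ins for the tool implementations shown earlier.
const getFavoriteColor = (args) =>
  `favorite color for ${args.city} ${args.country} is black`;
const getFavoriteHockeyTeam = (args) =>
  `favorite hockey team for ${args.city} ${args.country} is the Senators`;

// Assumption: funcs maps each tool_name from the tool definitions
// to the function that implements it.
const funcs = {
  favorite_color_tool: getFavoriteColor,
  favorite_hockey_tool: getFavoriteHockeyTeam,
};

// Turn one tool call requested by the model into the 'tool' message
// that is pushed back into the conversation.
function dispatchToolCall(toolCall) {
  // Object.hasOwn avoids matching inherited keys such as 'toString'.
  const func = Object.hasOwn(funcs, toolCall.tool_name)
    ? funcs[toolCall.tool_name]
    : undefined;
  return {
    role: 'tool',
    call_id: toolCall.call_id,
    tool_name: toolCall.tool_name,
    content: func ? func(toolCall.arguments) : 'invalid tool called',
  };
}

// Example tool call in the shape used by handleResponse.
const reply = dispatchToolCall({
  call_id: 'call-1',
  tool_name: 'favorite_color_tool',
  arguments: { city: 'Ottawa', country: 'Canada' },
});
console.log(reply.content);
```

Keeping the lookup in one place means an unknown tool name requested by the model results in an 'invalid tool called' message rather than an exception.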

An interesting wrinkle

When we first ran the example using the same tool implementations that we had used before, we saw the following output when the LLM did not have the information needed by one of the tools:

node favorite-color.mjs

Iteration 0 ------------------------------------------------------------

QUESTION: What is my favorite color?

CALLED:favorite_color_tool

RESPONSE:{"type": "error", "message": "The city or country was not valid, please ask the user for them"}

QUESTION: My city is Ottawa

CALLED:favorite_color_tool

RESPONSE:{"type": "error", "message": "The city or country was not valid, please ask the user for them"}

QUESTION: My country is Canada

CALLED:favorite_color_tool

RESPONSE:"black"

This is not what we expected; with other frameworks (when we used a similar 8B llama model) the RESPONSE: was a better formatted request for the information which was needed, instead of the direct output from the tool itself. This is likely related to how Llama Stack handles what it sees as errors returned by the tool.

We found that by tweaking the response from the tool to call out the assistant specifically by changing the following:

return `the favoriteColorTool returned The city or country

was not valid, please ask the user for them`;

to this:

return `the favoriteColorTool returned The city or country

was not valid, assistant please ask the user for them`;

Adding the single word assistant in front of please ask the user for them helped the model handle the response so that the flow was as expected, with the RESPONSE being a request for more information when needed. With this change, the flow was as follows:

node favorite-color.mjs

Iteration 0 ------------------------------------------------------------

QUESTION: What is my favorite color?

CALLED:favorite_color_tool

RESPONSE:Please provide your city and country.

QUESTION: My city is Ottawa

CALLED:favorite_color_tool

RESPONSE:Please provide your country.

QUESTION: My country is Canada

CALLED:favorite_color_tool

RESPONSE:Your favorite color is black.

QUESTION: I moved to Montreal. What is my favorite color now?

CALLED:favorite_color_tool

RESPONSE:Your favorite color is now red.

QUESTION: My city is Montreal and my country is Canada

CALLED:favorite_color_tool

RESPONSE:Your favorite color is still red.

QUESTION: What is the fastest car in the world?

RESPONSE:I cannot answer this question as none of the provided functions can be used to determine the fastest car in the world.

QUESTION: My city is Ottawa and my country is Canada, what is my favorite color?

CALLED:favorite_color_tool

RESPONSE:Your favorite color is black.

QUESTION: What is my favorite hockey team ?

CALLED:favorite_hockey_tool

RESPONSE:Your favorite hockey team is The Ottawa Senators.

QUESTION: My city is Montreal and my country is Canada

CALLED:favorite_hockey_tool

RESPONSE:Your favorite hockey team is the Montreal Canadiens.

QUESTION: Who was the first president of the United States?

RESPONSE:I cannot answer this question as none of the provided functions can be used to determine the first president of the United States.

Llama loves its tools

In the output, you might have noticed that when we asked questions that were not related to the tools, the response was that the LLM could not answer the question. This is despite us knowing that the answer is in the data the model was trained on.

QUESTION: Who was the first president of the United States?

RESPONSE:I cannot answer this question as none of the provided functions can be used to determine the first president of the United States.

QUESTION: What is the fastest car in the world?

RESPONSE:I cannot answer this question as none of the provided functions can be used to determine the fastest car in the world.

We have seen this before with Llama 3.1 and other frameworks (see the section "A last minute wrinkle" in A quick look at tool use/function calling with Node.js and Ollama). In addition, we double-checked that the default with Llama Stack for tool choice was "auto," which means it should decide when/if tools should be used. So at this point, Llama seems to love its tools so much that if you provide a tool it feels it must use one of the tools.

Using MCP with Llama Stack

No look at tool calling with a framework would be complete without covering MCP. Model Context Protocol (MCP) is a protocol that allows integration between large language models (LLMs) and tools, often as part of an agent. One of the interesting aspects of MCP is that tools hosted in a server can be consumed by different frameworks, even frameworks written in a different language from the one the server was written in.

In the article A quick look at MCP with Large Language Models and Node.js we packaged up our favorite_color_tool and favorite_hockey_tool into an MCP server and used those tools with 2 different frameworks. To round out our initial exploration of Llama Stack, we will look at using those same tools through the same MCP server with Llama Stack.

Where is my MCP server now?

In our previous explorations, the MCP server was started by our application, ran on the same system as our application, and communication was through Standard IO (stdio for short). This is good if what your application needs to access through the tools is local to the machine on which the application is running, but not so good otherwise. As an example, it would likely be better to lock down access to a database to a single centralized machine instead of letting all client machines running an application connect to the database directly.

The MCP support built into Llama Stack works a bit differently than what we saw with the other frameworks. MCP servers are registered with the Llama Stack instance, and then the tools provided by the MCP server are accessible to clients that connect to that instance. The MCP support also assumes a Server-Sent Events (SSE) connection instead of stdio, which allows the MCP server to run remotely from where the Llama Stack is running.

Starting the MCP server

Because our existing MCP server in ai-tool-experimentation/mcp/favorite-server used stdio, the first question was how we could use it with Llama Stack. We'd either need to modify the code or find some kind of bridge. Lucky for us, we found Supergateway, which allowed us to start our existing MCP server and have it support SSE. The script we used to do that is in ai-tool-experimentation/mcp/start-mcp-server-for-llama-stack.sh and was as follows:

npx -y supergateway --port 8002 --stdio "node favorite-server/build/index.js"

We chose to run it on the same machine on which our Llama Stack container was running, but it could have been run on any machine accessible to the container.

One other note is that we did update the code for the MCP server so that the tools added the "assistant" request in the response when not enough information was provided. This addition did not affect the flow with the other frameworks.

Registering the MCP server

Before an MCP server can be used with Llama Stack, we must tell Llama Stack about it through a registration process. This provides Llama Stack with the information needed to contact the MCP server.

Our Node.js script to register the server was ai-tool-experimentation/mcp/llama-stack-register-mcp.mjs, as follows:

import LlamaStackClient from 'llama-stack-client';

const client = new LlamaStackClient({

baseURL: 'http://10.1.2.128:8321',

timeout: 120 * 1000,

});

await client.toolgroups.register({

toolgroup_id: 'mcp::mcp_favorites',

provider_id: 'model-context-protocol',

mcp_endpoint: { uri: 'http://10.1.2.128:8002/sse' },

});

The toolgroup_id is the ID that we will need when we want the LLM to use the tools from the MCP server. You should only have to register the MCP server once after the Llama Stack instance is started. Because we ran the MCP server on the same host as the Llama Stack instance, you can see that the IP addresses are the same.
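Because registration should only be needed once per Llama Stack instance, a registration script that may run more than once could check first whether the tool group is already known. The following is a minimal sketch, not something taken from the original script. The ensureToolgroup helper is a hypothetical name, and the sketch assumes that client.toolgroups.list() returns the registered tool groups with an identifier field; check that response shape against the client version you are using.

```javascript
// Register a tool group only if the Llama Stack instance does not know it yet.
// `client` is expected to be a LlamaStackClient instance (passed in so that
// this sketch has no imports of its own).
async function ensureToolgroup(client, { toolgroup_id, provider_id, mcp_endpoint }) {
  const listed = await client.toolgroups.list();
  // Assumption: the list call yields an array of tool groups, each with an
  // `identifier`; some client versions may wrap the array in `data`.
  const groups = Array.isArray(listed) ? listed : (listed?.data ?? []);
  if (groups.some((group) => group.identifier === toolgroup_id)) {
    return false; // already registered, nothing to do
  }
  await client.toolgroups.register({ toolgroup_id, provider_id, mcp_endpoint });
  return true; // registered by this call
}
```

With the client created in the registration script, calling `await ensureToolgroup(client, { toolgroup_id: 'mcp::mcp_favorites', provider_id: 'model-context-protocol', mcp_endpoint: { uri: 'http://10.1.2.128:8002/sse' } })` would register the server on the first run and skip the registration on later runs.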

Using tools from the MCP server

From what we can see so far, you can only use tools from an MCP server through the Llama Stack agent APIs, not the completion APIs that we used for our first application. Therefore, we switched to the agent API for our second application.

When using the agent API, interaction with tools is handled for you behind the scenes. Instead of getting a request to invoke a tool and then having to invoke it and return the response, this happens within the framework itself. This simplified the application significantly, as we just had to tell the API which tool group we wanted to use and then ask our standard set of questions. The agent APIs also manage memory, which was another simplification, as we did not need to keep a history of the messages that had already been exchanged.

The code to create the agent and tell it which MCP server we wanted to use was as follows:

// Create the agent

const agentic_system_create_response = await client.agents.create({

agent_config: {

model: model_id,

instructions: 'You are a helpful assistant',

toolgroups: ['mcp::mcp_favorites'],

tool_choice: 'auto',

input_shields: [],

output_shields: [],

max_infer_iters: 10,

},

});

const agent_id = agentic_system_create_response.agent_id;

// Create a session that will be used to ask the agent a sequence of questions

const sessionCreateResponse = await client.agents.session.create(agent_id, {

session_name: 'agent1',

});

const session_id = sessionCreateResponse.session_id;

You can see that we use mcp::mcp_favorites to match the toolgroup_id that we used when we registered the server. Having done that, it was a simple matter of asking each question using the agent API.

The code is a bit longer than it might have been otherwise because, as of the time of writing, the agent API only supports a streaming API, which takes a few more lines in order to collect the response:

for (let i = 0; i < questions.length; i++) {

console.log('QUESTION: ' + questions[i]);

const responseStream = await client.agents.turn.create(

agent_id,

session_id,

{

stream: true,

messages: [{ role: 'user', content: questions[i] }],

},

);

// as of March 2025 only streaming was supported

let response = '';

for await (const chunk of responseStream) {

if (chunk.event.payload.event_type === 'turn_complete') {

response = response + chunk.event.payload.turn.output_message.content;

}

}

console.log(' RESPONSE:' + response);

}

The full source code for this application is in ai-tool-experimentation/mcp/llama-stack-agent-mcp.mjs. The flow ran and used the same tools; we just don't get the information about tools when they are called, as the application does not see the tool calls, only the final response:

Iteration 0 ------------------------------------------------------------

QUESTION: What is my favorite color?

RESPONSE:I don't have information about your city and country. Please provide me with that information so I can tell you your favorite color.

QUESTION: My city is Ottawa

RESPONSE:I still don't have information about your country. Please provide me with that information so I can tell you your favorite color.

QUESTION: My country is Canada

RESPONSE:Your favorite color is black.

QUESTION: I moved to Montreal. What is my favorite color now?

RESPONSE:Since you've moved to a new city, I'll need to update your information. However, I don't have any information about your favorite color being tied to a specific location. If you'd like to know your favorite color based on your current location, I can try to look it up for you.

Please note that the favorite_color_tool function is not designed to update or change your favorite color based on your location. It's more of a static tool that provides information about your favorite color based on your city and country at the time of the initial query.

If you'd like, I can try to look up your favorite color again using the favorite_color_tool function with your new location.

QUESTION: My city is Montreal and my country is Canada

RESPONSE:Your favorite color has changed to red since you moved to Montreal.

QUESTION: What is the fastest car in the world?

RESPONSE:I don't have any information about the fastest car in the world. The functions provided do not include any information about cars or their speeds.

QUESTION: My city is Ottawa and my country is Canada, what is my favorite color?

RESPONSE:Your favorite color is still black.

QUESTION: What is my favorite hockey team ?

RESPONSE:Your favorite hockey team is The Ottawa Senators.

QUESTION: My city is Montreal and my country is Canada

RESPONSE:Your favorite hockey team is now the Montreal Canadians.

QUESTION: Who was the first president of the United States?

RESPONSE:I don't have any information about the presidents of the United States. The functions provided do not include any information about historical figures or political leaders.

It is interesting that the flow was a bit different because the agent uses a more sophisticated way of "thinking," but in our case it seems to have "over thought" instead of just calling the tool to get the answer:

QUESTION: I moved to Montreal. What is my favorite color now?

RESPONSE:Since you've moved to a new city, I'll need to update your information. However, I don't have any information about your favorite color being tied to a specific location. If you'd like to know your favorite color based on your current location, I can try to look it up for you.

Please note that the favorite_color_tool function is not designed to update or change your favorite color based on your location. It's more of a static tool that provides information about your favorite color based on your city and country at the time of the initial query.

If you'd like, I can try to look up your favorite color again using the favorite_color_tool function with your new location.

Compared to what we saw when using the completions API:

QUESTION: I moved to Montreal. What is my favorite color now?

CALLED:favorite_color_tool

RESPONSE:Your favorite color is now red.

What about access to the local application environment?

The Llama Stack approach to MCP support works well in most cases. The exception is when the tools need access to the local environment where the application is running, rather than the environment where the Llama Stack instance (or some other machine) is running. What do you do in that case?

While Llama Stack does not have built-in MCP support for this, it is quite easy to add it in a manner similar to what we did for Ollama in A quick look at MCP with large language models and Node.js. We start an MCP server locally, communicate with it over stdio, and convert the tools it provides into the format needed for local tools.

The code to start the local MCP server was:

await mcpClient.connect(

new StdioClientTransport({

command: 'node',

args: ['favorite-server/build/index.js'],

}),

);

In the case of Llama Stack, the code needed to convert the JSON tool descriptions provided by the MCP server into the format needed by Llama Stack was as follows:

let availableTools = await mcpClient.listTools();

availableTools = availableTools.tools;

for (let i = 0; i < availableTools.length; i++) {

const tool = availableTools[i];

tool.tool_name = tool.name;

delete tool.name;

tool.parameters = tool.inputSchema.properties;

for (const [key, parameter] of Object.entries(tool.parameters)) {

parameter.param_type = parameter.type;

delete parameter.type;

if (tool.inputSchema.required?.includes(key)) {

parameter.required = true;

}

}

delete tool.inputSchema;

}

With that, we could provide those tool descriptions in the completion API. When tool calls were requested by the LLM, we could convert those requests into invocations of the tools through the MCP server as follows:

for (const tool of response.completion_message.tool_calls) {

// log the function calls so that we see when they are called

log(' FUNCTION CALLED WITH: ' + inspect(tool));

console.log(' CALLED:' + tool.tool_name);

try {

const funcResponse = await mcpClient.callTool({

name: tool.tool_name,

arguments: tool.arguments,

});

for (let i = 0; i < funcResponse.content.length; i++) {

messages.push({

role: 'tool',

content: funcResponse.content[i].text,

call_id: tool.call_id,

tool_name: tool.tool_name,

});

}

} catch (e) {

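// report the failure to the model, tied to the tool call that failed

messages.push({

role: 'tool',

content: `tool call failed: ${e}`,

call_id: tool.call_id,

tool_name: tool.tool_name,

});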

}

}

The full code for the example using tools from a local MCP server with Llama Stack is in ai-tool-experimentation/mcp/llama-stack-local-mcp.mjs. It's nice that you can easily use either a local MCP server or one registered with the Llama Stack, depending on what makes the most sense for your application.
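The conversion shown earlier rewrites the tool descriptions in place. As an alternative, here is a minimal sketch of the same mapping written as a pure function that leaves the MCP description untouched. The mcpToolToLlamaStack name and the sample tool are hypothetical, and the sketch assumes that a missing required list in the input schema means no parameter is required. Unlike the in-place version, it always sets required explicitly to true or false.

```javascript
// Convert one MCP tool description into the Llama Stack tool format used
// earlier (tool_name, description, parameters with param_type/required).
function mcpToolToLlamaStack(mcpTool) {
  const schema = mcpTool.inputSchema ?? {};
  // Assumption: no "required" list means that no parameter is required.
  const required = new Set(schema.required ?? []);
  const parameters = {};
  for (const [key, property] of Object.entries(schema.properties ?? {})) {
    // Rename `type` to `param_type` and keep the remaining fields as they are.
    const { type, ...rest } = property;
    parameters[key] = { ...rest, param_type: type, required: required.has(key) };
  }
  return {
    tool_name: mcpTool.name,
    description: mcpTool.description,
    parameters,
  };
}

// Hypothetical tool description in the shape returned by an MCP listTools call.
const sampleMcpTool = {
  name: 'favorite_color_tool',
  description: 'returns the favorite color for person given their City and Country',
  inputSchema: {
    type: 'object',
    properties: {
      city: { type: 'string', description: 'the city for the person' },
      country: { type: 'string', description: 'the country for the person' },
    },
    required: ['city', 'country'],
  },
};

const converted = mcpToolToLlamaStack(sampleMcpTool);
console.log(JSON.stringify(converted, null, 2));
```

Because the input is not modified, the original MCP descriptions remain available, for example if the same list of tools also needs to be passed to another framework.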

Wrapping up

This post looked at using Node.js with large language models and Llama Stack. We explored how to run tools with both the completions API and the agent API, using in-line tools, local MCP tools, and remote MCP tools. We hope it has given you, as a JavaScript/TypeScript/Node.js developer, a good start on using large language models with Llama Stack.

To learn more about developing with large language models and Node.js, JavaScript, and TypeScript, see the post Essential AI tutorials for Node.js developers.

Explore more Node.js content from Red Hat:

- Visit our topic pages on Node.js and AI for Node.js developers.

- Download the e-book A Developer's Guide to the Node.js Reference Architecture.

- Explore the Node.js Reference Architecture on GitHub.