If you've done enough repeated Maven builds on OpenShift, you are probably aware of the "download the internet" phenomenon that plagues build times. You start a build, expecting the Maven dependencies downloaded during your last build to be re-used, but quickly see your network traffic ramp up while the same 100MB of jars are downloaded again and again. Even builds of a few minutes tend to grind on me and frustrate me as a developer when I'm trying to test, deploy, and fix quickly.

Thankfully, Maven can be pointed at a local mirror (for example, a Nexus server) that caches dependencies and makes them available to future builds, only updating from the upstream repo as needed on a regular (and configurable) schedule.

For those using OpenShift, and in particular many of the available JBoss S2I images, you can:

- Use incremental builds to save Maven dependencies after a build, and re-use them during subsequent builds

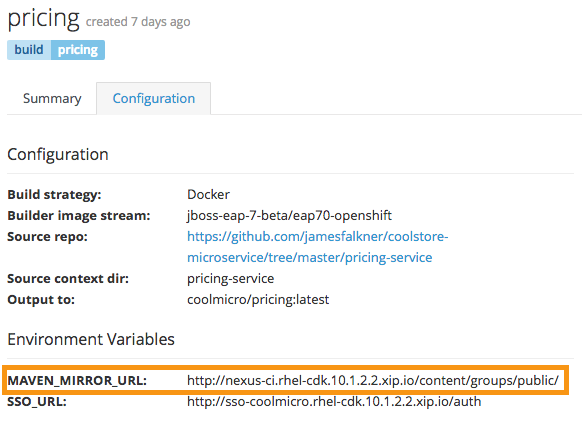

- Set up a local Maven mirror and set a build-time environment variable MAVEN_MIRROR_URL that points to it

- Extend existing Source-to-Image (S2I) builder images to provide a custom settings.xml

Jorge outlines how to do all of these in an awesome blog post.

Maven mirrors for Docker-based builds

When using S2I, you more or less get the Maven mirror capability for free. And for production use, I would recommend creating or extending S2I images as Jorge outlined. But what if there are no S2I images available for you to use? In my case, I wanted the Maven mirror behavior and wanted to use JBoss EAP 7 Beta and a Keycloak adapter to SSO-enable my app. Unfortunately, the available S2I image for EAP 7 doesn't include Keycloak, and the Keycloak adapter Docker image is based on EAP 6, so I had two choices:

- Create a new S2I image by extending the existing EAP 7 Beta S2I image and modifying (extending) its S2I assemble script to add the Keycloak adapter

- Use a Docker build (FROM the EAP 7 Beta S2I image), injecting the local Maven mirror and Keycloak adapter

My workflow isn't advanced enough to keep track of custom build images, so I went with option 2 and put some logic into my Dockerfile:

```dockerfile
# Use EAP 7 Beta as a base image
FROM jboss-eap-7-beta/eap70-openshift

ENV KEYCLOAK_VERSION 1.9.4.Final

# Add the Maven mirror
RUN sed -i -e 's/<mirrors>/&\n <mirror>\n <id>sti-mirror<\/id>\n <url>${env.MAVEN_MIRROR_URL}<\/url>\n <mirrorOf>external:*<\/mirrorOf>\n <\/mirror>/' ${HOME}/.m2/settings.xml

# Install the adapter
WORKDIR ${JBOSS_HOME}
RUN curl -L https://downloads.jboss.org/keycloak/$KEYCLOAK_VERSION/adapters/keycloak-oidc/keycloak-wildfly-adapter-dist-$KEYCLOAK_VERSION.tar.gz | tar zx

# Standalone.xml modifications
RUN sed -i -e 's/<extensions>/&\n <extension module="org.keycloak.keycloak-adapter-subsystem"\/>/' $JBOSS_HOME/standalone/configuration/standalone-openshift.xml && \
    sed -i -e 's/<profile>/&\n <subsystem xmlns="urn:jboss:domain:keycloak:1.1"\/>/' $JBOSS_HOME/standalone/configuration/standalone-openshift.xml && \
    sed -i -e 's/<security-domains>/&\n <security-domain name="keycloak">\n <authentication>\n <login-module code="org.keycloak.adapters.jboss.KeycloakLoginModule" flag="required"\/>\n <\/authentication>\n <\/security-domain>/' $JBOSS_HOME/standalone/configuration/standalone-openshift.xml

# Perform the build
RUN mvn package

# After the build, copy artifacts (e.g. .war files from target/) to the deploy directory (e.g. $JBOSS_HOME/standalone/deployments)
```

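Notice the use of ${env.MAVEN_MIRROR_URL} in the Dockerfile. The sed expression is single-quoted, so the placeholder is written to settings.xml literally and Maven resolves it from the environment when the build runs. MAVEN_MIRROR_URL must therefore be set as an environment variable in your OpenShift BuildConfig so that the build picks it up.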
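To see what that sed expression leaves behind, here is a minimal sketch that applies it to a throwaway settings.xml. The sample file and the inject_mirror function name are made up for illustration, and the \n escapes in the replacement text assume GNU sed.

```shell
#!/bin/sh
# Sketch only: apply the Dockerfile's mirror-injection sed to a scratch file.
# inject_mirror and the sample settings.xml are hypothetical; GNU sed is assumed.
set -eu

inject_mirror() {
    # Single quotes keep ${env.MAVEN_MIRROR_URL} literal, so Maven (not the
    # shell) resolves it from the environment when the build runs.
    sed -i -e 's/<mirrors>/&\n <mirror>\n <id>sti-mirror<\/id>\n <url>${env.MAVEN_MIRROR_URL}<\/url>\n <mirrorOf>external:*<\/mirrorOf>\n <\/mirror>/' "$1"
}

# A tiny stand-in for ${HOME}/.m2/settings.xml.
scratch=$(mktemp)
printf '<settings>\n <mirrors>\n </mirrors>\n</settings>\n' > "$scratch"

inject_mirror "$scratch"
cat "$scratch"
rm -f "$scratch"
```

The printed file should contain a mirror entry directly under the opening mirrors tag, with the placeholder still unexpanded.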

Also note the fancy sed commands that alter the standalone-openshift.xml file (this is the EAP configuration file in use when EAP runs on OpenShift). It would have been nice to be able to invoke Keycloak's adapter-install-offline.cli file, but unfortunately the shenanigans the base image goes through to launch EAP in OpenShift render jboss-cli.sh pretty much useless.
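Because sed exits successfully even when its pattern never matches, a changed base image could silently skip these edits. One possible guard, sketched here with a hypothetical verify_keycloak_config function, greps for each injected fragment and fails if any is missing. In a Dockerfile it could run as a further RUN step after the sed commands.

```shell
#!/bin/sh
# Sketch only: fail when any Keycloak fragment is missing from the EAP config.
# verify_keycloak_config is a hypothetical helper, not part of the base image.
set -eu

verify_keycloak_config() {
    config=$1
    for fragment in \
        'org.keycloak.keycloak-adapter-subsystem' \
        'urn:jboss:domain:keycloak:1.1' \
        'org.keycloak.adapters.jboss.KeycloakLoginModule'
    do
        # -F treats the fragment as a fixed string rather than a regex.
        if ! grep -qF "$fragment" "$config"; then
            echo "missing in $config: $fragment" >&2
            return 1
        fi
    done
}

# Demo against a scratch file that stands in for standalone-openshift.xml.
sample=$(mktemp)
cat > "$sample" <<'EOF'
<extension module="org.keycloak.keycloak-adapter-subsystem"/>
<subsystem xmlns="urn:jboss:domain:keycloak:1.1"/>
<login-module code="org.keycloak.adapters.jboss.KeycloakLoginModule" flag="required"/>
EOF

verify_keycloak_config "$sample" && echo "keycloak config present"
rm -f "$sample"
```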

Finally, note that MAVEN_MIRROR_URL should be set to the public endpoint (route) of the Nexus server. S2I builds on OpenShift are able to use an internal hostname (such as nexus.ci.svc.cluster.local) to reach the Nexus server at build time, but unfortunately for Docker-based builds this naming is not configured in the underlying container (it is only possible for S2I builds at the moment).
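An internal service name would likely only surface as a failure once Maven starts resolving dependencies, so it can help to reject one early. The following is a rough sketch with a hypothetical check_mirror_url helper; the suffix test for .svc and .svc.cluster.local and the example URLs are assumptions for illustration, not something OpenShift provides.

```shell
#!/bin/sh
# Sketch only: reject an empty or cluster-internal mirror URL before building.
# check_mirror_url and both example URLs are hypothetical.
set -eu

check_mirror_url() {
    url=${1:-}
    if [ -z "$url" ]; then
        echo "MAVEN_MIRROR_URL is not set" >&2
        return 1
    fi
    # Strip the scheme, then the path, then the port to isolate the hostname.
    host=${url#*://}
    host=${host%%/*}
    host=${host%%:*}
    case $host in
        *.svc|*.svc.cluster.local)
            echo "$host looks cluster-internal; use the public route instead" >&2
            return 1
            ;;
    esac
}

check_mirror_url "https://nexus.example.com/content/groups/public" && echo "ok"
check_mirror_url "http://nexus.ci.svc.cluster.local:8081/content/groups/public" || echo "rejected"
```

In a Docker build this kind of check could run in a RUN step ahead of mvn package, so a misconfigured BuildConfig fails fast with a readable message.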