The responsibility of maintaining and updating execution or decision environments (EEs/DEs) often falls to a few individuals, typically automation engineers. This article explores a structured method to automate this process and delegate responsibilities effectively across teams.

Imagine the following scenario... You are working as an Ansible Operations Engineer at Fort Automation in the Strategic Automation Corps. You’ve onboarded various groups to Red Hat Ansible Automation Platform and set up organizations for them. Now, the Linux, Windows, Network, and other teams are developing Ansible Playbooks that rely on specific certified and validated collections. As these teams expand their automation efforts, they consider creating and maintaining custom execution environments tailored to their unique requirements. These custom EEs include the collections most often required for their particular automation.

Updating custom execution environments

Keeping custom EEs updated is essential for several key reasons:

- Security and stability: Base images like ee-minimal-rhel8 receive critical updates, including security patches, which ensure the EE remains secure and stable.

- Compatibility: As new versions of collections are released, they may require testing to verify compatibility with existing playbooks. Staying updated prevents disruptions caused by incompatibilities.

- Enhanced features: Updates to collections frequently introduce new functionalities that can improve automation capabilities or address limitations in previous versions.

- Maintenance and future-proofing: Just as it is vital to keep playbooks and roles compatible with newer versions of ansible-core, it is equally important to maintain the EE they run on. Regularly updating EEs ensures alignment with the latest tools and prevents the accumulation of technical debt, making long-term maintenance more manageable and efficient.

Staying updated aligns with the Red Hat guidelines for execution environment lifecycle management and best practices.

Set up the build server

Everything presented here is already available thanks to the work of Sean Sullivan, David Danielsson, and all the contributors to the Red Hat Communities of Practice Execution Environment Utilities Collection. If you have not already completed the Utilizing Configuration as Code lab, complete at least the Config as Code Creating Execution Environments lesson, because it provides the playbooks, roles, and collections we use here. Note that the collection containers.podman-1.13.0 (or older) is required, since newer versions introduce issues in infra.ee_utilities (a fix is in progress).
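The version ceiling on containers.podman can be checked before a build. The following is a minimal sketch, not part of infra.ee_utilities: the function names and the MAX_PODMAN_COLLECTION variable are hypothetical, and the comparison assumes GNU sort with the -V option.

```shell
# Sketch: check an installed collection version against a ceiling.
# MAX_PODMAN_COLLECTION, version_le, and check_podman_collection are
# illustrative names, not part of any Ansible tool.
MAX_PODMAN_COLLECTION="1.13.0"

# version_le A B -> succeeds when version A <= version B (needs GNU sort -V).
version_le() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# Pinning at install time uses a version specifier (not executed here):
#   ansible-galaxy collection install 'containers.podman:<=1.13.0'

# check_podman_collection VERSION -> prints a verdict for that version.
check_podman_collection() {
  if version_le "$1" "$MAX_PODMAN_COLLECTION"; then
    echo "ok: containers.podman $1"
  else
    echo "too new: containers.podman $1 (max $MAX_PODMAN_COLLECTION)"
    return 1
  fi
}
```

For example, check_podman_collection 1.12.1 reports the version as acceptable, while check_podman_collection 1.14.0 returns a non-zero status that a wrapper script can use to stop before the build starts.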

We recommend setting up a dedicated development/build server. Building an execution environment (that is, a container image) from within a container is not currently supported. In our lab, we use a RHEL 9.4 virtual machine named builder with the Red Hat Ansible Automation Platform 2.5 for Red Hat Enterprise Linux (RHEL) 9 x86_64 (RPMs) repository enabled.

Follow this setup process:

- Install tools: Build a RHEL 9.4+ system and install Ansible, ansible-playbook, and ansible-builder. Starting with Ansible Automation Platform 2.5, most Ansible tools are installable via RPM, so it is no longer necessary to install upstream tools.

- Create service account: Create a non-privileged service account, such as ansible.

- Set up directory: Create a directory (e.g., /home/ansible/build_dir) and copy the content from the Config as Code Creating Execution Environments lab under build_dir. For your convenience, a solved version of the lab is available here, allowing you to easily copy the content.

- Configure: Modify the ansible.cfg, inventory, auth.yml, and vault.yml files to align with your environment, and validate that you can pull/push EEs to your Private Automation Hub using a non-administrative service account limited to the following roles: galaxy.execution_environment_collaborator and galaxy.execution_environment_namespace_owner.

- Security: Use vaulted variables in vault.yml, which can be decrypted automatically by setting vault_password_file=.password under [defaults] in your ansible.cfg. Restrict access to the service account’s directories. More on this later under the Limitations section of this article.

- Prepare playbook: Modify the build_ee.yml playbook as follows:

    ---
    - name: Playbook to configure execution environments
      hosts: builder
      gather_facts: false
      connection: local
      vars_files:
        - "../ee-definition.yml"
        - "../vault.yml"
      tasks:
        - name: Include ee_builder role
          ansible.builtin.include_role:
            name: infra.ee_utilities.ee_builder
    ...

The playbook now uses the following vars_files:

- ../ee-definition.yml: This file is a raw copy of your execution/decision environment configuration file.

- ../vault.yml: This file should contain the vaulted variables specific to your environment. Keep only the variables required in it.

Validation steps

Before automating the process with Git and webhooks, confirm the following manual steps work:

- Setup: Log in as ansible and navigate to /home/ansible/build_dir:

      $ cd /home/ansible/build_dir

- Fetch the EE definition file: Save the EE definition file (de-dynatrace.yml) as ee-definition.yml:

      $ wget -O ee-definition.yml \
          https://raw.githubusercontent.com/daleroux/casc_ee/main/de-dynatrace.yml

- Run the playbook: Execute the playbook to build and push the EE to the Private Automation Hub:

      $ ansible-playbook -i inventory.ini playbooks/build_ee.yml
      PLAY [Playbook to configure execution environments] ****************************
      … output truncated …
      PLAY RECAP *********************************************************************
      builder : ok=12 changed=7 unreachable=0 failed=0 skipped=2 rescued=0 ignored=0

- Validation: Ensure the execution environment builds successfully and is uploaded to your Private Automation Hub before moving forward.
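The manual steps above can be chained so that a failure in one step stops the rest. The following is a minimal sketch under stated assumptions: the run and validate_build helpers and the DRY_RUN switch are hypothetical names introduced here, while the directory, URL, and playbook path are the ones used in the steps above.

```shell
# Sketch: chain the manual validation steps and stop at the first failure.
# BUILD_DIR and DEFINITION_URL default to the values used in this article.
BUILD_DIR="${BUILD_DIR:-/home/ansible/build_dir}"
DEFINITION_URL="${DEFINITION_URL:-https://raw.githubusercontent.com/daleroux/casc_ee/main/de-dynatrace.yml}"

# run CMD... -> print the command when DRY_RUN=1 (hypothetical switch),
# otherwise execute it in the current shell.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# validate_build -> change directory, fetch the definition, build and push.
validate_build() {
  run cd "$BUILD_DIR" &&
    run wget -O ee-definition.yml "$DEFINITION_URL" &&
    run ansible-playbook -i inventory.ini playbooks/build_ee.yml
}
```

Setting DRY_RUN=1 before calling validate_build prints the three commands without running them, which is a cheap way to review what the script would do on the build server.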

Automating the build process: CI/CD integration

Streamline the process by integrating with GitLab repositories and pipelines:

Repository setup:

Create two repositories:

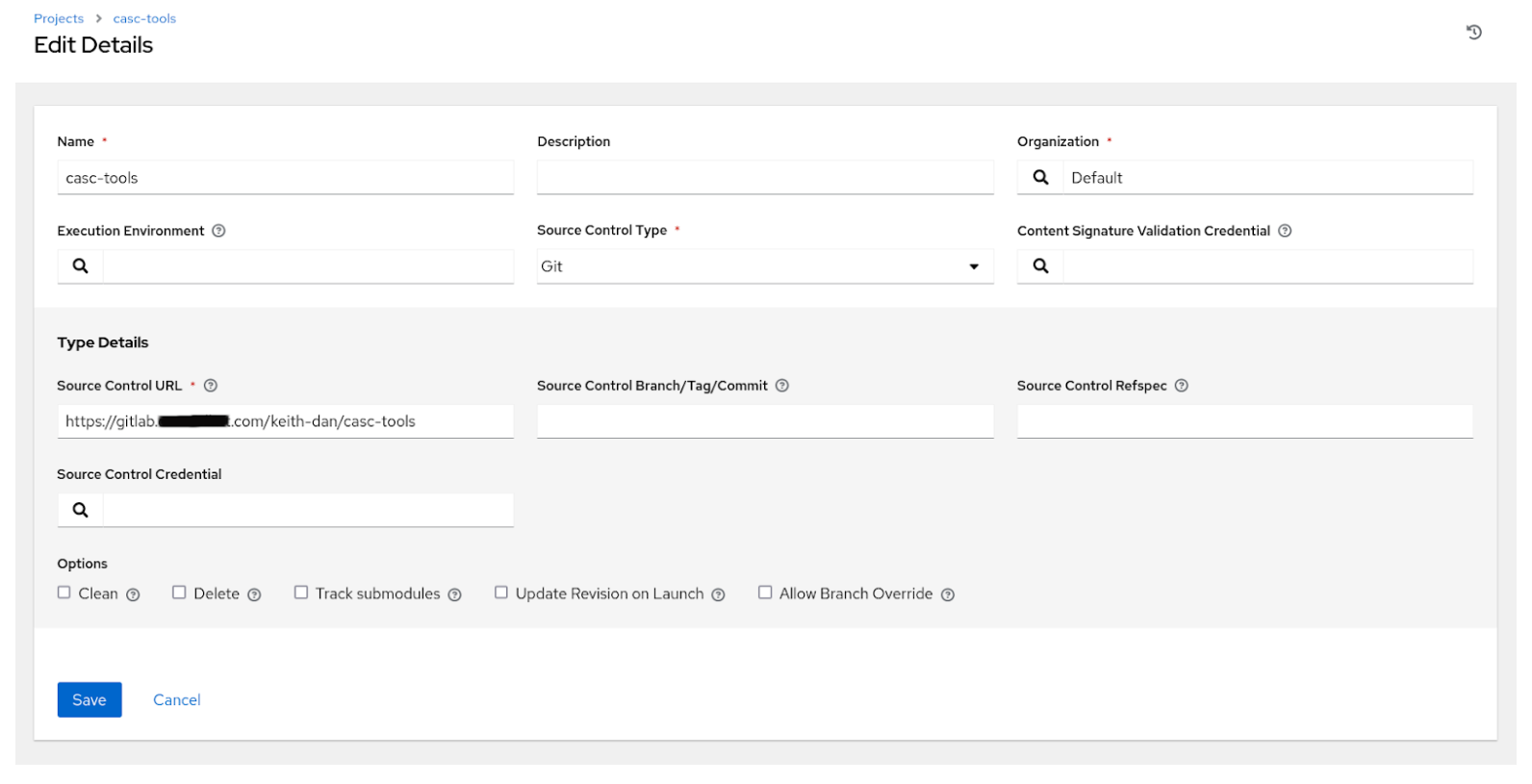

- casc-tools: Contains the fetch_ee_definition.yml playbook.

- Create a matching casc-tools project in Ansible Automation Platform (or give it a name of your choosing) that uses the previous repository as its source (Figure 1).

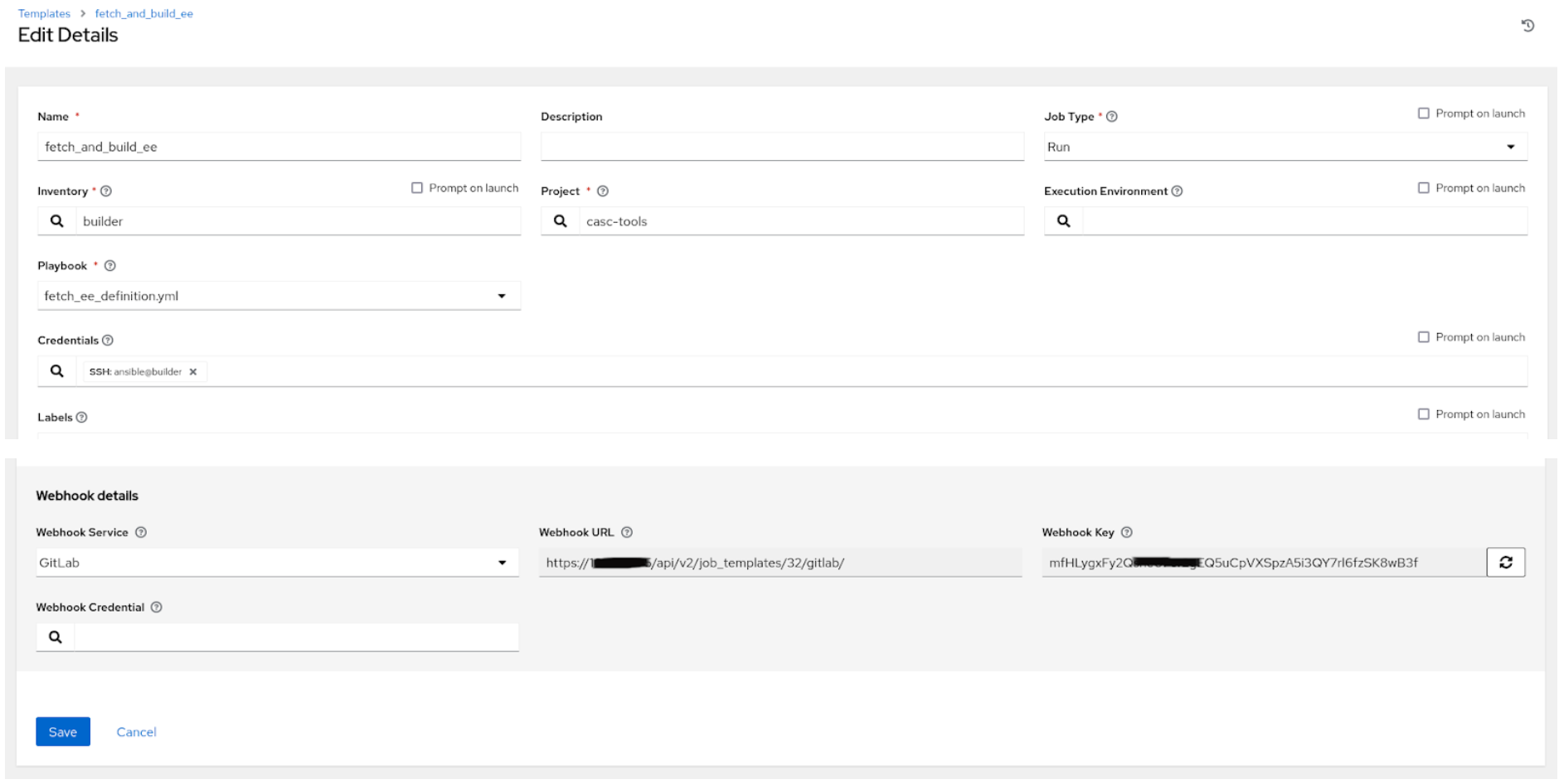

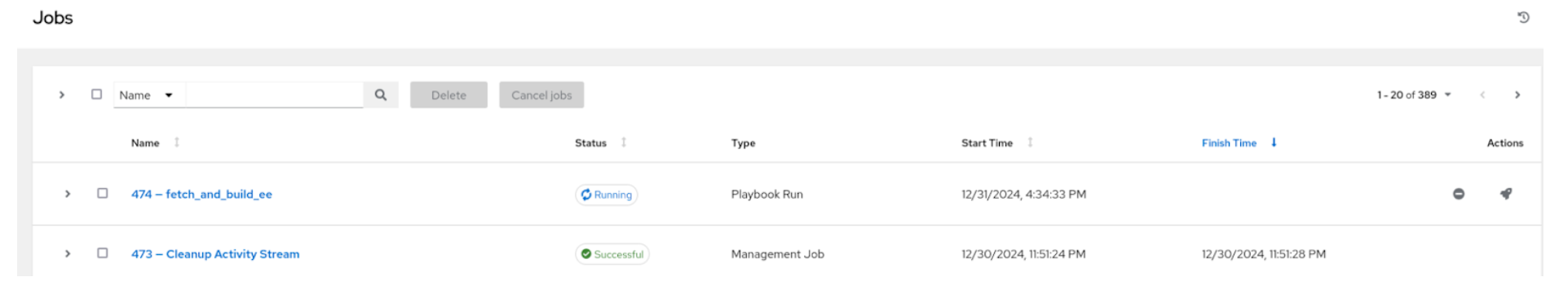

- Then create a job template, fetch_and_build_ee, which launches the fetch_ee_definition.yml playbook against the inventory named builder, which contains only our build server. The job template uses the machine credential ansible@builder, which authenticates as the non-privileged ansible user on the build server through secure shell (SSH). Enable webhooks of type GitLab and click Save to generate the Webhook URL and Webhook Key (Figure 2). Take note of both, as they are needed in a later step.

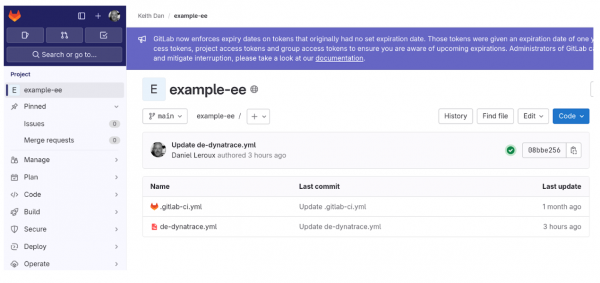

- The second GitLab repository hosts an EE/DE definition file (here, de-dynatrace.yml) and a .gitlab-ci.yml configuration file that GitLab CI/CD uses to run a pipeline each time the de-dynatrace.yml file changes (Figure 3).

Pipeline execution: Modify .gitlab-ci.yml to trigger a pipeline on updates to the EE definition according to your environment. Use a shell script such as casc-ee.sh to simulate a GitLab webhook, which launches the fetch_and_build_ee job template on the Ansible Automation Platform controller and passes two keys: one specifying the URL of the DE/EE definition to rebuild and push to the private hub, and one random key. Because the random key changes on every run, the controller treats each request as a new GitLab webhook (see the last line in casc-ee.sh below):

    -d "{\"key\": "\"$1"\", \"key2\": "\"$WEBHOOK_UUID"\" }"

GitLab runner: Use a virtual machine or container to act as a GitLab runner, initiating the webhook to trigger updates. In our environment, we use a RHEL 8.9 VM with cURL installed and casc-ee.sh located under /usr/local/bin. If you decide to use this shell script, modify it so that it reflects your environment (your Webhook URL and Webhook Key).
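To make the two-key payload concrete, here is a minimal sketch of a script in the spirit of casc-ee.sh. It is not the script from the repository: the function names are hypothetical, WEBHOOK_URL and WEBHOOK_KEY are placeholders for the values generated by the job template, and the two X-Gitlab-* headers are our assumption about what the controller's GitLab webhook receiver expects, so confirm them against your controller version.

```shell
# Sketch of a webhook trigger; replace the placeholders with your own values.
WEBHOOK_URL="${WEBHOOK_URL:-https://controller.example.com/REPLACE-WITH-WEBHOOK-URL}"
WEBHOOK_KEY="${WEBHOOK_KEY:-REPLACE-WITH-WEBHOOK-KEY}"

# build_payload DEFINITION_URL RANDOM_KEY -> JSON body carrying the two keys.
# No JSON escaping is done, so the inputs must not contain double quotes.
build_payload() {
  printf '{"key": "%s", "key2": "%s"}' "$1" "$2"
}

# new_uuid -> a fresh random value so that every request body is unique.
new_uuid() {
  if command -v uuidgen >/dev/null 2>&1; then
    uuidgen
  else
    cat /proc/sys/kernel/random/uuid
  fi
}

# trigger_build DEFINITION_URL -> POST the payload to the controller webhook.
# The X-Gitlab-* headers below are an assumption, not taken from the article.
trigger_build() {
  curl --fail --silent --show-error \
    -X POST "$WEBHOOK_URL" \
    -H "Content-Type: application/json" \
    -H "X-Gitlab-Event: Push Hook" \
    -H "X-Gitlab-Token: $WEBHOOK_KEY" \
    -d "$(build_payload "$1" "$(new_uuid)")"
}
```

Keeping the payload construction in its own function makes it easy to inspect the JSON body locally before any request is sent to the controller.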

Now, let's see it in action.

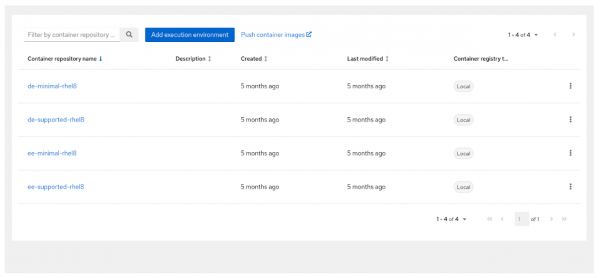

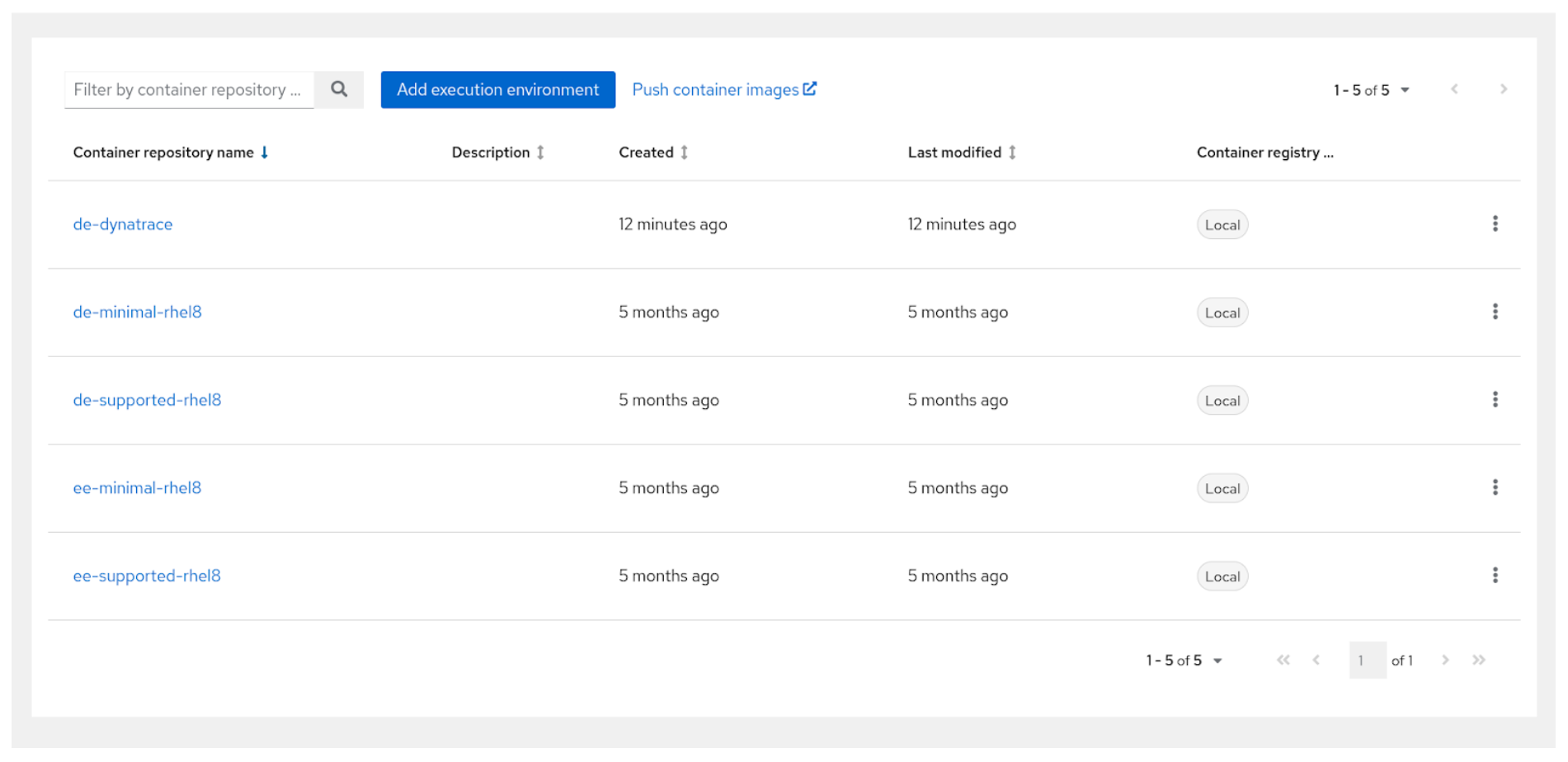

First, we observe that the de-dynatrace decision environment is not present in the list of DEs/EEs on our Private Automation Hub (Figure 4).

Let's clone our example-ee repository so we can modify it, stage it, and push it back to its remote location:

    [dleroux@barra ansible]$ git clone https://some-gitlab-url.com/keith-dan/example-ee
    Cloning into 'example-ee'...
    warning: redirecting to https://some-gitlab-url.com/keith-dan/example-ee.git/
    remote: Enumerating objects: 176, done.
    remote: Counting objects: 100% (146/146), done.
    remote: Compressing objects: 100% (125/125), done.
    remote: Total 176 (delta 43), reused 0 (delta 0), pack-reused 30
    Receiving objects: 100% (176/176), 17.54 KiB | 17.54 MiB/s, done.
    Resolving deltas: 100% (52/52), done.
    [dleroux@barra ansible]$ cd example-ee/
    [dleroux@barra example-ee (main)]$ ls
    de-dynatrace.yml
    [dleroux@barra example-ee (main)]$ cat de-dynatrace.yml
    ---
    ee_list:
      - name: de-dynatrace
        tag: 1-0-0-1
        dependencies:
          galaxy:
            collections:
              - name: ansible.eda
              - name: dynatrace.event_driven_ansible
          system:
            - pkgconf-pkg-config [platform:rpm]
            - systemd-devel [platform:rpm]
            - python39-devel [platform:rpm]
            - gcc [platform:rpm]
          python:
            - psutil
            - six
        options:
          package_manager_path: /usr/bin/microdnf
    ee_base_image: "10.8.109.76/de-minimal-rhel8:latest"
    ee_image_push: true
    ee_prune_images: false
    ee_create_ansible_config: false
    ee_pull_collections_from_hub: false
    ee_create_controller_def: true
    [dleroux@barra example-ee (main)]$ sed -i 's/1-0-0-1/1-0-0-2/g' de-dynatrace.yml
    [dleroux@barra example-ee (main *)]$ git add .
    [dleroux@barra example-ee (main +)]$ git commit -m "increased tag to 1-0-0-2"
    [main c046617] increased tag to 1-0-0-2
    1 file changed, 1 insertion(+), 1 deletion(-)
    [dleroux@barra example-ee (main)]$ git push
    warning: redirecting to https://some-gitlab-url.com/keith-dan/example-ee.git/
    Enumerating objects: 5, done.
    Counting objects: 100% (5/5), done.
    Delta compression using up to 16 threads
    Compressing objects: 100% (3/3), done.
    Writing objects: 100% (3/3), 326 bytes | 326.00 KiB/s, done.
    Total 3 (delta 1), reused 0 (delta 0), pack-reused 0 (from 0)
    To https://some-gitlab-url.com/keith-dan/example-ee
    a932d4b..c046617 main -> main
    [dleroux@barra example-ee (main)]$

This action launched the fetch_and_build_ee job template on our Ansible controller, as shown in Figure 5.

Once the job template ends successfully, the de-dynatrace decision environment appears on our Private Automation Hub, as shown in Figure 6.
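The tag bump done by hand with sed in the walkthrough above can be scripted so teams do not have to edit the version manually. This is a minimal sketch with hypothetical helper names (bump_tag, bump_definition); it assumes the dash-separated tag format shown in de-dynatrace.yml, an unquoted tag value with no leading zeros in its last component, and GNU sed for in-place editing.

```shell
# bump_tag TAG -> print TAG with its last dash-separated number incremented.
# Assumes the final component is a plain decimal number without leading zeros.
bump_tag() {
  local tag="$1"
  local prefix="${tag%-*}"   # everything before the last dash
  local last="${tag##*-}"    # the final numeric component
  printf '%s-%s\n' "$prefix" "$((last + 1))"
}

# bump_definition FILE -> rewrite the first "tag:" value in FILE in place
# and print the new tag. Assumes an unquoted value such as "tag: 1-0-0-1".
bump_definition() {
  local file="$1" current next
  current="$(sed -n 's/^[[:space:]]*tag:[[:space:]]*//p' "$file" | head -n 1)"
  next="$(bump_tag "$current")"
  sed -i "s/tag: ${current}/tag: ${next}/" "$file"
  echo "$next"
}
```

Running bump_definition de-dynatrace.yml followed by git add, git commit, and git push would reproduce the manual sequence shown in the transcript.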

Delegating responsibility: A scalable solution

By empowering individual teams to manage their own GitLab repositories and EE definitions, you eliminate the bottleneck of centralized maintenance.

Teams can do the following:

- Maintain custom EE definitions independently.

- Modify .gitlab-ci.yml to specify their EE definition repository.

- Trigger pipelines as needed, ensuring autonomy and efficiency.

Automation engineers can achieve scalability and efficiency by leveraging tools like GitLab and Ansible Automation Platform to delegate responsibilities wisely, and let each team manage their configurations while maintaining oversight through standardized practices.

Limitations and mitigation strategies

The current dependency on containers.podman-1.13.0 (or earlier versions) exists because changes introduced in containers.podman-1.14.0 require modifications to the infra.ee_utilities collection.

The methods described here deviate from good Ansible practices. For instance, the fetch_ee_definition.yml playbook uses ansible.builtin.command in the task "Run the playbook to build the EE and upload it to Hub". Using the command module is not idempotent, but it's as good as it gets.

Additionally, relying on a .password file to automatically decrypt vault.yml carries security risks. Mitigating these risks requires additional controls, such as restricting access to the directory containing the .password file. The vault.yml file should reference passwords and authentication tokens from a non-administrative service account with limited privileges on your Private Automation Hub. Those privileges are listed under the Configure step in the Set up the build server section.
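Restricting access to the build directory can be as simple as tightening file modes. The following is a minimal sketch, assuming the .password file lives directly in the build directory; lock_down_build_dir and report_mode are hypothetical helper names, and report_mode relies on GNU stat.

```shell
# lock_down_build_dir DIR -> owner-only access to DIR and its .password file.
lock_down_build_dir() {
  local dir="$1"
  chmod 700 "$dir"
  if [ -f "$dir/.password" ]; then
    chmod 600 "$dir/.password"
  fi
}

# report_mode PATH -> print the octal permission bits (GNU stat syntax).
report_mode() {
  stat -c '%a' "$1"
}
```

For example, running lock_down_build_dir /home/ansible/build_dir as the ansible user leaves the directory readable only by that account. File modes are only one layer, so treat this as a starting point rather than a complete control.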

Next steps

This article demonstrated a structured method to automate creating and updating execution or decision environments. We also illustrated how to effectively delegate responsibilities across teams. Though we have shared this solution, we trust you to carefully weigh the rewards versus risks and implement adequate countermeasures in your environment.

To support you in your journey, check out the Red Hat communities of practice and Good practices for Ansible—GPA. For more information, refer to the Ansible Content Collections for configuration as code documentation.

All playbooks, scripts, a DE/EE example configuration file, and CI workflows from this article are available in the GitHub directory. Get started with a free trial of Red Hat Ansible Automation Platform.